Bad UX and User Self-Blame - "I'm sorry, I'm not a computer person."

In my recent podcast with UX expert and psychologist Dr. Danielle Smith the topic of "user self-blame" came up. This is that feeling when a person is interacting with a computer and something goes wrong and they blame themselves. I'd encourage you to listen to the show, she was a great guest and brought up a lot of these points.

Self-blame when using technology has gotten so bad that when ANYTHING goes wrong, regular folks just assume it was their fault.

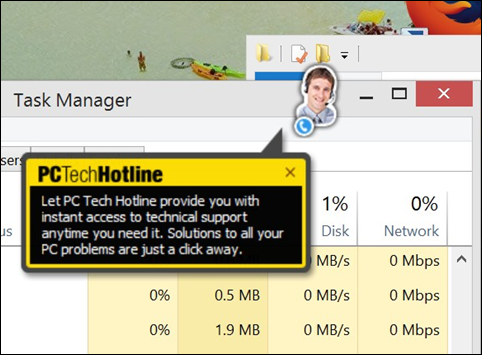

My dad got some kind of evil "PC Tech Hotline" on his machine today because some web site told him his "Google was out of date and that he should update his Google." So he did. And he feels super bad. Now, in this case, it was a malicious thing so it would be really hard to figure out how to solve this for all users. It's like getting mugged on the way to your car. It happens to the best folks in the best situations, it can't always be controlled. But it shouldn't cause the person to blame themselves! He shouldn't fear his own computer and doubt his skills.

People now publicly and happily self-identify as computer people and non-computer people. I'll meet someone at a dinner and we'll be chatting and something technical will come up and they'll happily offer up "Oh, I'm not a computer person." What a sad way to separate themselves from the magic of technology. It's a defeatist statement.

Get a Tablet

Older people and people who are new to technology often blame themselves for mistakes. Often they'll write down directions step by step and won't deviate from them. My wife did that recently with a relatively simple (for a techie) task. She wanted to record a lecture with a portable device, load the WAV onto the PC, even out the speech patterns, save it as a smaller file (MP3), then put it in Dropbox. She ended up writing two pages of notes while we went over it, then gave up after 30+ min, blaming herself. I do this task now.

Advanced users might say, you should get your non-technical friend a tablet or iPad. But this is a band-aid on cancer. That's like saying, better put the training wheels back on. And a helmet!

Tablets might get a user email and basic browsing and protect them from basic threats, but most also restrict them to one task at a time. And tablets have hidden UX patterns as well that advanced users use, like four-fingered-swipes and the like. I've seen my great aunt accidentally end up in the iPad task switcher and FREAK OUT. It's her fault, right?

This harkens back to the middle ages when the average person couldn't read. Only the monks cloistered away had this magical ability. What have we done as techies to make regular folks feel so isolated and afraid of all these transformative devices? We MAKE them feel bad.

There used to be a skit on Saturday Night Live called "Nick Burns, Your Company's Computer Guy" that perfectly expresses what we've done to users, and to the culture. Folks ask harmless questions, Nick gives precise and exasperated answers, then finally declares "MOVE." He's like, just let me get this done. Ugh. Stupid Users. Go watch Nick Burns, this is a 19 second snippet.

I basically did this to my own Dad today after 45 min of debugging over the phone, and I'm sorry for it.

I'm not a techie

When users blame themselves they don't feel safe within their own computer. They don't feel they can explore the computer without fear. Going into Settings is a Bad Idea because they might really mess it up. This UX trepidation builds up over the years until the user is at a dinner party and declares publicly that they "aren't a computer person." And once that's been said, it's pretty hard to convince them otherwise.

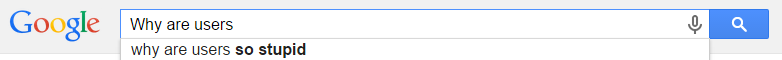

Even Google, the most ubiquitous search engine, with the most basic of user interfaces can cause someone to feel dumb. Google is a huge database and massive intelligence distilled down to the simplest of UIs: a textbox and a button. And really, it's just a textbox these days!

But we have all had that experience where we google for something for an hour, declare defeat, then ask a friend for help. They always find what we want on the first try. Was it our fault that we didn't use the right keywords? That we didn't know to not be so specific?

I think one of the main issues is that of abstractions. For us, as techies, there's abstractions but they are transparent. For our non-technical friends, the whole technical world is a big black box. While they may have a conceptual model in their mind on how something works, if that doesn't line up with the technical reality, well, they'll be googling for a while and will never find what they need.

Sadly, it seems it's the default behavior for a user to just assume it's their fault. We're the monks up on the hill, right? We must know something they don't. Computers are hard.

How do YOU think we can prevent users from blaming themselves when they fail to complete a task with software?

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.

It's simply a sad fact that being technically skilled is still looked upon by a lot of people in the western world as "geeky" or weird. Look how many people are proud to say that they aren't good at maths or go back 25 years and see how many grown adults happily told everyone that they couldn't program a VCR (one of the simplest "technical" tasks around).

Ultimately, there's no point pandering to people who don't want to learn, especially if it hinders the UX for more technical people or prevents technical support doing their job. Would you expect a plumber to walk you through how to do the repair when he visits your home or would you get out of the way and let him apply his skilled knowledge to the problem without interference?

We should design our UX's to be clear and unambiguous, so that users using it for the first time can do basic tasks without a massive learning curve; then they can progress with confidence rather than being afraid to do even the simplest of tasks. The more complex tasks should be easily and naturally discoverable with prolonged usage. It's not about pandering to people who don't want to learn, but about making it easier and more natural for them to learn.

I'll give you an example. If a warning popped up on your screen right now saying you have malware or a virus, what would you do? I'd be willing to bet you would either know exactly how to clear it using your AV software, or even more likely you would just google the name of the virus to know what its deal is and then follow the instructions on how to remove it. A few more examples:

- You want to stop a PDF being printed or edited

- You want to create a screencast of your desktop

- You want to convert a video to divx

I have barely any more expertise than a non-tech on these sorts of problems and many others. Half the time when I'm helping all I'm doing is doing the same google search they could have done and then looking through the results.

Building applications that are usable by people who have high technical levels or who are willing to go learn how to use them before doing the most basic things is the easy button for UX. This is the "you have managed it to make it not suck". It will cause people with lower technical levels to get frustrated, it will cause them to have an even lower tolerance for technology on the next thing they try. It causes me to make sarcastic remarks about your product or service on twitter, despite being highly technical. It limits the number of people that can get benefit from your thing (and put money in your pocket) while, for some, also reducing their self-worth a little more and strengthening their resolve that they aren't computer people.

And this all goes back to Scott's point, because every one of these apps that they come in contact with will increase their frustration level and make them more reluctant to try the next app, or less willing to start learning how to use it, regardless of their starting level of technical interest. There are no natural "non-computer people", there are only people we have trained to expect frustration, annoyance, and occasional illogical magic when they manage to press the right 5 poorly labeled buttons in 6 places and receive the result they wanted.

As an aside, I find it interesting that there's this mystical group of computer people that don't mind looking up hard stuff, but draw the line at the hard topic of making technology more approachable to less experienced folks.

I've had to learn a lot about making the application for the people using it and not for myself. That doesn't mean that I have to dumb down the interface, it means I have to think outside of what I know and learn how the users think and provide them with the experience that makes sense.

I find myself apologizing on behalf of other developers to "non-technical" people and try to help them figure out why they ran into a problem or what a certain feature in an application really means. I never take over a task for someone. I don't learn when someone does something for me and I don't expect someone to learn if I do something for them.

I encourage people to explore the settings in an application, especially before they start doing any real work with the application.

My own parents, by their own admission "not technical people," have actually become self-reliant. They make their own hardware and software purchases with confidence, they don't wait for my wife and me to "bless" sites they can visit, regularly video chat with us on Skype, and are a general PITA on social media by themselves. I've stopped being called for computer problems or purchasing advice altogether.

In any case, there is also the possibility of people like us, who built on one technical success after another, culminating in full Hanselmanitude. And that can happen to anyone.

I'm of two minds on it, but I think it really boils down to the car analogy that you and your guest made in the podcast.

Think about the average person's relationship to their computer in the same way that you think about the average person's relationship to their car (minus the love, in my experience).

On the one hand, your average person knows very little about how to troubleshoot anything in their car. They have a problem, they take it to the shop. Even regular maintenance tasks that are fairly straightforward (e.g. changing the oil) will lead to a visit to a mechanic. So on non-standard operational tasks (i.e. anything other than driving somewhere and parking), your user can't/doesn't feel comfortable.

You can relate that to your wife's two pages of notes on manipulating audio. If you were to give her instructions on how to change out the air filter in the car, I'd imagine she would have a similar list (assuming she's not - ha, I'm about to do this - a car person). Wow, this analogy is better than I thought.

You could argue that cars are superior to most software in that the basics of operation (again, driving and parking) are incredibly simple and intuitive. I would argue that that's not the case. You have to go to classes and pass a test to operate a car. What cars have going for them is that the UX hasn't changed appreciably in 100 years. This makes it seem incredibly intuitive because everyone is constantly exposed to it and it's the same when you're 15 as it is when you're 90.

My wife is an English professor. She's "not technical" but she's very willing to learn, and the approach I've tried to take with her is that, like learning anything else, the key is some repetition to create the muscle memory that allows you to integrate the steps into some sort of subconscious process (I know there's a term for this, but I'm not an education person).

I think the best thing we can do is to realize that there are varying degrees of comfort with technology. We should understand our respective audiences and strive to make their lives easier instead of our own. If we want power toys, we can build ourselves power toys, but it shouldn't be at the expense of our users. We should also give them the same abilities, in a kinder, more comfortable format, so that they do not feel powerless.

My 5 year old can't even read well yet, but she can operate most of the computerized devices in the house. Literate adults have no excuse.

Bottom line Scott...you're an Enabler.

My typical "helping" will often follow the pattern

Me: "What does the message say?"

Other person: "I don't know, I clicked it away."

Me: "OK, make it show again."

Other person: "It says I need to do x."

Me: "OK, do x."

Other person: "Great, that's fixed it. You're a genius."

OK, that's slightly simplified, but I've "solved" many computer problems by just sitting next to someone, telling them to read what they are being told, and giving them the confidence that they are not clicking the wrong thing (maybe falsely, depending on how much attention I'm paying).

In many ways, people self blame with computers because that's how most people react when interacting with something where they don't have common knowledge.

The difference with computers, compared to something like baking, is that people are expected to be more and more computer literate in order to function on a day to day basis, where other skills like baking are not deemed necessary for the average person.

I blame myself for it, after all I'm the one that clicked "Yes". The thing is though, I understand what I did. I know why it was dumb and now I know to never trust those sites. That's the key I think. Instead of trying to teach people about computers we need to teach people that computers can be understood. Get people to think "I can figure this out".

I needed to upgrade my iCloud storage. Apple gives away only a pitiful 5 GB free and the phone was nagging me that it could not complete a backup.

Here are the steps:

(1) See the nagging dialog box complaining about cloud storage space.

(2) Tap Buy More Storage.

(3) Choose an upgrade, then tap Buy and enter your Apple ID password.

(4) Get a prompt for payment and enter all your credit card information.

(5) Enter your Apple ID password. Again? Okay.

(6) Get a prompt for payment and enter all your credit card information. Wait. Why?

(7) Realize that this is broken and that nobody at Apple ever tested the scenario that an iCloud customer was not already an iTunes customer.

(8) Poke around the icloud.com website looking for a way to do it from a web interface. Fail to find anything useful there.

(9) Get the idea to pre-enter the payment information and associate it with your Apple ID before trying again.

(10) Open the iTunes Store app and look for a way to manage payment. Fail to find anything useful there.

(11) Go to Settings > iTunes & App Store and look for a way to manage payment. Fail to find anything useful there.

(12) Use Google to find the "Change or remove your payment information from your iTunes Store account (Apple ID)" instructions at support.apple.com. (https://support.apple.com/en-us/HT201266)

(13) On the Home screen, tap Settings.

(14) Tap iTunes & App Store.

(15) Tap your Apple ID. Sign in with your Apple ID.

(16) Tap View Apple ID.

(17) Tap Payment Information.

(18) Change your information and tap Done. After you change your payment information, the iTunes Store places an authorization hold on your credit card. Thanks.

(19) Use Google to find the "iCloud storage upgrades and downgrades" instructions at support.apple.com. (https://support.apple.com/en-us/HT201318)

(20) Go to Settings > iCloud > Storage.

(21) Tap Buy More Storage or Change Storage Plan.

(22) Choose an upgrade, then tap Buy and enter your Apple ID password.

(23) Done.

I like the idea of a safe, walled garden. I want to live there. But nobody has yet made one that is good enough. And the app stores aren't doing a good enough job keeping out the impostors and phishers. See this How-To Geek article: The Windows Store is a Cesspool of Scams

That core concept of sandboxed trusted applications that communicate with the system and other apps only through strict contracts and extensions should be the future of computing.

But iOS, ChromeOS, and Windows RT have been disappointing. The multi-finger swipe and button press/tap/hold gestures are often unintuitive and undiscoverable. They don't work on and with lots of different hardware. The one-and-only-one app store model has huge flaws--especially for internal line of business applications. (Let me choose my own gatekeepers. I should be able to designate which few sellers I trust and then download from only those places. Example: only Microsoft Store, Steam, and my company's IT department.)

I'm disappointed that Windows RT is dead. It seemed like maybe Microsoft actually wanted to eventually make it versatile enough for everyday computing.

This xkcd comic touches on how I feel: Troubleshooting

1) The customer is always right. (Many of the comments contradict this, but reality supports it.) When a user input does not match any of the expected inputs, then the UI should make reasonable suggestions and provide an Introduction and Detailed help (usually as a moderated wiki). If the program was not prepared for a user response, it is the developer's fault, not the user's fault. Search engine spelling suggestions are one step toward this.

1a) Provide a prompt about how to get started/continue if the user makes no input for a fairly long time (such as 60 seconds). Never assume that the user has abandoned the product.

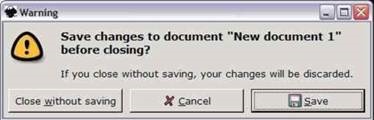

2) The UX must always remain friendly. It is not the user's fault that a step was skipped (such as saving a file in the example in the main article) or that an extra step was added. The file saving example should have been worded more like "Would you like to save this file (name) now?".

3) Make it hard to cause a catastrophe. Deleted files and partial edits should be stored for later in a background (invisible to normal users) version control system (with self cleaning when there is a lack of space).

4) Show results as the user enters changes or commands. Provide for almost infinite ability to go back (undo). Only request confirmation of a change when the change cannot be undone.

5) Treat users as individuals. Provide for multiple user experience levels (at least, beginner, experienced, and advanced). Experience levels include experience with the technology, experience with the application area, and experience with the product.

5a) When a user first uses any product, begin with reasonable defaults and assume that the user needs prompts to get started. Each prompt should have a "skip this next time" option.

5b) All commands should be available via an easy to use menu system (Microsoft still hasn't gotten this right). This is required for both new users and those who have disabilities. When a keyboard is available, always make pointing available from the keyboard.

5c) As the user becomes more experienced, suggestion prompts could automatically be suppressed.

5d) Provide keyboard shortcuts, movement triggered commands, and advanced menus (Advanced commands and Options) for more advanced users.

6) Minimize modality. Very few commands (like reset this device to factory settings and delete all user data) are "system modal": it does not make sense to do anything else until the user replies to this prompt. Only commands that cannot be backed-out should have a modal confirmation prompt. The rest of the time, users should be able to ignore the prompts and just keep working.

7) Use direct entry whenever possible. The spread sheet and the fill-able form are the most successful software interface styles.

8) Use breadcrumbs. Use a small part of the screen to provide a way to move between active tasks. Remember that the top level active task is selecting a task (the home screen). An analogue of this is (unless the user requests it) never blank the screen completely or turn off the device, just because the user hasn't made an entry.

9) Separate UI testing from UX testing. Test the user interface by both scripted and random inputs from an automatic testing system. Test the user experience by observing (and getting feedback from) users of various experience levels.
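As a rough illustration of points 1 and 4 above, here is a minimal Python sketch. Everything in it is hypothetical: the command list, the `suggest` helper, and the `Action` and `History` classes are names invented for this example, not part of any real product. It only shows one way an unexpected input could produce a suggestion instead of an error, and one way a confirmation prompt could be reserved for changes that cannot be undone.

```python
import difflib
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical command set, used only for this illustration.
KNOWN_COMMANDS = ["open", "save", "print", "export", "undo"]


def suggest(user_input: str, known: List[str] = KNOWN_COMMANDS) -> str:
    """Point 1: answer an unexpected input with suggestions, not blame."""
    if user_input in known:
        return f"Running '{user_input}'."
    matches = difflib.get_close_matches(user_input, known, n=3, cutoff=0.6)
    if matches:
        return f"I don't know '{user_input}' yet. Did you mean: {', '.join(matches)}?"
    return f"I don't know '{user_input}' yet. Type 'help' to see what I can do."


@dataclass
class Action:
    name: str
    do: Callable[[], None]
    undo: Optional[Callable[[], None]] = None  # None marks an irreversible action


class History:
    """Point 4: everything reversible can be undone; only irreversible actions ask first."""

    def __init__(self, confirm: Callable[[str], bool]):
        self._confirm = confirm          # assumed UI callback that asks the user
        self._done: List[Action] = []    # stack of undoable actions

    def run(self, action: Action) -> bool:
        # Interrupt the user only when the change cannot be taken back.
        if action.undo is None and not self._confirm(action.name):
            return False
        action.do()
        if action.undo is not None:
            self._done.append(action)
        return True

    def undo_last(self) -> bool:
        if not self._done:
            return False
        self._done.pop().undo()
        return True
```

In this sketch a mistyped command such as `sve` gets a "Did you mean" reply, ordinary edits run without any prompt because they sit on the undo stack, and only something like a factory reset reaches the `confirm` callback.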

1. Encourage and help all downloadable software to be in the app store. I'm talking everything: all open source software (anything on Tucows, download.com -- without ads --, etc), free software from competitors, paid software, for tablets and for desktops... everything. If it runs on Windows, it should be in the store. Do you want to install Firefox or VLC (not just Metro) or OEM drivers/software or your company's application? Go to the app store. It should be the go-to place for all software installations.

People shouldn't even consider using the browser as an installation medium. You never think of doing this on your phone, why would you on your desktop? Now, this would require a lot of legwork from Microsoft. It would have to go after software that's currently installed via the browser and convince them to move to the store, but I feel the future of the OS is at stake; it has to happen!!

2. Teach users how all this works. Do you know how a video game teaches you about how the game works? The OS itself should have "training" sessions on how this is done. Make the store app prominent and part of the OS -- not just another app. It should be as prominent as the volume changer or battery indicator.

Attempts to install software from the browser should get caught and redirected to the store (for most popular software and where false positives are low). If they search for a piece of software from the "Start menu", that is not installed locally, it should search for it in the app store and offer to install. There are many other subtle ways this could be achieved.

3. Microsoft should maintain a list of "bad actors" and prevent people from installing from those sources. I've seen cases where ransomware was sent to someone's email (Outlook 360) and then the user installed it (cost quite a bit of money to get the data back). This should not have happened! This was a pretty "popular" ransomware. Why did Outlook let it through?

If you want a better OS, which I think you do, please lobby internally for these... Time is running out and people have less and less patience for these kinds of things especially when they don't have to worry about any of this on their phone/tablet.

http://theuserisdrunk.com/

I think now is the time that we can start bringing new ways of connecting the physical and digital worlds to the mainstream. Things like haptic feedback, different display technologies like e-ink, voice and gesture interactions, and harnessing the massive computational power we now have for NLP.

All of this, and some creative thinking and design, can create interfaces that are more familiar, intuitive, and kinder to our bodies.

More recently driving a car was a rare and elite skill. And you had to be somewhat of a mechanic to use one as well since they broke down often.

The computer, or tablet, or anything else you can install software on is by nature a general purpose device. And learning to use one is a skill much like reading, or mathematics. With language you can express and capture any semantic idea and with mathematics you can express the underlying nature of the universe itself. And yet we take years to gain the skills to use written language and mathematics.

Perhaps the ultimate single use computing device is the calculator. They all look broadly the same and, at least since Reverse Polish notation has fallen from favor, they all work the same.

And yet, to use one I need to know significantly more than the "UI" of my calculator. To someone who is mathematically literate it makes sense. This is a key point. A user needs a second set of skills to interpret the UI.

The rub here is that any application, no matter how well designed and written requires the user to have additional and often non-trivial skills to use it.

With today's UIs we have by and large standardized on a UI language even across platforms.

But there are multiple ways to express an operation in the language. Do I use a menu or a button or a slider or a swipe? Do I use bough or branch or twig or foliage to describe the characteristics of the tree I see in my yard? Interpretation of the UI is going to take understanding of the language of the UI. Unless we want to limit ourselves to the UI equivalent of "See the red ball. See Spot run! Run Spot run!"

Let's take it as read that there are bad UI's out there. And these days there is no real excuse for that. But the UI discussion always goes deeper than that.

In my view many of the complaints I see about UI design (other than plain bad UI's) come down to insufficient domain knowledge. Take a concrete example. Aircraft flight planning. Even the best flight planning application will be completely opaque to anyone who has no knowledge of aircraft operations. To the outsider it appears to be the worst UI ever. But to the operations guy it makes perfect sense and he loves it. It cannot be self explanatory.

And any UI that approaches self explanatory will be unusable in other ways, like trying to write a paper on nanotechnology in simple language. "See the atom. See the probe. See the probe move the atom. See the atom dance!" Painful isn't it?

Using e-mail takes some external knowledge about how e-mail works. Like using a calculator requires understanding of how to add 2 numbers together. Without that the operations have no context. Heck, they used to teach letter writing in school. It was not assumed that because you could write you could correctly format a letter, address an envelope correctly, put a stamp on it and drop it in a mailbox. Why then do we assume e-mail should be somehow intuitive merely because we can type?

To be sure there are badly written UIs. And malware is no different than the con-men that used to go door-to-door looking for easy marks. Only the technology has changed. It's a fact of life and it cannot be solved through technology, unless you can never install anything. Awareness of that has to be taught, like "stranger danger" taught to kids. Your "Google being out of date" is the modern equivalent of "Do you want to see some puppies?"

So what does this all really mean?

We have always learned skills from our mentors. We do not magically learn stuff without putting in effort. We didn't just learn to read or drive a car because the book was well laid out or the controls were well designed. Both those skills are built on a foundation of other skills that we were taught by our mentors. Thus it always was and thus it always will be. Using technology is like learning to read. It takes effort and work to get good at it and you need the domain knowledge to use it effectively. Outstanding domain knowledge can overcome poor UI design. Excellent UI design will never overcome poor domain knowledge.

Thus it always was, and thus it always will be. Eventually technology will not be an elite skill but a common skill, like reading and writing are today. All it takes is time.

"No way! This whole design here is all my doing. Probably a good idea if I keep it a bit dumb. Don't want a Skynet situation on our hands. But seriously, I do want to make it better. It's a constant work in progress and I value your feedback. What actions did you immediately, instinctively think to do to accomplish what you want to do?"

That way the UI is no longer personified as 'smart and flawless' or given any kind of merit or social weight over the user. There's prior social and pop culture context to any kind of modern technology (eg 'Skynet'). You only need to remind the user that it is the same as building a car or any other 'meatspace' tool or mundane object we all never assume to be 'smart' like a computer.

A bit harsh, but at this point I would say: give it up. Really.

Tablet apps are the big, modern example of "Easy to Use" gone too far -- they don't expose any errors to the user, even when they're something fixable by the user, so you're left uninstalling the app and reinstalling it, losing some or all of your data in the process, in the hope that *maybe* some magic will happen.

"Put up a dialog box telling users they are filthy, unwashed idiots with ugly children. Tell them they have just committed error -2147211501 (&H80042713). Display the message "I am unworthy!" and make them click OK. Then terminate the program, throwing all their wretched, unsaved work into the bit bin."

At least our error messages could be much better if MS made the Win7 "Task Dialog" usable in .NET.

https://msdn.microsoft.com/en-us/library/windows/desktop/bb760441%28v=vs.85%29.aspx

> "user self-blame" came up.

If only you had listened to her before the release of "Modern" UI and Visual Studio 2012...

"UX is like a joke, if you have to explain it, it isn't very good"

I think it's worth looking at the other side of the coin. Think about how bad software used to be and how much less we as developers focused on the UX than today.

In my opinion, Apple shifted the focus to UX in a big way and I think things are improving in that regard.

We still have a long way to go. Many people do not find software accessible even today. But just think about how many more people are using software effectively today compared to 20, 15, 10 or 5 years ago. Huge segments of the population are getting more and more effective with software.

My dad who is in his 70s and is the opposite of a techie, can use Facebook, read news and check his stock account. 10 years ago, he couldn't do any of it.

I don't believe he has a solution (I'm partway through the book, just discovered it again after reading it for the first time 20+ years ago) - he puts the onus squarely on the people designing the interactions.