How to simulate a low bandwidth connection for testing web sites and applications

Facebook just announced an internal initiative called "2G Tuesdays" and I think it's brilliant. It's a clear and concrete way to remind folks with fast internet (who have likely always had fast internet) that not everyone has unlimited bandwidth or a fast and reliable pipe. Did you know Facebook even has a tiny app called "Facebook Lite" that is just 1 MB and has good support for the slower networks common in developing markets?

You should always test your websites and applications on a low bandwidth connection, but few people take the time. Many people don't know how to simulate low bandwidth or think it's hard to set up.

Simulating low bandwidth with Google Chrome

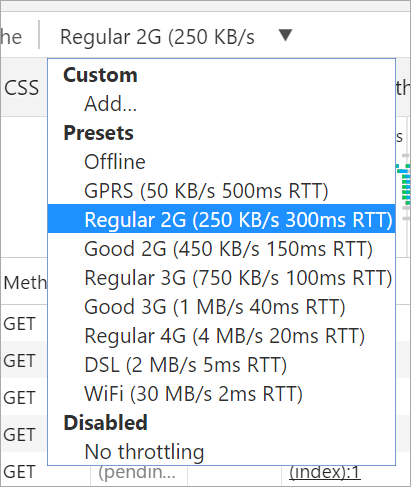

If you're using Google Chrome, you can go to the Network Tab in F12 Tools and select a bandwidth level to simulate:

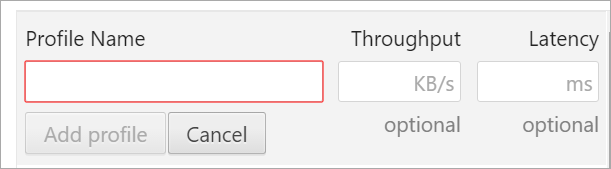

Even better, you can add a custom profile to specify not only throughput but also latency:

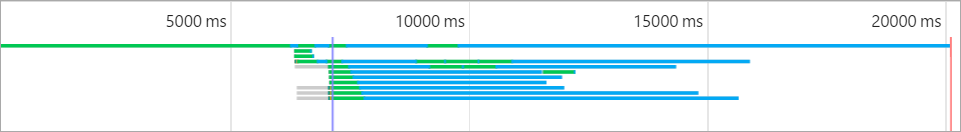

Once you've set this up, you can also click "disable cache" and simulate a complete cold start for your site on a slow connection. 20 seconds is a long time to wait.
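
If you want a rough feel for the numbers before you even open the dev tools, a back-of-the-envelope estimate can help. The sketch below is only a ballpark model, not how Chrome computes anything: the function name, the page size, and the profile values are all made up for illustration, and it assumes requests happen one after another with each paying one full round of latency.

```python
def estimate_load_seconds(page_bytes, throughput_kbps, latency_ms, requests=1):
    """Rough estimate of a cold page load on a throttled link.

    Assumes requests run one after another and each pays one full round of
    latency; real browsers overlap requests, so treat this as a ballpark.
    """
    transfer_seconds = (page_bytes * 8) / (throughput_kbps * 1000)
    latency_seconds = requests * (latency_ms / 1000)
    return transfer_seconds + latency_seconds


if __name__ == "__main__":
    # Hypothetical page and profile: 1 MB over 20 requests at 250 kbps, 300 ms latency.
    print(f"{estimate_load_seconds(1_000_000, 250, 300, requests=20):.1f} s")
```

With those invented numbers the estimate comes out to roughly 38 seconds, most of it raw transfer time. The real measurement in the browser is what counts, but it's a quick way to see whether bytes or round trips dominate.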

Simulating a slow connection with a Proxy Server like Fiddler

If you aren't using Chrome or you want to simulate a slow connection for your apps or other browsers, you can slow things down with a proxy server like Fiddler or Charles.

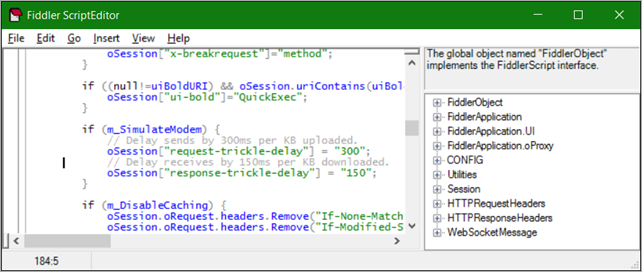

Fiddler has a "Simulate Modem Speeds" option under Rules | Performance. You can change the values it uses from Rules | Customize Rules by editing the delays, in milliseconds per KB, in the script under m_SimulateModem:

    if (m_SimulateModem) {
        // Delay sends by 300ms per KB uploaded.
        oSession["request-trickle-delay"] = "300";
        // Delay receives by 150ms per KB downloaded.
        oSession["response-trickle-delay"] = "150";
    }
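
Delays in milliseconds per KB aren't the most intuitive unit, so here is a small Python sketch for converting between a trickle delay and an approximate line speed. It assumes a KB means 1024 bytes and that the delay is the only thing limiting throughput; both are my assumptions, not something the Fiddler script states, and the function names are just for illustration.

```python
BYTES_PER_KB = 1024  # assumption: the proxy counts a KB as 1024 bytes


def trickle_delay_to_kbps(delay_ms_per_kb):
    """Approximate throughput (kilobits/s) implied by a per-KB delay in ms."""
    bits_per_kb = BYTES_PER_KB * 8
    return bits_per_kb / delay_ms_per_kb  # bits per ms equals kilobits per second


def kbps_to_trickle_delay(target_kbps):
    """Per-KB delay in ms that approximates a target throughput in kilobits/s."""
    return (BYTES_PER_KB * 8) / target_kbps


if __name__ == "__main__":
    print(f"300 ms/KB is about {trickle_delay_to_kbps(300):.1f} kbps up")
    print(f"150 ms/KB is about {trickle_delay_to_kbps(150):.1f} kbps down")
    print(f"56 kbps needs about {kbps_to_trickle_delay(56):.0f} ms/KB")
```

Under those assumptions, the default 300 and 150 work out to roughly 27 kbps up and 55 kbps down, which is about what you'd expect from a setting named after modems.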

There are a number of proxy servers you can get to slow down traffic across your system. If you have Java, you can also try out one called "Sloppy." What's your favorite tool for slowing traffic down?

Conclusion

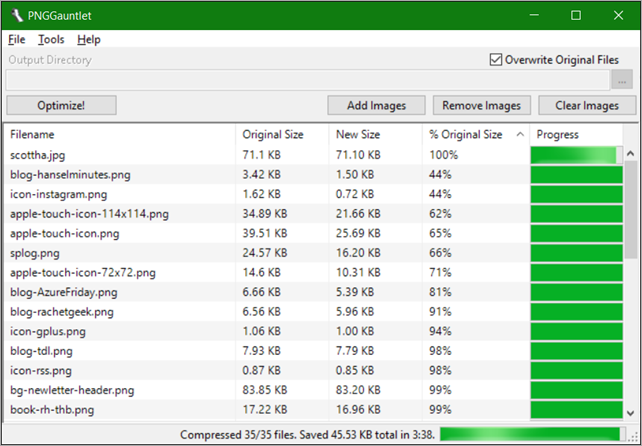

There is SO MUCH you can do to make the experience of loading your site better, not just for low-bandwidth folks, but for everyone. Squish your images! Don't use PNGs when a JPEG would do. Minify! Use CDNs!

However, step 0 is actually using your website on a slow connection. Go do that now.

Related Links

- Bloggers: Know when to use a JPG and when to use a PNG and always Squish them both

- The Importance (and Ease) of Minifying your CSS and JavaScript and Optimizing PNGs for your Blog or Website


About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.


When I first read "2G Tuesday" I thought it was brilliant. That was until I read the story. The title should be "Optional 1 hour 2G Tuesday".

<rant>

Slow bandwidth is not restricted to developing nations. I live in Australia's capital city. The best we can get at home is ADSL2 with max 5.2 Mbps down / 0.8 Mbps up. Don't get me started on the unbelievably poor 3G coverage at home.

</rant>

It would be great to see all US tech companies have 2G / 3G Tuesdays at least once a year.

I just use conference Wi-Fi. Guaranteed slowness and dropped connections galore!

:)

Actually, I wish we could convince everybody to do this. If I'm looking at pictures I may expect it to be slow, but if I'm actually trying to accomplish work, I expect speed, and couldn't care less if the website looked like crap.

It's worth taking it for a spin to see how your site does on a mobile in Bangalore (and weep).

Simon, you are correct: WPT has all sorts of great features for synthetic testing of sites. It is my #1 web performance tool.

TrvisJ - Images still make up most of the payload, but that does not keep the page from rendering. I research this stuff all the time. Right now JavaScript is the biggest problem. Too many poorly designed frameworks, etc. But third party scripts also cause way too many problems. Notice all the articles about big performance gains with Safari's new 3rd party script blocking feature.

And it is not all about page load; it is about how your content gets rendered. And of course, what triggers re-renders and page layouts? You have to be smart and know how browsers work. You MUST realize the browser is not the server and network latency plays a big role in all this.

If you include many JS files you should always have a look at the network tab of the dev tools too. Each JS file is an HTTP request. Having 5 files from a CDN and another 5 from your domain can actually be slower than serving one huge bundle.

Also you should always serve resized images. I love this component for that: http://imageresizing.net/

For sure you should minify, cache and gzip everything you can.

But with all that good advice I often get into situations where I fight with caching. On the one hand I need it; on the other hand it can drive me crazy. That's why I often end up compressing and bundling everything but suppressing the cache by simply adding a timestamp to the query string of every static file that could change on an application update.

How do you ensure that your users get the latest js code of a SPA for example? Any better idea than adding a magic query string, that works even in older IE versions or behind proxy servers?
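
One common alternative to a timestamp is a fingerprint derived from the file's contents, so the URL only changes when the file actually changes. The Python sketch below is a minimal illustration, not a recommendation of any particular build tool: the function names, the `v` parameter, and the 8-character digest length are all arbitrary choices made for the example. The second function puts the fingerprint in the file name instead, which avoids relying on how intermediaries treat query strings, though it assumes your server or build step can publish the file under that name.

```python
import hashlib
import posixpath


def _fingerprint(content: bytes, length: int = 8) -> str:
    """Short hex digest of the file contents (length is an arbitrary choice)."""
    return hashlib.sha256(content).hexdigest()[:length]


def versioned_url(url_path: str, content: bytes) -> str:
    """Append the content fingerprint as a query string parameter."""
    separator = "&" if "?" in url_path else "?"
    return f"{url_path}{separator}v={_fingerprint(content)}"


def versioned_filename(url_path: str, content: bytes) -> str:
    """Embed the content fingerprint in the file name, before the extension."""
    root, ext = posixpath.splitext(url_path)
    return f"{root}.{_fingerprint(content)}{ext}"


if __name__ == "__main__":
    bundle = b"console.log('hello');"  # stand-in for the real bundle bytes
    print(versioned_url("/js/app.js", bundle))
    print(versioned_filename("/js/app.js", bundle))
```

Because the fingerprint is stable for unchanged files, returning visitors keep their cached copies across deployments that didn't touch those files, which a per-deploy timestamp can't give you.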

Here's what we actually get:

2G: 10 Kbps average, 35-50 Kbps peaks.

3G/3.5G, i.e. HSPA/HSDPA/HSPA+: 50 Kbps average, wildly varying bursts of 350 Kbps to 1 Mbps. The operator WILL throttle you down if you watch videos too long and/or if they suspect you of using torrents.

4G/LTE: IF you get 4G on your 4G phone, expect wildly varying bursts between 10 Kbps and 4 Mbps. No averages observed because 4G is a lie, just like the cake ;-)

Perhaps a little overkill for your use case, but it is awesome for testing for issues between all the parts of your distributed system.


However, there is another point to make about using images as layout. On this page, you use a small image http://www.hanselman.com/images/bg-line-chocolate.png and a CSS class to repeat that small image as the background to your header. This is a great example of using CSS and a very small footprint to accomplish a visually appealing graphic.

It is important when designing to always attempt to create graphical content using processing power when possible, and that means leveraging the rendering engine through smart use of CSS, as in the example above.

Thanks for the tip on the bandwidth testing. Luckily my worst case scenario was 3 seconds at 2G, which I think is acceptable. I often use lazy loading for images; however, this post made me consider the memory cost of loading a set of images in the background and what the implications may be on older devices. If only there were a way to cap the memory use of a page in Chrome, similar to this bandwidth tool.