Leap Motion: Amazing, Revolutionary, Useless

I desperately want it to work, don't you? Just like Minority Report. You wave your hands and your computer interface moves effortlessly.

Frankly, let's forget all that. I'll lower my expectations WAY WAY WAY down. I'd just like to wave my hand left and right and have the system move a window between my three monitors. Seems reasonable.

This is what I want to feel like with the Leap Motion.

Here's how I really feel using Leap Motion.

Venture Beat says:

The $80 device is 200 times more accurate than Microsoft’s Kinect, sensing even 1/100th of a millimeter motions of all 10 fingers at 290 frames per second.

Really? I find them both equally bad. 1/100th of a millimeter? That's lovely but it makes for an extremely hyperbolic and twitchy experience. I have no doubt it's super accurate. I have no doubt that it can see the baby hairs on my pinky finger - I get it, it's sensitive. However, it's apparently so sensitive that the software and applications that have been written for it don't know how to tell what's a gesture and what's a normal twitch.

My gut says that this is a software and SDK maturity thing and that the Leap Motion folks know this. In the two weeks I've had this device it's updated the software AND device firmware at LEAST three times. This is a good thing.

Perhaps we need to wear gloves with dots on them like Tom Cruise here. When you hold your fingers together and thumb in, Leap Motion sees one giant finger. Digits appear and disappear, so you are told to keep your fingers spread out if you can. This becomes a problem if your palm is turned perpendicular to the device. Since Leap Motion only sees up from its position on your desk, it can't exactly tell the difference between a palm down with fingers in and a hand on its side. It tries, but it gets it right about 80% of the time by my reckoning. That may sound great, except that the other 20% of the time it's completely insane.

I also found that wearing my watch confused the device into thinking I had a third hand. I'm not sure if it's glints off the metal of the watch, but I had to take it off.

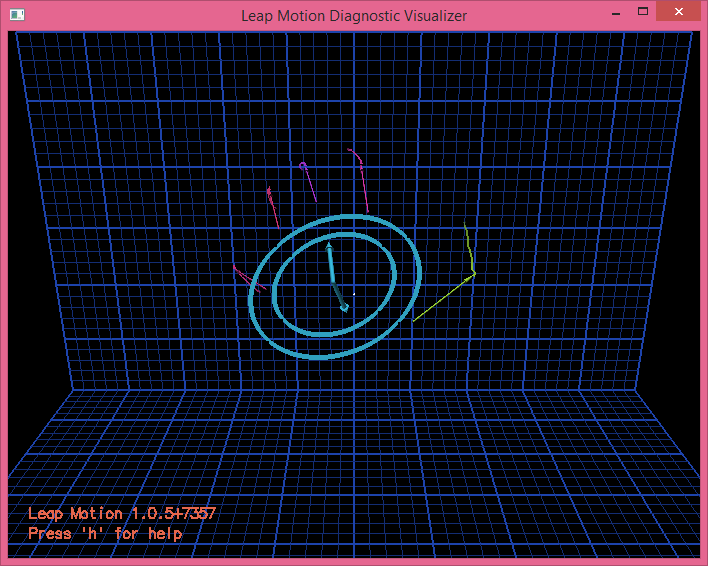

To be really clear, I totally respect the engineering here and I have no doubt these folks are smarter than all of us. Sure, it's super cool to wave your hand above a Leap Motion and go "whoa, that's my hand." But that's the most fun you'll have with a Leap Motion, today.

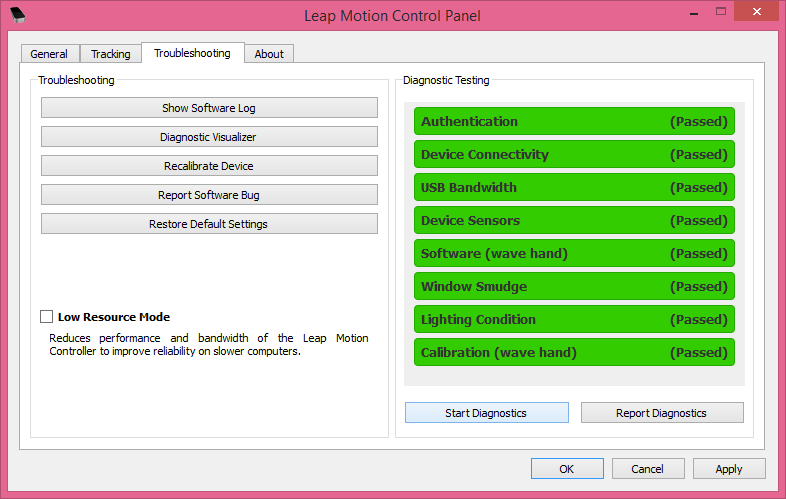

There is an excellent diagnostics system that will even warn you of fingerprints. You'll be impressed too, the first time you get a "smudge detected" warning.

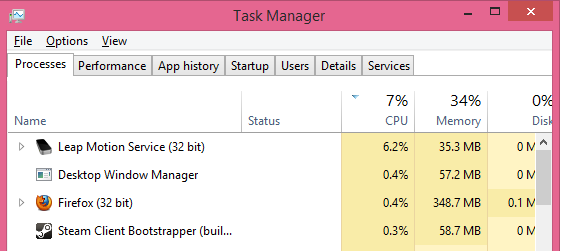

The software is impressive and organized, but on the down side, the Leap Motion Service takes up as much as 6-7% of my CPU when it sees something near it. That's a lot of overhead, in my opinion.

The software that I WANT to work is called "Touchless for Windows." It's launched from the AirSpace store. This Leap Motion specific store collects all the apps that use the Leap Motion.

Having a store was a particularly inspired move on their part. Rather than having to hunt around the web for Leap Motion compatible apps, they are all just in their "store."

The Touchless app bisects the space above the Leap Motion such that if you're in front of the device you're moving the mouse, and if you've moved through the invisible plane then you're touching the "screen." Pointing and clicking is a challenge to say the least.
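A minimal sketch of how a hover/touch split like this could be modeled, purely as an illustration. The function name `classify_zone`, the plane position, and the hysteresis margin are all assumptions of mine, not anything taken from Touchless or the Leap Motion SDK. It also assumes a depth axis where smaller values are closer to the screen. The margin on either side of the plane is there so a twitch right at the boundary does not flip between "hover" and "touch" on every frame.

```python
def classify_zone(tip_z_mm: float, previous: str = "hover",
                  plane_z_mm: float = 0.0, hysteresis_mm: float = 10.0) -> str:
    """Return "hover" or "touch" for a fingertip depth in millimeters.

    Assumption: smaller z means closer to the screen, and the invisible
    plane sits at plane_z_mm. All values here are illustrative.
    """
    if previous == "hover":
        # Require the finger to push clearly past the plane before "touching".
        return "touch" if tip_z_mm < plane_z_mm - hysteresis_mm else "hover"
    # Once touching, require pulling clearly back out before releasing.
    return "hover" if tip_z_mm > plane_z_mm + hysteresis_mm else "touch"
```

Feeding the previous result back in on each frame is what gives the stickiness: a fingertip wobbling a few millimeters either side of the plane keeps whatever state it already had.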

Scrolling on the other hand is pretty cool and it's amazing when it works. You move your hand in a kind of forward to backward circle, paging up through web sites.

It's not foolproof by any means. Sometimes the Leap Motion will go into what it calls "robust mode." I am not sure why the device wouldn't want to be "robust" all the time. It seems that what this really means is "degraded mode." There are threads on the Leap Motion forums about Robust Mode. Lighting seems to play a large factor.

Here's me attempting to use the Leap Motion with Touchless to do anything to this folder. Open it, move it, select it, anything.

Today, I look at the Leap Motion as an amazing $80 box of potential. Just like the Kinect, the initial crop of apps is mostly just technology demos. It remains to be seen if the Leap Motion will mature in the coming months. I still think it's an amazing gadget and if you have $80 to blow, go for it. Set your expectations low and you won't be disappointed.

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.

Right now I view this as a hacker toy, part of a larger stack for something better (i.e. mashing it into the Rift), but it shouldn't be sold in a retail store for mums and dads yet!

The Oculus Rift is succeeding for the same reason. That being that it's actually something real. Something you can hold.

Ryan - I will check it out!

Keeping it now and waiting on my Oculus Dev kit. I thank you and I am sure my wife will curse you :)

Google Earth has worked everywhere and is a cool demo of what's possible, but definitely early days.

UX/non-tech peeps with these atoms/SDK can now take on the challenge of solving, experimenting and playing with new experiences - which I've been dreaming of since I was a kid.

Sure, maybe the Leap is buggy and will only work 80% of the time, maybe it will fail - but I can play, code and touch it now. Amazing.

But I urge you to check out this new app GameWave. It is like TouchLess app on steroids and highly configurable. As usual you have to get used to it, but once you do it's really cool.

I'm sure in upcoming months there will be more interesting apps that will make Leap Motion very usable on daily basis.

That's lovely but it makes for an extremely hyperbolic and spastic experience.

Hi Scott, I understand the word has different meaning in the US, but to UK readers, the term "spastic" is an offensive term for people with Cerebral Palsy and disabled people in general. I know you wouldn't want to offend anyone so just thought you should know.

My current view of the device however is that it is not the most ergonomic path to interacting with your PC/Mac. I find that any app that requires you to hold your hand/s up for prolonged periods of time is nothing more than an energy drain. The cool factor is merely visual but in the actual sense it doesn't add value to your interactive experience. If there is one thing the LEAP does well it's that it makes you appreciate your mouse more. One of the most painful things I've seen when it comes to user interaction is when a friend of mine tried using it for a while. He was fatigued and overwhelmed.

I think the word 'potential' is being thrown around in an effort of folks to validate their purchase. The honest thing is that they possess a novelty item. They were drawn in by the marketing which was just that, marketing. It's a psychological effect which keeps them grasping on the 'potential' of the device.

Another shiny gadget for the dusty drawer of doomed electronics I think...

Now though I do use it, but only to control the TV (turn off sound, pause/play etc.) So on the upside, no more greasy remotes :)

That said, you should check out the SDK, if only to learn what awesome code it really is. I focused on their JavaScript API, which uses websockets to connect to the device driver running on the PC. Think about that: You can write _javascript_ that runs anywhere and talks to this thing. You could Leap-enable your blog! ;) It's a totally rad use of websockets and fun to do. With just a few LOC I got together a little menu: hold up 1 finger for item 1, 2 for 2, etc. To your comment about developers handling sensitivity, I did find that I had to 'debounce' a bit: I had to check that the user held 2 fingers up for a few milliseconds or else it could randomly pick a menu item.
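The debounce idea described here can be sketched roughly as follows. This is not the commenter's code and it does not touch the real Leap JavaScript API. It is a standalone Python illustration in which the class name `FingerCountDebouncer`, the `hold_ms` threshold, and the per-frame `(finger_count, timestamp)` input are all hypothetical. A menu item is only picked once the same finger count has been seen continuously for the hold time.

```python
from typing import Optional


class FingerCountDebouncer:
    """Report a finger count only after it has been held steadily.

    Hypothetical helper: hold_ms is an assumed tuning value, and frames
    are assumed to arrive as (finger_count, timestamp in ms) pairs.
    """

    def __init__(self, hold_ms: float = 300.0) -> None:
        self.hold_ms = hold_ms
        self._candidate: Optional[int] = None
        self._since_ms = 0.0
        self._fired = False

    def update(self, finger_count: int, now_ms: float) -> Optional[int]:
        """Feed one frame; return the count once when it is stable, else None."""
        if finger_count != self._candidate:
            # The count changed (or flickered), so restart the timer.
            self._candidate = finger_count
            self._since_ms = now_ms
            self._fired = False
            return None
        held_long_enough = now_ms - self._since_ms >= self.hold_ms
        if not self._fired and finger_count > 0 and held_long_enough:
            self._fired = True  # fire once per steady hold
            return finger_count
        return None
```

In use, each tracking frame would call `update(count, timestamp)` and open menu item `n` only when the call returns `n`. A one-frame flicker from 2 fingers to 3 and back simply restarts the timer instead of selecting item 3.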

I will also say, I feel they did a really good job managing the development process. They had regular releases and communicated well what was in each, and what was coming. I was left thinking "this is the way to run this type of project, I just hope it works out for them."

I used to do some volunteer work for the Spastic Society before it became SCOPE, FWIW. Calling someone a spastic is not good IMO, categorising them by their infirmity. But objecting to describing movements as spastic is going too far.

Couple of links for anyone interested: http://www.scope.org.uk/sites/default/files/pdfs/History/Scope_name_change.pdf, http://www.chortle.co.uk/news/2012/05/10/15359/ben_eltons_spastic_gaffe.

Even in Minority Report, they use a normal (futuristic) PC with a keyboard in their desks (image) and they have a special device for using the gloves.

The Kinect, on the other hand, fails at the opposite extreme from the Leap: because the current Kinect cannot detect fingers, it becomes about equally useless. There needs to be a happy medium between finger detection, range, and accurate gesture recognition before the whole of this technology will become useful.

Also Kinect 2 will recognize fingers. And I don't see why a next-generation Kinect couldn't be built into a laptop.

That being said, Leap provides an opportunity for a cool photo or a video, but a trackpad or a mouse provides hand-rest.

I really really have high hopes for the next revision, especially with the influx of funding they received.

The killer app/game for this would be Surgeon Simulator, Oculus Rift, and the Leap Motion all working together.

My write up: http://blog.sheasilverman.com/2013/08/friday-post-semester-startup/

same feeling. Great device, great potential, right price, good sdk but ... where's the deal ? mouse replacement ? new gesture (my shoulder hurts :) ) or some mix of leap / kinect / mouse that now simply doesn't work or no one yet imagined !

BTW, now it's quite useless, same experience as playing tennis with a wiimote (you can swing your wrist without acting like Agassi and breaking the lamps), without the fun of playing tennis on wii with some friends ! :) just to troll and bully some friends on this new toy ...

Another good one Scott.

Gianluca

Kane - Read the post again. I'm VERY aware of the potential. I just think it's not living up to it yet. The Kinect is the same way. Where's my Kinect for Windows app? At least the Leap Motion has Touchless...the Kinect doesn't even have that much. The thing is potential quickly becomes wasted potential.

And that's after only playing with it for a few minutes.

So, I think "useless" is a bit much. This may be a niche area of course, and I get that you acknowledge its potential, but I still think your title is a bit more than unfair.

Ten years later, we all have tablets.

Kinect and LeapMotion are the first tablets. Give it time.

Oh well, I guess I'll give it another try this weekend

It's kind of like the Sphero - it's a really cool idea - then you play with it for a few hours (or minutes) and you put it away.

Sad really...

I know their investor well and was lucky enough to play around with one for a few hours several months ago so I am biased. That said, you need, need to check this out, I will be personally offended if you don't. :)

https://airspace.leapmotion.com/apps/bettertouchtool/osx

While I agree it will take some time (1-2 years?) to reach their potential, it's the only way to make people think and move forward with interfaces.

--Steve

OK... but like how many people out there mold clay with a keyboard and experience this singular pain point? Oh, I've tried better touch tool etc... painful gorilla arm experience. My Leap is collecting dust on the shelf.

The (useless) app I enjoyed most is actually Fruit Ninja. Because it was designed to not need any "clicking", I find it much easier to control accurately than say "Cut the Rope". Compared to most other Airspace apps, I was pleasantly surprised.

http://code.google.com/p/coolshell/

...you can move windows around much more easily.

The first thing that came to mind as a practical use for the Leap Motion was as a way to control my HTPC from my couch. The effective range was stated to be about eight feet so it seems doable.

I imagine something like:

Index finger extended = basic cursor movement

Index and middle finger extended (like bunny ears) = left-click-hold (for dragging items, flexing the middle finger here would mean a left-click)

Opening fist to all five fingers (spread out) = pressing ENTER

4 fingers extended (spread out, no thumb) = middle-click-hold (for moving windows)

5 fingers extended (combined, with thumb extended; like a mitten) = pressing SPACEBAR (for pausing video playback, etc.)
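The mapping imagined above could be written down as a small lookup, sketched here under heavy assumptions. The `Pose` type, the finger names, the `spread` flag, and the action strings are all hypothetical, and the sketch assumes some upstream tracker already reports reliably which fingers are extended, which is exactly the part the device struggles with today.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional

ALL_FINGERS = frozenset({"thumb", "index", "middle", "ring", "pinky"})


@dataclass(frozen=True)
class Pose:
    """Hypothetical per-frame hand pose from some upstream tracker."""
    extended: FrozenSet[str]   # which fingers are extended
    spread: bool = False       # True when extended fingers are fanned apart


def action_for(pose: Pose, previous: Optional[Pose] = None) -> Optional[str]:
    """Map a pose to one of the actions in the list above, or None."""
    fingers = pose.extended
    if fingers == {"index"}:
        return "move_cursor"
    if fingers == {"index", "middle"}:
        return "left_button_hold"
    if fingers == ALL_FINGERS - {"thumb"} and pose.spread:
        return "middle_button_hold"
    if fingers == ALL_FINGERS:
        if not pose.spread:
            return "press_space"  # the "mitten" pose
        # ENTER only fires on the transition from a closed fist.
        if previous is not None and not previous.extended:
            return "press_enter"
    return None
```

Keeping the table in one function makes the ambiguity visible: the open, spread hand means ENTER only when it follows a fist, so the caller has to pass the previous pose along with the current one.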

So I am pretty pessimistic about Leap Motion replacing the keyboard/mouse/tablet screen. Taking account of this laziness trend, the next thing could be moving the cursor and writing with mouth, eyes and mind. Concerning mouth, with projects like Siri it is becoming more and more of a reality. Concerning eye and mind, the technology already exists for handicapped people, but it looks like it still needs to mature.

What they'll need to do is get the current detection reliable and then work on adding more gestures. A few months back, I figured out what I'd have mine for; snap your fingers to compile. (There's more in the same vein, but in essence, that sort of stuff.) That can't be done because the gesture can't be reliably detected. I can't even get the hand and digits to stay recognized for the duration of the gesture, not once. And yeah, I too get the "robust" mode, because the environment I'm using it in is a fourth as bright as in their own presentation video and apparently, the Leap is now suddenly a vampire.

All this said, I'm reasonably impressed with the way they did "web integration" without plugins; a part of the driver that runs a WebSocket server on a given port to which anything may listen, and for which they have a JavaScript library.

After a week or 2 of fiddling with this thing for O/S control (GameWave looked promising, as does the opensource AirKey project) I've come to a stark realisation:

Forget all the hoohah about "O/S's needing native gesture support" yaddayadda yadda

- Gesture interaction is as complex and personal as speech recognition.

It's the lexicon that's missing.

We need to teach a gesture lexicon to the system like the early speech recognition, before the recognition engines mature.

Expecting human dev's to nail down complex, intricate gesture control and then for the world to learn these complexities is folly. It's the planned economy all over again.

More than that, if I'm sitting in a slightly different position, the geometry of my arm>hand movements changes. The system used needs to recognise the abstract gesture regardless of orientation (the leap working from below makes that a little tricky) - coding that by hand is ridiculously complicated. Ask 100,000 users to train the system in a gesture they want to perform a common action and you'll be heading in the same direction as speech recognition, which is getting pretty damned good.

AFAIK, Leap have no capability or plans to crowd source (from owners in the wild) training some AI (probly neural nets with heuristics for context/combo moves) for their gesture recognition. < do that please!

To be honest, when it comes to crowd-sourced training of AI for useful functions, reCAPTCHA (used by Google), Google image identification and a few other Google projects have really shown what can be done. Come on Microsoft, Leap, Apple - get on board!

It's tricky, but hella fun!

Many thanks for your encouragement and inspiration to share my experience, and I say again: definitely not useless!

Here is a demo video on YouTube

We have developed a computer-vision product called Flowton Controller - combining the beautiful industrial design, gesture control, and voice recognition, aiming to bring the first NI (natural interaction) device to the modern homes.

We have just launched our Kickstarter campaign with our working prototype and design vision. We would appreciate it if you checked it out and let us know what you think. Thanks for the support!

http://www.kickstarter.com/projects/1863394632/flowton-technologies-gesture-control-device-for-yo

Sincerely,

Flowton Technologies

I agree that it's not very useful for controlling Windows. Few things can beat a mouse, touch screen, or great touch pad.

However, I bought one to use for controlling a synthesizer, and for that, it works quite well.

http://10rem.net/blog/2013/08/26/leap-motion-on-windows-81-for-midi-control-and-more

Pete

Because they did not pay adequate attention to precision of mapping commands, and got overly concerned with precision of the hardware itself without understanding a vocabulary they could map that precision to, the device is a toy or at best a performance art piece, like a theremin...quirky and neat to see, but limited to be used by DEVO or whatever.

Even the simplest movements often fail with leap, as opposed to the stability afforded by g-speak. To some extent that is to be expected because g-speak is (many $$$) versus $80, but in the end even $80 is a waste when the thing doesn't work.

Heck, cancel that. I decided to throw it away. I'd feel guilty taking someone's hard-earned money for this useless device (at any price). What a disappointment after all that publicity.

As you said, the product does have its rough edges. Maybe it's the fact that they marketed a very high precision artifact that makes you think it's going to be an amazing experience.

But there are a couple of problems with that: with high precision comes a lot of data, and therefore you get the problems you described, where the app now has to distinguish between your finger twitch and an actual action.
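One simple, non-learning way an app might tell a twitch from a deliberate move is to smooth the raw positions and ignore changes below a threshold. The sketch below is only an illustration of that idea. The function name `filter_positions` and the `alpha` and `dead_zone_mm` values are my assumptions, not anything from the Leap SDK, and real apps would need to tune them per gesture.

```python
from typing import List, Sequence


def filter_positions(samples_mm: Sequence[float], alpha: float = 0.3,
                     dead_zone_mm: float = 2.0) -> List[float]:
    """Smooth 1-D positions and suppress movements inside a dead zone.

    alpha is the weight of the newest sample in an exponential moving
    average; dead_zone_mm is the smallest change that gets reported.
    Both are illustrative values, not recommendations.
    """
    reported: List[float] = []
    smoothed = None
    last_reported = None
    for x in samples_mm:
        # Exponential moving average damps frame-to-frame jitter.
        smoothed = x if smoothed is None else alpha * x + (1 - alpha) * smoothed
        # Only report a new position once it leaves the dead zone.
        if last_reported is None or abs(smoothed - last_reported) >= dead_zone_mm:
            last_reported = smoothed
        reported.append(last_reported)
    return reported
```

The trade-off is latency: the heavier the smoothing and the wider the dead zone, the calmer the cursor, but the longer a real movement takes to register.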

The solution is not present yet, but what we need are learning algorithms that allow certain things to be taught to the application, so that it can quickly distinguish what the user is doing and throw away the unimportant things. After we have that, gestures will be created by devs, and then certain gestures can be incorporated into the device and made common between apps.

We don't actually know what's common or not, or what all cultures think of that, so how do you create a device and put all those things inside it if you don't even know what they are? Unless you impose it. Ex. How do you move a window from one screen to the other? You flick it? You point and click? You grab and release?

Even simple things like turning a page can be bad: let's say you are on a Windows 8 machine, reading a book. And let's assume the technology is fully supported in Windows (with a driver, like a mouse). The first problem is how you distinguish a right-to-left swipe in Windows to get the charms from one "in app" to turn the page. Or what if my language is the other way around (left to right), or it's a book vs a notebook (bottom up)? Now you will get the app bar instead.

Ideally people build things, early adopters buy them and set standards, then much later it becomes something real and usable by all, but it has to go to market first.

What it actually needs is another detector working from above so that you immerse your hands into a 3d "mesh" thereby avoiding the fingers disappearing when you turn your hand.

However, that is quite a level of complexity up from where it is now, but until it works it'll be a flop. Quite sad about that.
