The Weekly Source Code 59 - An Open Source Treasure: Irony .NET Language Implementation Kit

_d98ca824-762b-463f-a596-b11594411b29.png) One of the best, if not the best way to sharpen the saw and keep your software development skills up to date is by reading code. Sure, write lots of code, but don't forget to explore other people's

One of the best, if not the best way to sharpen the saw and keep your software development skills up to date is by reading code. Sure, write lots of code, but don't forget to explore other people's brains code. There's always fifteen different ways to create a "textboxes over data" application, and while it's interesting to take a look at whatever the newest way to make business software, sometimes it's nice to relax by looking at some implementations of classic software issues like parsers, lexers, and abstract syntax trees. If you didn't go to school or failed to take a compilers class at least knowing that this area of software engineering exists and is accessible to you is very important.

It's so nice to discover open source projects that I didn't know existed. One such project I just stumbled upon while doing research for a customer is "Irony," a .NET language implementation kit. From the CodePlex site:

Irony is a development kit for implementing languages on .NET platform. Unlike most existing yacc/lex-style solutions Irony does not employ any scanner or parser code generation from grammar specifications written in a specialized meta-language. In Irony the target language grammar is coded directly in c# using operator overloading to express grammar constructs. Irony's scanner and parser modules use the grammar encoded as c# class to control the parsing process. See the expression grammar sample for an example of grammar definition in c# class, and using it in a working parser.

Irony includes "simplified grammars for C#, Scheme, SQL, GwBasic, JSON and others" to learn from. There are different kinds of parsers that are grammar generators you might be familiar with. For example, ANTLR is a what's called a LL(*) grammar generator, while Irony is a LALR (Look Ahead Left to Right) grammar generator.

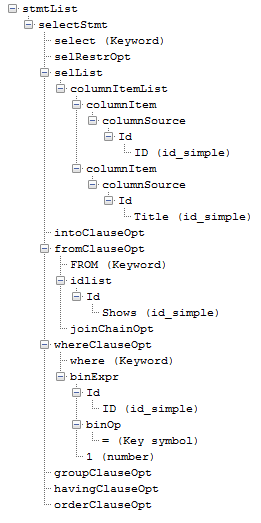

Here's a very basic SQL statement for getting a show from my Podcast database:

SELECT ID, Title FROM Shows WHERE ID = 1

Here's the Irony Parse Tree as viewed in the irony Grammar Explorer:

Typically, in my experience, when creating a parser one will use a DSL (Domain Specific Language) like the GOLD Meta Language building on the BNF (Backus-Naur Form) expression grammar. These domain specific languages are tightly optimized to express exactly how a language is structured and how it should be parsed. You learn a language to create languages.

Remember in the Irony intro text earlier? Let me repeat:

Unlike most existing yacc/lex-style solutions Irony does not employ any scanner or parser code generation from grammar specifications written in a specialized meta-language. In Irony the target language grammar is coded directly in c# using operator overloading to express grammar constructs.

What Roman from Irony has done here is use C# language constructs as if it's a DSL. A fluent parser, as it were. So he's using C# classes and methods to express the language grammar. It's a very interesting and powerful idea if you are interested in creating DSLs but not interested in learning other parsers like GOLD. Plus, it's just fun.

_e5609e46-a103-4c59-8d7e-67b3fdc0f307.png)

He has a very rich bass class called Grammar that you derive from, like:

[Language("SQL", "89", "SQL 89 grammar")]

public class SqlGrammar : Grammar {

public SqlGrammar() : base(false) { //SQL is case insensitive

...

But instead of a grammar language like this (simplified by me) to express a SQL SELECT Statement:

! =============================================================================

! Select Statement

! =============================================================================

<SELECT Stm> ::= SELECT <COLUMNS> <INTO Clause> <FROM Clause> <WHERE Clause>

<GROUP Clause> <HAVING Clause> <ORDER Clause><COLUMNS> ::= <RESTRICTION> '*' |

<RESTRICTION> <COLUMN List>...snip for clarity...<RESTRICTION> ::= ALL |

DISTINCT |<AGGREGATE> ::= Count '(' '*' ')' | Count '(' <EXPRESSION> ')' |

Avg '(' <EXPRESSION> ')' | Min '(' <EXPRESSION> ')' | Max '(' <EXPRESSION> ')' |

StDev '(' <EXPRESSION> ')' | StDevP '(' <EXPRESSION> ')' | Sum '(' <EXPRESSION> ')' |

Var '(' <EXPRESSION> ')' | VarP '(' <EXPRESSION> ')'<INTO Clause> ::= INTO Id |

<FROM Clause> ::= FROM <ID List> <JOIN Chain><JOIN Chain> ::= <JOIN> <JOIN Chain> |

...snip for clarity...

You'd have something like this instead, again, simplified so this doesn't turn into a giant listing of code rather than a blog post.

//Select stmt

selectStmt.Rule = SELECT + selRestrOpt + selList + intoClauseOpt + fromClauseOpt + whereClauseOpt + groupClauseOpt + havingClauseOpt + orderClauseOpt;

selRestrOpt.Rule = Empty | "ALL" | "DISTINCT";

selList.Rule = columnItemList | "*";

columnItemList.Rule = MakePlusRule(columnItemList, comma, columnItem);

columnItem.Rule = columnSource + aliasOpt;aliasOpt.Rule = Empty | asOpt + Id;

asOpt.Rule = Empty | AS;columnSource.Rule = aggregate | Id;

aggregate.Rule = aggregateName + "(" + aggregateArg + ")";

aggregateArg.Rule = expression | "*";

aggregateName.Rule = COUNT | "Avg" | "Min" | "Max" | "StDev" | "StDevP" | "Sum" | "Var" | "VarP";

intoClauseOpt.Rule = Empty | INTO + Id;fromClauseOpt.Rule = Empty | FROM + idlist + joinChainOpt;

joinChainOpt.Rule = Empty | joinKindOpt + JOIN + idlist + ON + Id + "=" + Id;

joinKindOpt.Rule = Empty | "INNER" | "LEFT" | "RIGHT";

whereClauseOpt.Rule = Empty | "WHERE" + expression;

groupClauseOpt.Rule = Empty | "GROUP" + BY + idlist;

havingClauseOpt.Rule = Empty | "HAVING" + expression;

orderClauseOpt.Rule = Empty | "ORDER" + BY + orderList;

Here the variables and terms that are being use to build the grammar were defined earlier like this, as an example:

var SELECT = ToTerm("SELECT"); var FROM = ToTerm("FROM");var AS = ToTerm("AS");

You might immediately declare, Dear Reader, that this is blasphemy! How can C# compete with a specialized DSL like the BNF? This is a C# shaped peg being shoved into a round hold. Well, maybe, but it's interesting to point out that the SQL GOLD Grammar is 259 lines and the C# version of essentially the same thing is 247 lines. Now, I'm not pointing out line numbers to imply that this is a better way or that this is even a valid 1:1 comparison. But, it's interesting that the C# class is even close. You might have assumed it would be much much larger. I think it's close because Roman, the Irony developer, has a very well factored and specialized base class for the derived class to "lean on." Each of his sample grammars are surprisingly tight.

For example:

- "Mini" Python - ~140 lines

- Java - ~130 lines

- Scheme - ~200 lines

- JSON - 39 lines

To conclude, here's the JSON grammar generator.

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using Irony.Parsing;

namespace Irony.Samples.Json

{

[Language("JSON", "1.0", "JSON data format")]

public class JsonGrammar : Grammar

{

public JsonGrammar()

{

//Terminals

var jstring = new StringLiteral("string", "\"");

var jnumber = new NumberLiteral("number"); var comma = ToTerm(",");

//Nonterminals

var jobject = new NonTerminal("Object");

var jobjectBr = new NonTerminal("ObjectBr");

var jarray = new NonTerminal("Array");

var jarrayBr = new NonTerminal("ArrayBr");

var jvalue = new NonTerminal("Value");

var jprop = new NonTerminal("Property");

//Rules

jvalue.Rule = jstring | jnumber | jobjectBr | jarrayBr | "true" | "false" | "null";

jobjectBr.Rule = "{" + jobject + "}";

jobject.Rule = MakeStarRule(jobject, comma, jprop);

jprop.Rule = jstring + ":" + jvalue;

jarrayBr.Rule = "[" + jarray + "]";

jarray.Rule = MakeStarRule(jarray, comma, jvalue);

//Set grammar root

this.Root = jvalue; MarkPunctuation("{", "}", "[", "]", ":", ",");

this.MarkTransient(jvalue, jarrayBr, jobjectBr);

}

}

}

Pretty clever stuff, and a well put together project and solution that is well structured. I could myself using this in a C# or Compiler class to teach some of these concepts. It's also a great little tool for creating small languages of your own. Perhaps you have a Wiki-dialect that's specific to your company and you want to get rid of all that nasty manual parsing? Or many you have an old custom workflow engine or custom expression system embedded in your application and never got around to changing all your parsing to a proper grammar? Maybe now is the time to get that little language you've been thinking about off the ground!

I encourage you, Dear Reader, to support open source projects like this. Why not go leave a comment today on your favorite open source project's site and just let them know you appreciate what they're doing?

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.

About Newsletter

https://github.com/ifandelse/CouchRS

http://code.google.com/p/sprache/

Sprache uses linq for the grammar definition. Check it out.

https://github.com/AlbertoMonteiro/Portugol-with-CSharp

For me, Irony seems like a tool to do the same in a different way. There is extensive formal documentation and books that explain how to create LL & LR grammars, remove ambiguities... I guess, in Irony you still need to write your grammar, remove ambiguities, remove left/right recursion depending on the parsing algorithm and at the end, you still have to rewrite that to Irony structures. Too much work, when you can just write it once and forget, let alone grammar modifications.

It seems to me that Irony grammars are a lot more complex to read because of the mix of the C# and the grammar definition (specially if it's a large language).

I may be wrong, and it could be because I had formal training at university and I understand the theory of writing a compiler.

I wonder how it performs compared with parser generators, because parser generators generate the code optimized and then compiled, so I guess the code should run quicker.

Any idea if it supports tree transformations?

I wonder what happened with the Oslo Modeling project and the M-Grammar feature that was intended to create parsers as well.

But I do agree that you're probably better off using a toolkit like Irony to get started quickly and easily.

1. Tried ANTLR to do some DSL parsing, but it turns out that transforming from the AST to the domain objects was hard. Irory make that very easy compared to ANTLR or Gold.

2. Expressing the grammar in BNF or EBNF harder if you just want to do some DSL parsing without getting involved deep into syntax. Irony express it in terms of rules on the object which is easy to comprehend and extend.

Sethu

I had a look at the calculator and the JSON examples with Irony and FParsec.

It seems that with FParsec the code it's even shorter.

The calculator example in FParsec is only 23 lines of code.

I think the difference is with FParsec I'm not forced to define the evaluation separately from the parser, which gives more flexibility.

Looks to me that FParsec it's still the best alternative for .NET

Part of the reason why declaring the rules directly in C# is because it's just easier. I don't know of any yacc/bison version that's well integrated with Visual Studio. If mplex/mppg could be written as custom tools that could be plugged in an invoked directly from VS, that would also be useful. I'm thinking about doing that, actually, but starting with Irony. Perhaps the rules could be specified in XML, in addition to .y. Then you run Custom Tool from inside VS, and you've got your parse table.

But, there's something deeper here to me about Irony that fascinates me. The parse table is built at runtime, not compile time. That means that it's theoretically possible to take whatever it is you're doing (perhaps you're writing a VS language service, for example), and make it generic so that it can get it's grammar at runtime (here again, maybe the grammar is loaded from XML or something). Then you can extend your generic language service by installing new grammars. It has the potential to be much more flexibile than statically generated state tables from yacc/bison/mppg/antlr, etc.

Once again thank you very much. Soon i will write my parser and post a link for you guys to have a look at it.

Cheers,

Desmond

Comments are closed.

I'm using it for about three months now and it is fantastic. Easy to learn and very powerful.

Also, Roman is fantastic and gives an excellent support on Irony's discussion list on CodePlex.

Great post!