Useful ASP.NET Core 2.2 Features

Earlier this week I talked about how I upgraded my podcast site to ASP.NET Core 2.2 and added Health Check features fairly easily. There's a ton of new features and so far it's been great running on my site with no issues. Upgrading from 2.1 is straightforward.

- Better integration with popular Open API (Swagger) libraries including design-time checks with code analyzers

- Introduction of Endpoint Routing with up to 20% improved routing performance in MVC

- Improved URL generation with the LinkGenerator class & support for route Parameter Transformers (and a post from Scott Hanselman)

- New Health Checks API for application health monitoring

- Up to 400% improved throughput on IIS due to in-process hosting support

- Up to 15% improved MVC model validation performance

- Problem Details (RFC 7807) support in MVC for detailed API error results

- Preview of HTTP/2 server support in ASP.NET Core

- Template updates for Bootstrap 4 and Angular 6

- Java client for ASP.NET Core SignalR

- Up to 60% improved HTTP Client performance on Linux and 20% on Windows

I wanted to look at just a few of these that I found particularly interesting.

You can get a very significant performance boost by moving ASP.NET Core in process with IIS.

Using in-process hosting, an ASP.NET Core app runs in the same process as its IIS worker process. This removes the performance penalty of proxying requests over the loopback adapter when using the out-of-process hosting model.

After the IIS HTTP Server processes the request, the request is pushed into the ASP.NET Core middleware pipeline. The middleware pipeline handles the request and passes it on as an HttpContext instance to the app's logic. The app's response is passed back to IIS, which pushes it back out to the client that initiated the request.
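For reference, here's a minimal sketch of how a project opts in to in-process hosting. In ASP.NET Core 2.2 the hosting model is selected with the `AspNetCoreHostingModel` property in the project file. The file below assumes a web project targeting `netcoreapp2.2`; your own project will have more in it.

```xml
<Project Sdk="Microsoft.NET.Sdk.Web">

  <PropertyGroup>
    <TargetFramework>netcoreapp2.2</TargetFramework>
    <!-- Run the app inside the IIS worker process instead of proxying to a separate process -->
    <AspNetCoreHostingModel>InProcess</AspNetCoreHostingModel>
  </PropertyGroup>

  <ItemGroup>
    <PackageReference Include="Microsoft.AspNetCore.App" />
  </ItemGroup>

</Project>
```

Setting the value to `OutOfProcess` switches back to the proxy model, which is also what a 2.2 app gets if the property is missing.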

HTTP Client performance improvements are quite significant as well.

Some significant performance improvements have been made to SocketsHttpHandler by improving the connection pool locking contention. For applications making many outgoing HTTP requests, such as some microservices architectures, throughput should be significantly improved. Our internal benchmarks show that under load HttpClient throughput has improved by 60% on Linux and 20% on Windows. At the same time the 90th percentile latency was cut down by two on Linux. See GitHub #32568 for the actual code change that made this improvement.
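To make "many outgoing HTTP requests" concrete, here's a rough sketch of the kind of workload that leans on the connection pool: one shared HttpClient issuing a batch of concurrent requests. The URL and the request count are placeholders I picked for illustration, and this is not a benchmark, so don't expect it to reproduce the numbers above.

```csharp
using System;
using System.Linq;
using System.Net.Http;
using System.Threading.Tasks;

public static class Program
{
    // One shared HttpClient, so every request draws from the same connection pool.
    // On .NET Core 2.1 and later, HttpClient uses SocketsHttpHandler by default.
    private static readonly HttpClient Client = new HttpClient();

    public static void Main(string[] args)
    {
        // Hypothetical endpoint: pass a real URL as the first argument.
        var url = args.Length > 0 ? args[0] : "https://example.com/";
        RunAsync(url, 50).GetAwaiter().GetResult();
    }

    private static async Task RunAsync(string url, int requestCount)
    {
        // Start all requests without awaiting each one, so they run concurrently
        // and contend for pooled connections.
        var tasks = Enumerable.Range(0, requestCount)
            .Select(_ => Client.GetAsync(url))
            .ToArray();

        var responses = await Task.WhenAll(tasks);

        var succeeded = responses.Count(r => r.IsSuccessStatusCode);
        Console.WriteLine($"{succeeded} of {responses.Length} requests succeeded");

        foreach (var response in responses)
        {
            response.Dispose();
        }
    }
}
```

The code doesn't change between 2.1 and 2.2. The improvement is in the handler underneath, so an app shaped like this should pick it up just by upgrading.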

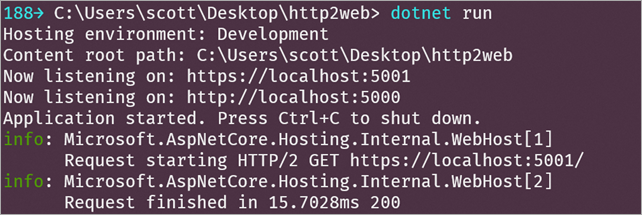

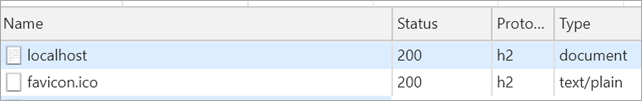

HTTP/2 is enabled by default.

HTTP/2 may be sneaking up on you as for the most part "it just works." In ASP.NET Core's Kestrel web server HTTP/2 is enabled by default over HTTPS. You can see here at both the command line and in Chrome I'm using HTTP/2 locally.

Here's Chrome. Note the "h2."

Note that you'll only be able to get HTTP/2 when ALPN (Application-Layer Protocol Negotiation) is available. ALPN is supported on:

- .NET Core on Windows 8.1/Windows Server 2012 R2 or higher

- .NET Core on Linux with OpenSSL 1.0.2 or higher (e.g., Ubuntu 16.04)
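If you'd rather not depend on the defaults, here's a minimal sketch that sets the protocols explicitly on a Kestrel endpoint and echoes back whichever protocol the request used. The port (5001) and the inline response are my own choices for illustration, and `UseHttps()` with no arguments assumes the ASP.NET Core development certificate is installed.

```csharp
using System.Net;
using Microsoft.AspNetCore;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Hosting;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Server.Kestrel.Core;

public class Program
{
    public static void Main(string[] args)
    {
        WebHost.CreateDefaultBuilder(args)
            .ConfigureKestrel(options =>
            {
                // Port 5001 is an arbitrary choice for this sketch.
                options.Listen(IPAddress.Loopback, 5001, listenOptions =>
                {
                    // Offer both protocols; ALPN picks HTTP/2 when the client supports it.
                    listenOptions.Protocols = HttpProtocols.Http1AndHttp2;
                    // Uses the development certificate; HTTP/2 here requires HTTPS.
                    listenOptions.UseHttps();
                });
            })
            .Configure(app => app.Run(context =>
                // Reports the protocol the current request was served over.
                context.Response.WriteAsync($"Protocol: {context.Request.Protocol}")))
            .Build()
            .Run();
    }
}
```

Browse to https://localhost:5001 and the response body shows the negotiated protocol. On a platform without ALPN support you should see it fall back to HTTP/1.1.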

All in all, it's a solid release. Go check out the announcement post on ASP.NET Core 2.2 for even more detail!


About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.


What are your thoughts on using ASP.NET Core for intranet apps? We develop about a dozen apps here for intranet use only. Part of me would like to use Docker and Core... but we are a Windows-only environment, with Windows servers.

The skinny of it is that certain operations are fairly intensive so we can only support a certain number of concurrent calls and queue size before the site gets too bogged down (think the first few hours of any Steam sale). Anything we tried on the IIS side to munge with the queue and throttling requests didn't seem to be applicable (likely due to IIS 7 and integrated mode changes) but aside from the Kestrel limits themselves we couldn't figure out how to actually set up a queue. It was either "we can handle 'x' calls or we'll 503 ya!"

For better or worse we ended up implementing Tomasz Pęczek's "experimental" throttler on top of making some other changes to our overall pipeline (more/better async/await handling, caching some of the heavy calls, etc) and that at least got us to a stable spot.

Anyway, looking forward to trying it out and now that we're out of the fire I can spend some time researching the IIS to .NET Core proxy and see if I can figure the queue/throttling stuff out directly instead of using the bandaid.

I think you meant Kestrel. Anyway, I'm looking at these new features from 2.2 right now.
