Back to Basics: 32-bit and 64-bit confusion around x86 and x64 and the .NET Framework and CLR

I'm running 64-bit operating systems on every machine I have that is capable. I'm running Vista 64 on my quad-proc machine with 8 gigs of RAM, and Windows 7 Beta 64-bit on my laptop with 4 gigs.

Writing managed code is a pretty good way to not have to worry about any x86 (32-bit) vs. x64 (64-bit) details. Write managed code, compile, and it'll work everywhere.

The most important takeaway, from MSDN:

"If you have 100% type safe managed code then you really can just copy it to the 64-bit platform and run it successfully under the 64-bit CLR."

If you do that, you're golden. Moving right along.

WARNING: This is obscure and you probably don't care. But, that's this blog.

Back to 32-bit vs. 64-bit .NET Basics

I promote 64-bit a lot because I personally think my 64-bit machine is snappier and more stable. I also have buttloads of RAM which is nice.

The first question developers ask me when I'm pushing 64-bit is "Can I still run Visual Studio the same? Can I make apps that run everywhere?" Yes, totally. I run VS2008 all day on x64 machines writing ASP.NET apps, WPF apps, and Console apps and don't give it a thought. When I'm developing I usually don't sweat any of this. Visual Studio 2008 installs and runs just fine.

If you care about details, when you install .NET on a 64-bit machine the package is bigger because you're getting BOTH 32-bit and 64-bit versions of stuff. Some of the things that are 64-bit specific are:

- Base class libraries (System.*)

- Just-In-Time compiler

- Debugging support

- .NET Framework SDK

For example, I have a C:\Windows\Microsoft.NET\Framework and a C:\Windows\Microsoft.NET\Framework64 folder.

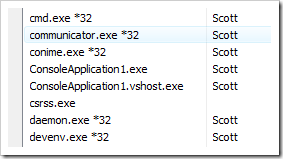

If I File|New Project and make a console app, and run it, this is what I'll see in the Task Manager:

Notice that a bunch of processes have *32 by their names, including devenv.exe? Those are all 32-bit processes. However, my ConsoleApplication1.exe doesn't have that. It's a 64-bit process and it can access a ridiculous amount of memory (if you've got it...like 8TB of user-mode address space on the x64 Windows versions of this era, although I suspect the GC would be freaking out at that point.)

That 64-bit process is also having its code JIT compiled to use not the x86 instruction set we're used to, but the AMD64 instruction set. This is important to note: It doesn't matter if you have an AMD or an Intel processor, if you're 64-bit you are using the AMD64 instruction set. The short story is - Intel lost. For us, it doesn't really matter.

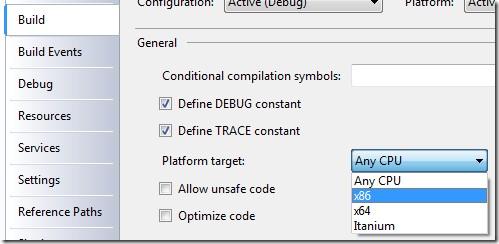

Now, if I right click on the Properties dialog for this Project in Visual Studio I can select the Platform Target from the Build Tab:

By default, the Platform Target is "Any CPU." Remember that our C# or VB compiles to IL, and that IL is basically processor agnostic. It's the JIT that makes the decision at the last minute.

In my case, I am running 64-bit and it was set to Any CPU so it was 64-bit at runtime. But, to repeat (I'll do it a few times, so forgive me) the most important takeaway, from MSDN:

"If you have 100% type safe managed code then you really can just copy it to the 64-bit platform and run it successfully under the 64-bit CLR."

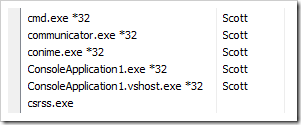

Let me switch it to x86 and look at Task Manager and we see my app is now 32-bit.

Cool, so...

32-bit vs. 64-bit - Why Should I Care?

Every once in a while you'll need to call from managed code into unmanaged and you'll need to give some thought to 64-bit vs. 32-bit. Unmanaged code cares DEEPLY about bitness; it exists in a processor-specific world.

Here's a great bit from an older MSDN article that explains part of it with emphasis mine:

In 64-bit Microsoft Windows, this assumption of parity in data type sizes is invalid. Making all data types 64 bits in length would waste space, because most applications do not need the increased size. However, applications do need pointers to 64-bit data, and they need the ability to have 64-bit data types in selected cases. These considerations led the Windows team to select an abstract data model called LLP64 (or P64). In the LLP64 data model, only pointers expand to 64 bits; all other basic data types (integer and long) remain 32 bits in length.

The .NET CLR for 64-bit platforms uses the same LLP64 abstract data model. In .NET there is an integral data type, not widely known, that is specifically designated to hold 'pointer' information: IntPtr whose size is dependent on the platform (e.g., 32-bit or 64-bit) it is running on. Consider the following code snippet:

[C#]

    public void SizeOfIntPtr()
    {
        Console.WriteLine("SizeOf IntPtr is: {0}", IntPtr.Size);
    }

When run on a 32-bit platform you will get the following output on the console:

SizeOf IntPtr is: 4

On a 64-bit platform you will get the following output on the console:

SizeOf IntPtr is: 8

Short version: If you're using IntPtrs, you care. If you're aware of what IntPtrs are, you likely know this already.

If you checked the property System.IntPtr.Size while running as x86, it'll be 4. It'll be 8 while under x64. To be clear, if you are running x86 even on an x64 machine, System.IntPtr.Size will be 4. This isn't a way to tell the bitness of your OS, just the bitness of your running CLR process.
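Here's a minimal sketch of that check. The class name BitnessReport is made up for illustration. Note that Environment.Is64BitProcess and Environment.Is64BitOperatingSystem only arrived in .NET Framework 4.0, so they are newer than the VS2008-era tooling used in this post; on older frameworks IntPtr.Size is what you have. Also worth noticing: the MSDN quote above is talking about the C "long", which stays 32 bits under LLP64. The C# long is always 64 bits, no matter the platform.

```csharp
using System;

public static class BitnessReport
{
    // Bitness of the *current CLR process*, derived from the pointer size.
    // This says nothing about the bitness of the operating system.
    public static int ProcessBits()
    {
        return IntPtr.Size * 8;
    }

    public static void Main()
    {
        Console.WriteLine("IntPtr.Size = {0}", IntPtr.Size);
        Console.WriteLine("Process bits = {0}", ProcessBits());

        // Assumption: running on .NET Framework 4.0 or later, where these exist.
        Console.WriteLine("Is64BitProcess = {0}", Environment.Is64BitProcess);
        Console.WriteLine("Is64BitOperatingSystem = {0}", Environment.Is64BitOperatingSystem);

        // C# int and long have fixed sizes on every platform (4 and 8 bytes).
        Console.WriteLine("sizeof(int) = {0}, sizeof(long) = {1}", sizeof(int), sizeof(long));
    }
}
```

Compiled as x86 and run on an x64 machine, this should report a 32-bit process on a 64-bit operating system, which is exactly the distinction described above.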

Here's a concrete example. It's a pretty specific edge case, but a decent example. Here's an app that runs fine on x86.

    using System;
    using System.Runtime.InteropServices;

    namespace TestGetSystemInfo
    {
        public class WinApi
        {
            [DllImport("kernel32.dll")]
            public static extern void GetSystemInfo([MarshalAs(UnmanagedType.Struct)] ref SYSTEM_INFO lpSystemInfo);

            [StructLayout(LayoutKind.Sequential)]
            public struct SYSTEM_INFO
            {
                internal _PROCESSOR_INFO_UNION uProcessorInfo;
                public uint dwPageSize;
                public IntPtr lpMinimumApplicationAddress;
                public int lpMaximumApplicationAddress;
                public IntPtr dwActiveProcessorMask;
                public uint dwNumberOfProcessors;
                public uint dwProcessorType;
                public uint dwAllocationGranularity;
                public ushort dwProcessorLevel;
                public ushort dwProcessorRevision;
            }

            [StructLayout(LayoutKind.Explicit)]
            public struct _PROCESSOR_INFO_UNION
            {
                [FieldOffset(0)]
                internal uint dwOemId;
                [FieldOffset(0)]
                internal ushort wProcessorArchitecture;
                [FieldOffset(2)]
                internal ushort wReserved;
            }
        }

        public class Program
        {
            public static void Main(string[] args)
            {
                WinApi.SYSTEM_INFO sysinfo = new WinApi.SYSTEM_INFO();
                WinApi.GetSystemInfo(ref sysinfo);
                Console.WriteLine("dwProcessorType ={0}", sysinfo.dwProcessorType.ToString());
                Console.WriteLine("dwPageSize ={0}", sysinfo.dwPageSize.ToString());
                Console.WriteLine("lpMaximumApplicationAddress ={0}", sysinfo.lpMaximumApplicationAddress.ToString());
            }
        }
    }

See the mistake? It's not totally obvious. Here's what's printed under x86 (by changing the project properties):

dwProcessorType =586

dwPageSize =4096

lpMaximumApplicationAddress =2147418111

The bug is subtle because it works under x86. As said in the P/Invoke Wiki:

The use of int will appear to be fine if you only run the code on a 32-bit machine, but will likely cause your application/component to crash as soon as it gets on a 64-bit machine.

In our case, it doesn't crash, but it sure is wrong. Here's what happens under x64 (or AnyCPU and running it on my x64 machine):

dwProcessorType =8664

dwPageSize =4096

lpMaximumApplicationAddress =-65537

See that last number? Total crap. I've declared lpMaximumApplicationAddress as an int rather than an IntPtr, so under x64 the field only picks up the low 32 bits of what is really a 64-bit pointer. Remember that IntPtr is smart enough to get bigger and point to the right stuff. When I change it from int to IntPtr:

dwProcessorType =8664

dwPageSize =4096

lpMaximumApplicationAddress =8796092956671

That's better. Remember that IntPtr is platform/processor specific.
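If you're wondering where the -65537 came from, here's a small sketch that reproduces it without any P/Invoke at all. It takes the correct x64 value printed above and keeps only the low 32 bits, which is effectively what the int field was seeing on a little-endian x64 machine. The class and method names are made up for illustration.

```csharp
using System;

public static class TruncationDemo
{
    // The value the corrected program printed under x64 (taken from the output above).
    public const long FullAddress = 8796092956671L;

    // Keep only the low 32 bits and reinterpret them as a signed int,
    // mimicking an int field laid over the start of an 8-byte pointer.
    public static int LowBitsAsInt(long value)
    {
        return unchecked((int)value);
    }

    public static void Main()
    {
        Console.WriteLine("0x{0:X16}", FullAddress);          // 0x000007FFFFFEFFFF
        Console.WriteLine(LowBitsAsInt(FullAddress));         // -65537
    }
}
```

The low half, 0xFFFEFFFF, has its top bit set, so as a signed int it comes out negative. Under x86 the real value (0x7FFEFFFF, or 2147418111) fits in a positive int, which is why the bug stays hidden there.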

You can still use P/Invokes like this and call into unmanaged code whether you're on 64-bit or trying to work on both platforms; you just need to be thoughtful about it.
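For reference, here's roughly what the corrected declaration looks like, plus one cheap way to be thoughtful about it: compare Marshal.SizeOf against the size you expect for the current bitness. This is a sketch, not the only way to declare it. The FixedWinApi class name, the ExpectedSize helper, and the 36/48 byte figures (my own arithmetic from the field sizes and default alignment) are assumptions for illustration.

```csharp
using System;
using System.Runtime.InteropServices;

public static class FixedWinApi
{
    [StructLayout(LayoutKind.Explicit)]
    public struct PROCESSOR_INFO_UNION
    {
        [FieldOffset(0)] public uint dwOemId;
        [FieldOffset(0)] public ushort wProcessorArchitecture;
        [FieldOffset(2)] public ushort wReserved;
    }

    [StructLayout(LayoutKind.Sequential)]
    public struct SYSTEM_INFO
    {
        public PROCESSOR_INFO_UNION uProcessorInfo;
        public uint dwPageSize;
        public IntPtr lpMinimumApplicationAddress;
        public IntPtr lpMaximumApplicationAddress; // was int in the buggy version
        public IntPtr dwActiveProcessorMask;
        public uint dwNumberOfProcessors;
        public uint dwProcessorType;
        public uint dwAllocationGranularity;
        public ushort dwProcessorLevel;
        public ushort dwProcessorRevision;
    }

    [DllImport("kernel32.dll")]
    public static extern void GetSystemInfo(ref SYSTEM_INFO lpSystemInfo);

    // Hypothetical helper: the unmanaged size I expect for this layout,
    // 36 bytes with 4-byte pointers and 48 bytes with 8-byte pointers.
    public static int ExpectedSize()
    {
        return IntPtr.Size == 8 ? 48 : 36;
    }

    public static void Main()
    {
        int actual = Marshal.SizeOf(typeof(SYSTEM_INFO));
        Console.WriteLine("Marshal.SizeOf = {0}, expected = {1}", actual, ExpectedSize());

        // kernel32.dll only exists on Windows, so only make the call there.
        if (Environment.OSVersion.Platform == PlatformID.Win32NT)
        {
            SYSTEM_INFO sysinfo = new SYSTEM_INFO();
            GetSystemInfo(ref sysinfo);
            Console.WriteLine("lpMaximumApplicationAddress ={0}", sysinfo.lpMaximumApplicationAddress);
        }
    }
}
```

A size check like this won't catch every mistake (the buggy struct happens to come out the same size on x64 because of padding), but it's a quick sanity test to run under both x86 and x64.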

Gotchas: Other Assemblies

You'll also want to think about the assemblies that your application loads. It's possible that when purchasing a vendor's product in binary form or bringing an Open Source project into your project in binary form, that you might consume an x86 compiled .NET assembly. That'll work fine on x86, but break on x64 when your AnyCPU compiled EXE runs as x64 and tries to load an x86 assembly. You'll get a BadImageFormatException as I did in this post.
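If you want to see this failure for yourself, here's a small sketch that tries to load an assembly and reports what happened along with the bitness of the current process. "ThirdParty.dll" is just a placeholder path and the AssemblyLoadCheck class is made up for illustration. Keep in mind that BadImageFormatException is also thrown for files that aren't valid assemblies at all, so the message is worth reading.

```csharp
using System;
using System.IO;
using System.Reflection;

public static class AssemblyLoadCheck
{
    // Try to load the assembly at 'path' and describe the outcome as a string.
    public static string Describe(string path)
    {
        try
        {
            Assembly loaded = Assembly.LoadFrom(path);
            return "loaded: " + loaded.FullName;
        }
        catch (BadImageFormatException ex)
        {
            // Typical when a 64-bit process loads an x86-only assembly (or vice versa),
            // but also raised for files that are not valid .NET assemblies.
            return string.Format("bad image in a {0}-bit process: {1}", IntPtr.Size * 8, ex.Message);
        }
        catch (FileNotFoundException)
        {
            return "not found: " + path;
        }
    }

    public static void Main(string[] args)
    {
        // Placeholder default; pass a real path as the first argument.
        string path = args.Length > 0 ? args[0] : "ThirdParty.dll";
        Console.WriteLine(Describe(path));
    }
}
```

Run it once as x86 and once as x64 (or AnyCPU on an x64 machine) against the same DLL and compare the results.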

The MSDN article gets it right when it says:

...it is unrealistic to assume that one can just run 32-bit code in a 64-bit environment and have it run without looking at what you are migrating.

As mentioned earlier, if you have 100% type safe managed code then you really can just copy it to the 64-bit platform and run it successfully under the 64-bit CLR.

But more than likely the managed application will be involved with any or all of the following:

- Invoking platform APIs via p/invoke

- Invoking COM objects

- Making use of unsafe code

- Using marshaling as a mechanism for sharing information

- Using serialization as a way of persisting state

Regardless of which of these things your application is doing it is going to be important to do your homework and investigate what your code is doing and what dependencies you have. Once you do this homework you will have to look at your choices to do any or all of the following:

- Migrate the code with no changes.

- Make changes to your code to handle 64-bit pointers correctly.

- Work with other vendors, etc., to provide 64-bit versions of their products.

- Make changes to your logic to handle marshaling and/or serialization.

There may be cases where you make the decision either not to migrate the managed code to 64-bit, in which case you have the option to mark your assemblies so that the Windows loader can do the right thing at start up. Keep in mind that downstream dependencies have a direct impact on the overall application.

I have found that for smaller apps that don't need the benefits of x64 but need to call into some unmanaged COM components that marking the assemblies as x86 is a reasonable mitigation strategy. The same rules apply if you're making a WPF app, a Console App or an ASP.NET app. Very likely you'll not have to deal with this stuff if you're staying managed, but I wanted to put it out there in case you bump into it.
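If you're not sure how a given assembly was marked, here's a minimal sketch that asks the assembly itself via Module.GetPEKind. As I understand the flags, an Any CPU build reports ILOnly, an x86 build adds Required32Bit, and an x64 build reports PE32Plus with the AMD64 machine type. The PlatformMarkReport class name is made up for illustration.

```csharp
using System;
using System.Reflection;

public static class PlatformMarkReport
{
    // Describe how the given assembly's main module was compiled/marked.
    public static string Describe(Assembly assembly)
    {
        PortableExecutableKinds kinds;
        ImageFileMachine machine;
        assembly.ManifestModule.GetPEKind(out kinds, out machine);
        return string.Format("{0}: PE kind = {1}, machine = {2}",
            assembly.GetName().Name, kinds, machine);
    }

    public static void Main()
    {
        // Report on the running program, then on the process it ended up in.
        Console.WriteLine(Describe(Assembly.GetExecutingAssembly()));
        Console.WriteLine("Running as a {0}-bit process", IntPtr.Size * 8);
    }
}
```

Pointing Describe at a vendor assembly that you have already loaded is a quick way to find out whether it was built as x86-only before it surprises you on an x64 box.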

Regardless, always test on x86 and x64.

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.

(I agree that Itanium is dead though, it was just a handy example because it shows up in the drop down box in that screenshot.)

The answer is yes and no. If you've ever struggled with sn.exe on a 64bit box, you know what I mean:

https://connect.microsoft.com/VisualStudio/feedback/ViewFeedback.aspx?FeedbackID=341426

RichB - Sure, but on a 64-bit machine there's two CLRs...sn for 32-bit only works for that CLR. But, TOTALLY agreed, it's confusing.

I actually ran into this problem just earlier this week, and it took me a while to figure out. The problem originated in a third party assembly that loaded fine into studio, worked great in unit testing (since the tests run inside VS*32), but failed horribly while running the application. I've checked the third party assembly with reflector, and it doesn't have any calls to unmanaged code; beats me why they decided to target x86 on it.

I've got a problem in that my solution is large, consisting of some 16 projects that are fairly heavily intertwined. Is there any way to have a project that can call a x86 targeted dll while still being loadable by a 64 bit application, or do I need to convert my entire solution to x86?

The worst part of this is that it will be running on a 32bit server, I'm just having problems debugging it since my development box is 64bit.

Like you, most of our dev machines are 64-bit - but it feels like 64-bit compat is an afterthought, even for developer tools (I'd have thought devs at MS would be the first to switch over).

For now I share the thought Rico Mariani has on whether Visual Studio should be 32 or 64 bit; there aren't that many applications that need that much memory space. It's better to reduce footprint than to require 64bit.

Also, if you do need the extra memory space, there's no way the app can run (stable and with the same datasets) on 32bit. In this case the only thing that makes sense is target just 64bit (what Microsoft did with Exchange 2007)

http://www.lazycoder.com/weblog/2008/09/12/debugging-managed-web-applications-on-x64/

http://msdn.microsoft.com/en-us/vstudio/aa718629.aspx

Kirk

"...Using serialization as a way of persisting state.."

Would you maybe know why this could be a problem?

Thanks,

Vladan

But keep this in mind when thinking about "making the jump", you might have to play around to find just the right secret handshake to get it all working. There must be some configurations that just won't work but so far I've been quite successful.

As an aside, running Windows 2008 server as a workstation OS is working out great so far.

John

Migration considerations

So after all that, what are the considerations with respect to serialization?

* IntPtr is either 4 or 8 bytes in length depending on the platform. If you serialize the information then you are writing platform-specific data to the output. This means that you can and will experience problems if you attempt to share this information.

"If you have 100% type safe managed code then you really can just copy it to the 64-bit platform and run it successfully under the 64-bit CLR."

If you do that, you're golden. Moving right along.

If only it were that true. It all pre-supposes that any .NET assembly added to the mix is also 32/64-bit transparent. I'm currently dealing with this issue with IBM's Informix drivers for .NET. The 64-bit doesn't quite register itself properly and so you end up having to link directly to it thus causing issues for a 32-bit only user.

If you checked the property System.IntPtr.Size while running as x86, it'll be 4. It'll be 8 while under x64. To be clear, if you are running x86 even on an x64 machine, System.IntPtr.Size will be 4. This isn't a way to tell the bitness of your OS, just the bitness of your running CLR process.

I found the term "bitness" to be a little confusing. I think maybe the terms "bit length" or "bit size" are more descriptive of what's causing the compatibility errors in the cases that you mentioned. Otherwise, good stuff as usual.

Terry - Point taken.

Joseph Cooney - Agreed, that's intensely lame. I don't use the built in code coverage, but I'll ask the team if that's fixed going forward.

KirkJ - Exactly what I do if I need E&C. I'll ask if it's turned on in VS10.

Rupurt - Correct. I didn't get into that for fear I'd lose people, but marshaling structs IS a problem.

http://www.microsoft.com/servers/64bit/itanium/overview.mspx

Who knows, that stuff could go to x64 eventually.

How about x64 on windows azure? Could I still upload apps using x32 OS and IDE without encountering problem, having their tools for VST2008?

For example: GetWindowLongPtrW in user32.dll. It doesn't actually exist in x86. Instead, winuser.h #define's it straight over to GetWindowLongW.

(I'm actually debugging this right now in an alpha build of Paint.NET v3.5 :) )

Paint.NET v2.6 actually shipped with IA64 support, about 3 years ago. I had written a little utility that would ping my webstats and produce a barchart showing the distribution of usage with respect to OS, based on which update manifests had been touched (they're just text files, and the file names are decorated w/ locale, OS version, and x86/x64/ia64). The most prevalent platform was, of course, Windows XP x86. That update manifest had several hundred thousand downloads over the course of a month or so.

The IA64 update manifest had been downloaded precisely one time over the entire previous year. I dropped support for it in the very next release.

It was kind of fun though -- I had remote desktop access to an Itanium at one point. Paint.NET worked fine on it, there just wasn't any point.

* IMO.

There has to be a better way than checking the size of IntPtr - new architectures are coming up all the time.