Extending NerdDinner: Exploring Different Database Options

The original NerdDinner Sample was very simple. Two samples, simple, in fact. Perhaps it's the new Northwind, as it's a good way to start working with ASP.NET MVC. However, it's not a perfect sample or an idealized example on how to do many things that users want to do.

Fortunately, there's been lots of cool folks in the community who have "forked" NerdDinner and done interesting stuff with it. Each of these samples is usually focused on a specific scenario, so they won't necessarily be merged with the trunk, but they are educational nonetheless.

Four Five NerdDinners - Each Accessing Data Differently

When NerdDinner was originally done, I did it in Linq To SQL as L2S was fast, easy, had a 1:1 relationship between objects and tables, and frankly, I wasn't really feeling Entity Framework 3.5. Fast forward to .NET 4 and the Entity Framework 4 is pretty nice. The current NerdDinner v2 sample (available in the Trunk on the NerdDinner Codeplex Site, click Source Control) uses Entity Framework 4.

I heard, however, that Chris Sells was interested in exploring a ASP.NET MVC sample that accessed databases via the various ways you'll find in the wild:

- ADO.NET Connected (DataReaders)

- ADO.NET Disconnected (DataSets)

- LINQ to SQL

- LINQ to Entities (Entity Framework)

Chris worked with Nick Muhonen from Useable Concepts and Nick created four samples. Nick has posted a very in-depth article on http://msdn.com/data.

These samples are on VS2010 and ASP.NET MVC 2. Let's compare and contrast. I've also included a sample that Ayende built for to teach me NHibernate last year as the fifth sample. Big thanks to Ayende for all he does for the community, giving of his time, and for keeping Microsoft honest(ish).

ADO.NET Connected (DataReaders)

Scandalous! People still use these? Of course they do. The are wicked fast and many generated DALs (data access layers) have a DataReader at their heart.

Here's the slightly modified IDinnerRepository that was used in this first sample. Note it's not using IQueryable. I understand that it would be ideal to have a single IDinnerRepository interface and have all these samples share it, but these database access techniques differed so greatly that I'm told they gave up as it made the rest of the code smell (more) just to meet that one goal.

public interface IDinnerRepository {

//Data Access Methods

IEnumerable<Dinner> FindAllDinners();

IEnumerable<Dinner> FindByLocation(float latitude, float longitude);

IEnumerable<Dinner> FindUpcomingDinners();

Dinner GetDinner(int id);

void AddDinner(Dinner dinner);

void UpdateDinner(Dinner dinner);

void DeleteDinner(int id);

void AddDinnerRsvp(int dinnerID, RSVP rsvp);

}

You've likely seen code like this before. At least it's not concatenating the SQL manually! It could also be a sproc. The pattern remains.

public IEnumerable<Dinner> FindByLocation(float latitude, float longitude)

{

using (var connection = new SqlConnection(this.connectionString))

{

var commandText =

@"

select d.DinnerID, d.Title, d.EventDate, d.[Description], d.HostedBy,

d.ContactPhone, d.[Address], d.Country, d.Latitude, d.Longitude

from Dinners d

inner join dbo.NearestDinners(@Latitude,@Longitude) nd on

d.DinnerID = nd.DinnerID

where @CurrentDate <= d.EventDate

order by d.DinnerID

select r.RsvpID, r.DinnerID, r.AttendeeName from RSVP r

inner join Dinners d on

d.DinnerID = r.DinnerID

inner join dbo.NearestDinners(@Latitude,@Longitude) nd on

d.DinnerID = nd.DinnerID

where @CurrentDate <= d.EventDate

order by r.DinnerID, r.RsvpID

";

var command = new SqlCommand(commandText, connection);

var parameters = new[]{

new SqlParameter{ParameterName = "Latitude", DbType = DbType.Double, Value = latitude},

new SqlParameter{ParameterName = "Longitude", DbType = DbType.Double, Value = longitude},

new SqlParameter{ParameterName = "CurrentDate", DbType = DbType.Date, Value = DateTime.Now}};

command.Parameters.AddRange(parameters);

connection.Open();

return GetDinners(command);

}

}

Here's a snippet of the private method, GetDinners, that does the tearing apart of the DataReader and turning it into object(s):

private List<Dinner> GetDinners(SqlCommand command)

{

var returnDinners = new List<Dinner>();

using (var reader = command.ExecuteReader())

{

//Project first result set into a collection of Dinner Objects

while (reader.Read())

{

var dinner = new Dinner()

{

DinnerID = (int)reader["DinnerID"],

Title = (string)reader["Title"],

Description = (string)reader["Description"],

Address = (string)reader["Address"],

ContactPhone = (string)reader["ContactPhone"],

Country = (string)reader["Country"],

HostedBy = (string)reader["HostedBy"],

EventDate = (DateTime)reader["EventDate"],

Latitude = (double)reader["Latitude"],

Longitude = (double)reader["Longitude"]

};

returnDinners.Add(dinner);

}

//...

Pretty classic stuff. I generated TONS of for many years using tools like CodeSmith and T4. Generated code is best not seen. Plus, if you write this by hand, a lot can go around and it's almost always because of copy-paste errors. The compiler can't save you if half your code is written in another language tunneled inside a string.

ADO.NET Disconnected (DataSets)

I once called DataSets the spawn of Satan and destroy of all that is holy. I stand by that. ;) They have a way of leaking all over the place, as exemplified by this IDinnerRepository interface. Look away!

public interface IDinnerRepository {

//Data Access Methods

IEnumerable<NerdDinnerDataSet.DinnerRow> FindAllDinners();

IEnumerable<NerdDinnerDataSet.DinnerRow> FindByLocation(float latitude, float longitude);

IEnumerable<NerdDinnerDataSet.DinnerRow> FindUpcomingDinners();

NerdDinnerDataSet.DinnerRow GetDinner(int id);

void AddDinner(NerdDinnerDataSet.DinnerRow dinner);

void DeleteDinner(NerdDinnerDataSet.DinnerRow dinner);

void AddDinnerRsvp(NerdDinnerDataSet.DinnerRow dinner, NerdDinnerDataSet.RSVPRow rsvp);

void DeleteRsvp(NerdDinnerDataSet.RSVPRow rsvp);

// Persistence Method

void Save();

//Object factory methods

NerdDinnerDataSet.DinnerRow CreateDinnerObject();

NerdDinnerDataSet.RSVPRow CreateRsvpObject();

}

With apologies to the original creator of the Regular Expression joke, I will co-opt it for this new one:

So you've got a problem, and you've decided ADO.NET DataSets to solve it. So, you've got two problems... - Me

What does FindByLocation look like now?

public IEnumerable<NerdDinnerDataSet.DinnerRow> FindByLocation(float latitude, float longitude)

{

var now = DateTime.Now;

var dinnerTableAdapter = new DinnerTableAdapter();

var rsvpTableAdapter = new RSVPTableAdapter();

dinnerTableAdapter.FillByLocation(nerdDinnerDataSet.Dinner, latitude, longitude, now);

rsvpTableAdapter.FillByLocation(nerdDinnerDataSet.RSVP, latitude, longitude, now);

return this.nerdDinnerDataSet.Dinner;

}

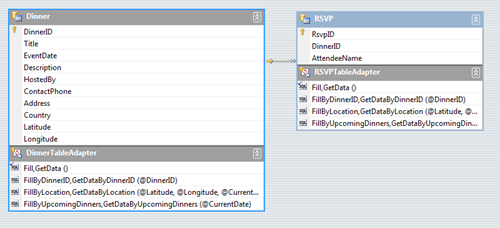

The TableAdapters were created as part of the DataSetDesigner. Here' a screenshot from VS2010:

The Adapters fill the DataTables that consist of Rows. This unfortunately leaks out of our Repository into our Controller as our "Model" is now a DinnerRow. That then leaks (inappropriately) into a ViewPage of type...wait for it...System.Web.Mvc.ViewPage<NerdDinner.Models.NerdDinnerDataSet.DinnerRow>.

If you're going to use DataSets or Rows or DataTables, it's just that much more important that you use a good ViewModel projection. I personally go out of my way to not use DataSets and bump into them only in legacy code. Try to avoid them - I'd prefer the DataReader example over this one.

LINQ to SQL

Remember that LINQ to SQL is a one to one mapping between the physical tables and columns of the database and the objects it creates. Many folks prefer to use it as a DAL (Data Access Layer) that just happens to make objects, then pull the data out of the auto-generated objects into smarter business objects, such that the developer downstream never sees the generated L2S objects. Others just use them all through. For simple samples, I used to use LINQ to SQL straight, and I still do for small (< 5 page) projects, but lately I've been using EF4 as it's just as easy. Anyway, here's the now more sensible modified interface:

public interface IDinnerRepository {

IQueryable<Dinner> FindAllDinners();

IQueryable<Dinner> FindByLocation(float latitude, float longitude);

IQueryable<Dinner> FindUpcomingDinners();

Dinner GetDinner(int id);

void Add(Dinner dinner);

void Delete(Dinner dinner);

void Save();

}

And then the FindByLocation implementation:

public IQueryable<Dinner> FindByLocation(float latitude, float longitude) {

var dinners = from dinner in FindUpcomingDinners()

join i in db.NearestDinners(latitude, longitude)

on dinner.DinnerID equals i.DinnerID

select dinner;

return dinners;

}

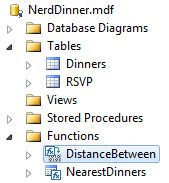

Notice the "NearestDinners" method there. That's a clever thing, I think. The database has a scalar-valued function called DistanceBetween for calculating the distance between two lat-longs (thanks Rob Conery!) and a table value function called NearestDinners. They look like functions from LINQ to SQL's point of view and can be included in a LINQ to SQL query as seen above.

Notice the "NearestDinners" method there. That's a clever thing, I think. The database has a scalar-valued function called DistanceBetween for calculating the distance between two lat-longs (thanks Rob Conery!) and a table value function called NearestDinners. They look like functions from LINQ to SQL's point of view and can be included in a LINQ to SQL query as seen above.

Nice and clean.

LINQ to Entities (Entity Framework)

Entity Framework 4 uses the same interface as above and the same FindByLocation:

public IQueryable<Dinner> FindByLocation(float latitude, float longitude) {

var dinners = from dinner in FindUpcomingDinners()

join i in NearestDinners(latitude, longitude)

on dinner.DinnerID equals i.DinnerID

select dinner;

return dinners;

}

The one small difference, you may notice, is that NearestDinners isn't hanging off the "db" object (the DataContext) as it was with LINQ to SQL. Instead, in order to maintain the same clean query structure, those are helper methods. One is an EdmFunction whose signature maps to that scalar function, and NearestDinner is implemented in code directly.

[EdmFunction("NerdDinnerModel.Store", "DistanceBetween")]

public static double DistanceBetween(double lat1, double long1, double lat2, double long2)

{

throw new NotImplementedException("Only call through LINQ expression");

}

public IQueryable<Dinner> NearestDinners(double latitude, double longitude)

{

return from d in db.Dinners

where DistanceBetween(latitude, longitude, d.Latitude, d.Longitude) < 100

select d;

}

Don't worry about that NotImplementedException, when the method is used in a LINQ to Entities Expression it's automatically mapped to the DistanceBetween function in the database as in the attribute.

I'd like to see better support for TVFs in EF, and I need to dig in to see if there's a better way that outlined here. Entity Framework also supports multiple databases, so you can get an Oracle Provider or a MySQL provider, etc.

So there you have four different database implementations for NerdDinner. Last, but not least, is a sample that Ayende wrote for me to teach me NHibernate, and I would be remiss to not include it in such a comparison.

NHibernate

This sample was written least year with ASP.NET MVC 2 on VS2008 using NHibernate 2.1. I'd love to see an updated version using even newer techniques.

Hibernate has the concept of a "Session" that lives for the life of a request in a Web Application. There's a config file (or a fluent configuration) that has all the properties and connection strings, (similar to EDMX files in EF or DBMLs in L2S) and this all gets setup in the Global.asax. The session is created in the BeginRequest and disposed in the EndRequest.

public MvcApplication()

{

BeginRequest += (sender, args) => CurrentSession = SessionFactory.OpenSession();

EndRequest += (sender, args) => CurrentSession.Dispose();

}

Here's the FindByLocation method. This example isn't 100% fair as this version of NHibernate doesn't support those custom functions I've been talking about. I'm going to see if the latest does and update this post. However, this does give you insight into its flexibility as it allowed inline SQL, set two parameters and returned a list of ints in a very tight single line of code.

public IQueryable<Dinner> FindByLocation(float latitude, float longitude)

{

// note that this isn't as nice as it can be, since linq for nhibernate

// doesn't support custom SQL functions right now

var matching = session.CreateSQLQuery("select DinnerID from dbo.NearestDinners(:latitude, :longitude)")

.SetParameter("longitude", longitude)

.SetParameter("latitude", latitude)

.List<int>();

return from dinner in FindUpcomingDinners()

where matching.Any(x => x == dinner.DinnerID)

select dinner;

}

A better example that lets NHibernate shine would be something more typical like:

public IQueryable<Dinner> FindUpcomingDinners()

{

return from dinner in FindAllDinners()

where dinner.EventDate >= DateTime.Now

orderby dinner.EventDate

select dinner;

}

You'll note that LINQ to NHibernate is nice and comfortable and looks just like you'd expect it to.

Just like EF and LINQ to SQL, there's a mapping file that explains how tables and columns and dataTypes map to real objects, although there isn't a visual editor as far as know. I believe there are fluent ways to express this in code, so if you're an NHibernate user, let me know alternative ways to express this and I'll update the post.

<?xml version="1.0" encoding="utf-8" ?>

<hibernate-mapping xmlns="urn:nhibernate-mapping-2.2" assembly="NerdDinner" namespace="NerdDinner.Models">

<class name="Dinner" table="Dinners" lazy="false">

<id name="DinnerID">

<generator class="identity"/>

</id>

<property name="Title"/>

<property name="EventDate"/>

<property name="Description"/>

<property name="HostedBy"/>

<property name="ContactPhone"/>

<property name="Address"/>

<property name="Country"/>

<property name="Latitude"/>

<property name="Longitude"/>

<bag name="RSVPs" cascade="all-delete-orphan" inverse="true">

<key column="DinnerID"/>

<one-to-many class="RSVP"/>

</bag>

</class>

<class name="RSVP" table="RSVP" lazy="false">

<id name="RsvpID">

<generator class="identity"/>

</id>

<property name="AttendeeName"/>

<many-to-one name="Dinner"

column="DinnerID"/>

</class>

</hibernate-mapping>

This mapping file is actually marked as an Embedded Resource, and is accessed at runtime by the NHibernate runtime. You just need to make it, and the magic happens for you. NHibernate's claim to fame is support for lots of different databases like SQL Server, Oracle, MySQL and more. There's also lots of supporting projects and libraries that orbit NHibernate to give you additional control, or different ways to express your intent.

Conclusion

There's lots of choices for Database Access on .NET. You'll run into DataReaders in older or highly tuned code, but there's no reason it can't be hidden in a Repository and still be pleasant to use. LINQ to SQL is nice, lightweight and fast and has dozens of bug fixes in .NET 4, but Entity Framework is the way they are heading going forward. Plus, Entity Framework 4 is *way* better than EF 3.5, so I'm using it for any "larger than small" projects I'm doing and I'm not having much trouble. NHibernate is very mature, actively developed and has a great community around it and it's not going anywhere.

In my opinion, if you're doing database access with .NET you should be using Entity Framework 4 or NHibernate.

Four Database-styles Sample

NHibernate Sample

Related Links

- 10 How To Videos on Entity Framework from Julie Lerman

- Summer of NHibernate Screencast Series

- Whitepaper: Data Access Practices Using Microsoft .Net: A Nerdly Comparison

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.

About Newsletter

Using that, code refactoring will not break your xml files, because there are no xml files.

http://chriscavanagh.wordpress.com/2010/05/02/linq-query-translator/

You can write LINQ queries against your business objects and let it translate them into data object queries (results come back as business objects). Currently it relies on mapping attributes in your business model.

So far I'm still preferring L2S over EF (feels lighter... EF seems like it wants to do everything) :] ). What's the longer-term plan for L2S?

My application code looks like this:

using (var connection = CreateDataConnection())

using (var command = Sql.SelectOrders.CreateCommand(connection))

{

command.CustomerID = customerID; // strongly-typed parameter

using (var reader = command.ExecuteReader())

while (reader.Read())

{

// strongly-typed columns

int orderID = reader.OrderID;

decimal totalPrice = reader.TotalPrice;

...

}

}

Tommy Carlier -- I think I get the sense of your code, but the vars are definitely hiding something here. I assume that Sql.SelectOrders.CreateCommand(connection)) creates a strongly type SelectOrdersCommand and that this class overrides ExecuteReader so that var reader = command.ExecuteReader() returns a SelectOrdersReader object. The vars here make the sample much harder to follow.

You can do the same thing with EF, I think it is also the best way to go in services or pages calls with EF. Just create a EF context per call and use it in every repository.

You have that here:

http://microsoftnlayerapp.codeplex.com/

Brilliant blog and podcasts, congratulations.

- http://blog.vascooliveira.com -

For bonus points I'd be interested in seeing this expanded to also include SubSonic active record. And for kicks a MongoDB implementation (though that would be throwing some oranges in).

It's very easy with LINQ to convet a DataSet row to a POCO, and even walk relationships to return a rich POCO and not a flat table lookalike. The DataSet code should look just like the DataSet code, minus all the magic strings.

The DataSet has the designer tool, which makes it easy to isolate all database access and even interactively test methods and check results. Speaking of testing, DataSet.LoadXML makes mocks for unit testing very easy, and allow you to separate the concerns of loading the DataSet and processing the DataSet. And when you need the speed and lower memory footprint of the DataReader, the TableAdapter is a partial class - just add a method that hits the underlying DataReader and does the conversion to POCO with no middle man.

I'm not saying DataSets are the answer all the time, or even a majority of the time - it just depends on what you are working on and with - but they are far from evil!

That's my whole delima with the repository pattern. One big draw for it is that it seems like you can make yout dal pluggable - switch to azure by writing a new iwhateverrepository and hooking it up with your ioc container. Yet in practice, each technology has specific demands that make it difficult or impossible to do that without changing the interface or consuming code. So, if your consuming code is dependent on the implementation, and no other implementation is possible, then the interface is just an extra layer of misdirection.

From Scott:

The Adapters fill the DataTables that consist of Rows. This unfortunately leaks out of our Repository into our Controller as our "Model" is now a DinnerRow. That then leaks (inappropriately) into a ViewPage of type...wait for it...System.Web.Mvc.ViewPage<NerdDinner.Models.NerdDinnerDataSet.DinnerRow>.

Totally agree, and I will take this statement one step forward: all data access methods in the comparison, except DataReader and maybe NHibernate, leak a bit. Even though data objects in Linq To SQL and EF have nice clean class names (Unlike the not so beautiful inner class NerdDinnerDataSet.DinnerRow) I wouldn't call them completely clean. These ORM classes, like the DataSet's DinnerRow class, are automatically generated with loads of api specific inserts for "Clean and Dirty" dirty states, and lots of pretty decorations to allow specific binding to the data access method.

For example Linq To SQL:

[TableAttribute(Name = "dbo.Dinners")]

public partial class Dinner : INotifyPropertyChanging, INotifyPropertyChanged

{

public Dinner(){…}

[ColumnAttribute(

Storage = "_DinnerID",

AutoSync = AutoSync.OnInsert,

DbType = "Int NOT NULL IDENTITY",

IsPrimaryKey = true,

IsDbGenerated = true)]

public int DinnerID{get{...}set{...}}

[ColumnAttribute(Storage = "_Title", DbType = "NVarChar(50) NOT NULL", CanBeNull = false)]

public string Title{get{...}set{...}}

...

And for EF:

[EdmEntityTypeAttribute(NamespaceName = "NerdDinnerModel", Name = "Dinner")]

public partial class Dinner : EntityObject

{

public Int32 DinnerID { get{...}set{...}}

...

[EdmRelationshipNavigationPropertyAttribute("NerdDinnerModel",

"FK_RSVP_Dinners", "RSVP")]

public EntityCollection<RSVP> RSVPs { get{...}set{...}}

}

Which brings me to this readers comment:

It's very easy with LINQ to convert a DataSet row to a POCO, and even walk relationships to return a rich POCO and not a flat table lookalike. The DataSet code should look just like the DataSet code, minus all the magic strings.

Very true indeed, and if this application- being Nerd Dinner - was targeting a large enterprise schema I would do that for all of the compared data access methods. To take that a step further, I would also separate out these wrapper types and the Dinner Repository code into another dll completely. Working in this manner gives the true layered flexibility intended in NTier design. And heck, maybe even get rid of all those crazy ad-hoc queries to secure the database. Props to the commenter on this one- if Nerd Dinner was a bit larger in scope.

However, Nerd Dinner is a small App with an even smaller schema (2 tables, yikes!), whose overall functionality mimicks what a website offering might be for a small company, or quick doit yourselfer. If this was coming a small company or some independent restless "web dudette/dude", my experience has been that some sort of platform decision has been alreay made already (via extensive reserach and soothsaying). This platform - be it DataSets, Linq To SQL, and EF - is usually directly integrated into the applications logic without abstraction to enhance productivity at the cost of bleeding. With that in mind EF still seems to be the best for applications of this scope because:

* It Has Strong Support inth the Visual Studio IDE- requiring very litle hand coded or wizard intensive configuration.

* It supports IQueryable<T> nicely, allowing the developer to define the queries to the db in only one place.

* Its strongly typed, allowing intellisense junkies like myself to be productive.

* It has nice built in POCO support, if you want to truly stop the "leaking":).

* It is apparently the "Chosen One" as far as Microsoft is concerned- and we are already seeing the fruits of this decision with strong ODATA support. Shameless plug for Chris Sells proxied by me: for more info on ODATA and EF check out this great article on the topic at here.

Just my $.02 and again, great to see that nothing quite stirs up the pot like a healthy data access comparison.

Since ADO.NET Connected (DataReaders) is probably the fastest (ignoring developer performance) I'd like to point out that your dataReader example can be made more efficient. When you write

while (reader.Read())

{

var dinner = new Dinner()

{

DinnerID = (int)reader["DinnerID"],

Title = (string)reader["Title"],

// etc

}

}

aren't you searching for the right column using text comparisons FOR EACH ROW? Imagine you have a query retrieving ten fields for 1000 rows, doesn't your code go a 1000 (!) times "Is DinnerID the name of the first column? If not is it the second? If not, etc?" and the same for every other field name?

Why not do it once:

int ciDinnerID = reader.GetOrdinal("DinnerID");

int ciTitle = reader.GetOrdinal("Title");

// etc

while (reader.Read())

{

var dinner = new Dinner()

{

DinnerID = reader.GetInt32(ciDinnerID), // no unboxing

Title = reader.GetString(ciTitle),

// etc

}

}

Next to minimizing the number of string comparisons and avoiding unboxing, you can also use stored procedures to speed up your application.

Why are you calling the DataReaders "connected"? --> ADO.NET Connected (DataReaders)

Aren't DataReaders only connected for the time we are looping through the result and then they are closed?

They are not like a cursor on the server that you can use to browse a command result (what you do in Oracle Forms or others)

This "Connected" suggests: Hey we have a connection to the DB server and can go back and forth through the result list

PS: Marc Schluper is right about performance on DataReaders :-)

I used to use typed DataSets earlier (2003) then started using normal DataTables then later DTOs populated by ''tearing apart of the DataReader and turning it into object(s):''.

Anyways, when you create DataTables/DataSets .NET runtime uses DataReaders internally to populate them so writing the 'while(dr.Read())' is ok to populate DTOs/Entities. In fact due to the fact that DTOs are POCOs and lightweight gives them a huge plus over DataSets (yes, its the spawn of satan :) ).

DTOs earlier lacked the DataTable.Select which was quite useful, now with Linq that problem has gone away.

I had made a tool back in 2006 to do the manual work of converting DataReaders to DTOs/Objects,

Even I used to use code generation(read codesmith), but having the schema in XML that can be hand edited is flexible (for me)

Tried NHibernate, but my avg developers found it hard to use.

Recently put this tool on XmlDataMapper.CodePlex.com, of course it may not be as suave as the other giants out there, but its serves the simple purpose of converting DataReaders/DataTables/DataRows into DTOs/POCOs easily

Example usage for Order DTO:

IDataReader reader = command.ExecuteReader(CommandBehviour.CloseConnection);

IList<Order> list = DTOConverter<Order>.Convert(reader);

One thing that datasets do well that ORMs seem to have trouble with is composite keys. Are any of the ORM's better at this than NHibernate? Most ORM help I've read on the topic says composite keys are not recommended, but some db schemas will need them in order to enforce data integrity properly. How are people dealing with this? Trying to enforce integrity rules in the model only I guess?

How are people dealing with this? Trying to enforce integrity rules in the model only I guess?

You can always make a composite unique key in the db and live normally without composite primary keys.

I've had great success utilizing Castle Project Active Record which sits on top of NHibernate and is very easy to use.

http://www.castleproject.org/activerecord/index.html

From there website: "Castle ActiveRecord is built on top of NHibernate, but its attribute-based mapping free the developer of writing XML for database-to-object mapping, which is needed when using NHibernate directly"

- Nakia

You can always make a composite unique key in the db and live normally without composite primary keys.

Paul

Paul, this solution fails to enforce integrity rules that composite keys can enforce. That's my question, is this typically being dealt with by just not enforcing those rules at the db level, and enforcing them elsewhere (or nowhere) instead?

Ed

See a psychiatrist and learn to code. Stop telling me that DataSets are the bomb. They are literally A bomb.

By the way data integrity properly is managed at the DATABASE LEVEL.

That's the point I was trying to make, Anonymous. ORM solutions commonly refer to composite keys as "legacy" and eschew them, and I'm trying to figure out if devs know or care about the job that DRI can and should do. Datasets certainly have their drawbacks, but this isn't one of them.

Ed

Whenever I go to do real world work, this is where stuff breaks down in these frameworks and I'd like to see some best practices in regards to this. Makes starting out much easier. Thanks.

I am not familiar with NHibernate - have I missed an install or something.

Regards

Alan

Comments are closed.

Has you seen any performance comparisons between the various strategies outlined above? I'm curious as to the pros/cons of each method. It appears that there might be a trade off between developer productivity and database performance, but I don't know how significant it is.

-Mike