Review: Logitech ConferenceCam CC3000e - A fantastic pan tilt zoom camera and speaker for remote workers

I'm forever looking for tools that can make me a more effective remote worker. I'm still working remotely from Portland, Oregon, for folks in Redmond, Washington.

You might think that a nice standard HD webcam is enough to talk to your remote office, but I still maintain that a truly great webcam for remote work is one that:

- Has a wide field of view (more than 100 degrees)

- Has a 5x to 10x optical zoom to look at whiteboards

- Has motorized pan-tilt-zoom

Two years later I'm still using (and happy with) the Logitech BCC950. I'm so happy with it that I wrote (and still maintain) a cloud server to remotely control the camera's PTZ (pan-tilt-zoom) functions. I wrote all that up earlier on this blog in "Cloud-Controlled Remote Pan Tilt Zoom Camera API for a Logitech BCC950 Camera with Azure and SignalR."

Fast-forward to June of 2014, when Logitech offered to loan me (I'm sending it back this week) one of their new Logitech ConferenceCam CC3000e conferencing systems. Yes, that's a mouthful.

To be clear, the BCC950 is a fantastic value. It's usually under $200 and has motorized PTZ and a remote control (it also works with my software, natch). It doesn't require drivers on Windows (a great plus), it's a REALLY REALLY good speakerphone for Skype or Lync calls, its camera is 1080p, the speakerphone shows up as a standard audio device, and it has a removable "stalk" so you can control how tall the camera is.

BUT. The BCC950's zoom is digital, which sucks when you're trying to see remote whiteboards, and its field of view is just OK.

Now enter the CC3000e, a top-of-the-line system for conference rooms. What do I get for $1000? Is it worth 4x the BCC950? Yes, if you have the grand and you're on video calls all day. It's an AMAZING camera and it's worth it. I don't want to send it back.

Logitech ConferenceCam CC3000e - What do you get?

The unboxing is epic, not unlike an iPhone, except with more cardboard. It's a little overwhelming as there are a lot of parts, but it's all numbered and very easy to set up. My first impression was "why do I need all these pieces?" as I'm used to the all-in-one BCC950, but then I remembered that the CC3000e is actually meant for business conference rooms, not random remote workers in a home office like me. Later, though, I appreciated the modularity, as I ended up mounting the camera on top of an extra TV I had while moving the speaker module under my monitor, nearer my desk.

You get the camera, the speaker/audio base, a 'hockey puck' that routes all the cables, and a remote control.

The Good

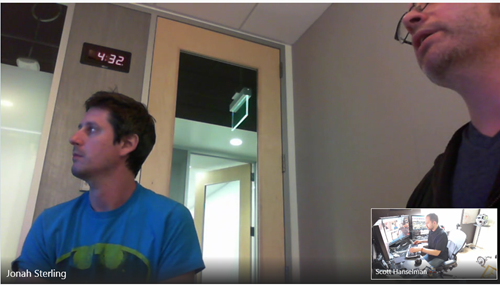

You've seen what a regular webcam looks like. Two heads and some shoulders.

Believe it or not, in my experience it's hard to get a sense of a person from just their disembodied head. Who knew?

I'm regularly Skyping/Lyncing into an open space in Redmond where my co-workers move around freely, use the whiteboard, stand around, and generally enjoy their freedom of motion. If I've got a narrow field of view of 70 degrees or less from a fixed location, I can't get a feel for what's going on. From their perspective, none of them really knows what my space looks like. I can't pace around, use a whiteboard, or interact with them in any "more than just a head" way.

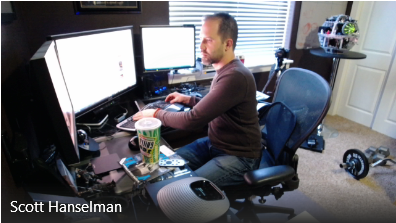

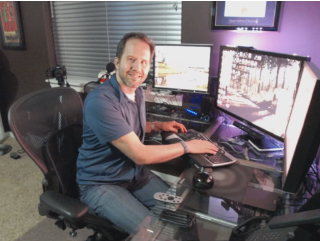

Enter a real PTZ camera with real optics and a wide field of view. You really get a sense of where I am in my office, and that I need to suck it in before taking screenshots.

Now, move the camera around.

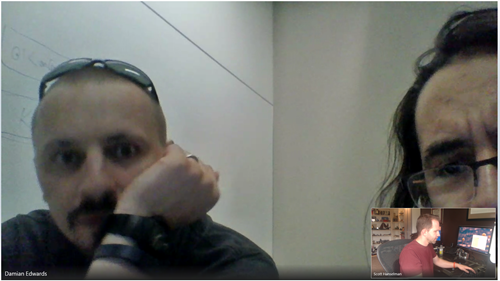

Here's me trying to collaborate with my remote partners over some projects. See how painful that is? EVERY DAY I'm talking to half-heads with tiny cameras.

These calls weren't staged for this blog post, people. FML. These are real meetings, and a real one-on-one with the half a forehead that is my boss.

Now, yes, I admit that you won't ALWAYS want to see my torso when we're talking. Easy: I turn, face the camera, zoom in a smidge, and we've got a great 1:1 normal disembodied-head conversation happening.

But when you really want to connect with someone, back up a bit. Get a sense of their space.

And if you're in a conference room, darn it, mount that sucker on the far wall.

While only my side of these calls used the CC3000e (I'm the dude with the camera), I've used screenshots of actual calls I've had to show you the difference between clear optics with a wide field of view and a laptop's sad little $4 webcam. You can tell who has a nice camera. Let me tell you, this camera is tight.

The CC3000e has a lot of great mounting options that come included with the kit. I was able to get it mounted on top of my TV like a Kinect, using the included brackets, in about 5 minutes. You can also mount it flat against the wall, which could be great for tight conference room situations.

The camera is impressive, and politely looks away when it's not in use. A nice privacy touch, I thought.

The optical zoom is fantastic. You'll have no trouble zooming in on people or whiteboards.

Here's zoomed out.

Here's zoomed in. No joke, I just zoomed in with the remote and made a face. It's crazy and it's clear.

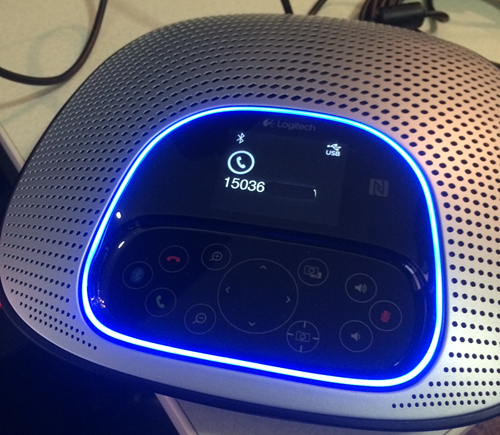

The speakerphone base is impressively sturdy, with an awesome Tron light ring that is blue when you're on a call and red when you're on hold (or when you're the MCP).

The screen will also show you the name/number of the current caller.

As a nice bonus, you can pair the base with your cell phone over Bluetooth, and now you've got a great speaker and speakerphone. This meant I could take all my calls (mobile, Lync, Skype) on one speakerphone.

The Weird

There have been a few weird quirks with the CC3000e. For example, right this moment the camera's "on" indicator light is flashing blue, but no app is using the camera. It's as if it got stuck after a call. Another is that the microphone quality (this is subjective, of course) for people hearing me on the remote side doesn't seem as deep and resonant as with the BCC950. Now, no conference phone will ever sound as nice as a headset, but the audio to my ear and my co-workers' ears is just off compared to what we're used to. Also, a few times the remote control just stopped working for a while.

On the software side, I've personally found the Logitech Lync "Far End Control" PTZ software to be unreliable. Some days it works great all day; other days it won't run. I suspect it's having an issue communicating with the hardware. Given the weird light thing combined with this PTZ issue, it's possible that I have a bad/sick review model. Now, here's the Far End Control Application's PDF Guide. It's supposed to "just work," of course. You and the person you're calling each run a piece of software that creates a tunnel over Lync and lets each of you control the other's PTZ motor. This differs from my PTZ system: theirs uses Lync itself to transmit PTZ instructions, while mine requires a cloud service.

Fortunately, my PTZ system *also* works with the ConferenceCam CC3000e. I just tested it, and you'll simply need to change the device name in your *.config file.

```xml
<appSettings>
  <!-- <add key="DeviceName" value="BCC950 ConferenceCam"/> -->
  <add key="DeviceName" value="ConferenceCam CC3000e Camera"/>
</appSettings>
```

To be clear, the folks at Logitech have told me that they can update the firmware to adjust and improve all aspects of the system. In fact, I flashed it with firmware from May 12th before I started using it. So it's very possible that these are just first-version quirks that will get worked out with a software update. None of these issues have prevented me from using the system. I've also spoken with the developer of the Far End Control software, and they are actively improving it, so I've got high hopes.

This is a truly killer system for a conference room or a remote worker (or both, if I'm lucky and have the budget).

- Absolutely amazing optical zoom

- Top of the line optics

- Excellent wide field of view

- The PTZ camera turns a full 180 degrees

- Programmable "home" location

- Can act as a Bluetooth speaker/speakerphone for your cell phone

- The camera turns away from you when it's off. Nice reminder of your privacy.

The optics alone would make my experience as a remote worker much better. I am boxing it up, and I am going to miss this camera. Aside from a few software quirks, the hardware is top-notch, and I'm going to be saving up for this camera.

You can buy the Logitech CC3000e from Logitech directly or from some shady folks at Amazon.

Related Links

- Working Remotely Considered Dystopian

- Being a Remote Worker Sucks - Long Live the Remote Worker

- Introducing LyncAutoAnswer.com - An open source remote worker's Auto Answer Kiosk with Lync 2010

- Is Daddy on a call? A BusyLight Presence indicator for Lync for my Home Office

- 30 Tips for Successful Communication as a Remote Worker

- Building an Embodied Social Proxy or Crazy Webcam Remote Cart Thing

- Virtual Camaraderie - A Persistent Video "Portal" for the Remote Worker

Sponsor: Thanks to friends at RayGun.io. I use their product and LOVE IT. Get notified of your software’s bugs as they happen! Raygun.io has error tracking solutions for every major programming language and platform - Start a free trial in under a minute!

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.