Don't ever break a URL if you can help it

Back in 2017 I said "URLs are UI" and I stand by it. At the time, however, I was running this 18 year old blog using ASP.NET WebForms and the URL was, ahem, https://www.hanselman.com/blog/URLsAreUI.aspx


The blog post got on Hacker News and folks were not impressed with my PascalCasing but were particularly offended by the .aspx extension shouting "this is the technology this blog is written in!" A rightfully valid complaint, to be clear.

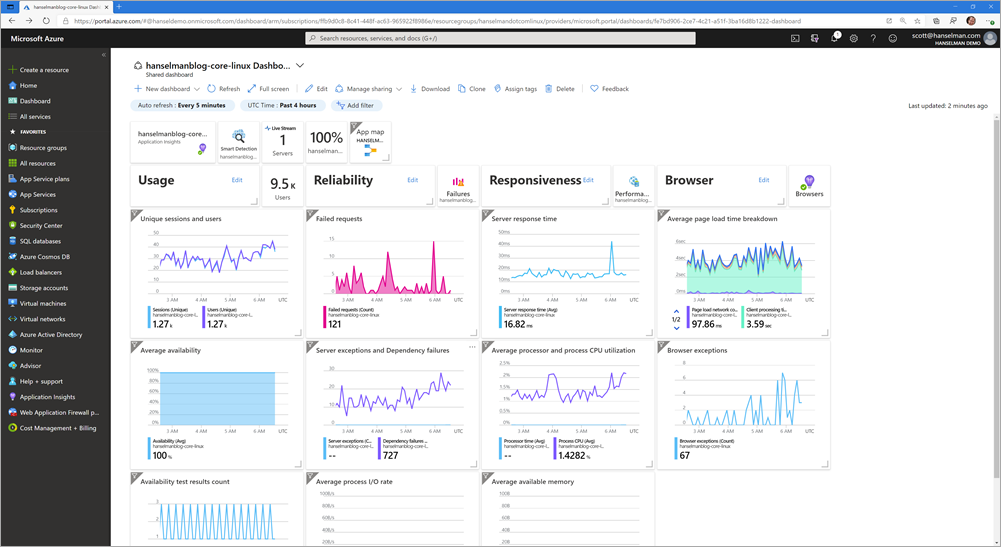

ASP.NET has supported extensionless URLs for nearly a decade but I have been just using and enjoying my blog. I've been slowly moving my three "Hanselman, Inc" (it's not really a company) sites over to Azure, to Linux, and to ASP.NET Core. You can actually scroll to the bottom of this site and see the git commit hash AND CI/CD Build (both private links) that this production instance was built and deployed from.

As tastes change, from angle brackets to curly braces to significant whitespace, they also change in URL styles, from .cgi extensions, to my PascalCased.aspx, to the more 'modern' lowercased kebab-casing of today.
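To make the two styles concrete, here's a minimal sketch that derives both from a post title. The `SlugSketch` class and its method names are made up for illustration, and this is an assumption about how such slugs could be built, not DasBlog's actual code.

```csharp
using System;
using System.Linq;

// Hypothetical helper, for illustration only (not part of DasBlog).
public static class SlugSketch
{
    // Splits a title on spaces and keeps only letters and digits in each word.
    private static string[] Words(string title) =>
        title.Split(' ', StringSplitOptions.RemoveEmptyEntries)
             .Select(w => new string(w.Where(c => char.IsLetterOrDigit(c)).ToArray()))
             .Where(w => w.Length > 0)
             .ToArray();

    // Older style: capitalize the first letter of each word and concatenate.
    public static string ToPascalCased(string title) =>
        string.Concat(Words(title).Select(w => char.ToUpperInvariant(w[0]) + w.Substring(1)));

    // Newer style: lowercase each word and join with hyphens.
    public static string ToKebabCased(string title) =>
        string.Join("-", Words(title).Select(w => w.ToLowerInvariant()));
}
```

Feeding it the title "URLs are UI" yields `URLsAreUI` for the older style and `urls-are-ui` for the newer one.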

But how does one change 6000 URLs without breaking their Google Juice? I have history here. Here's a 17 year old blog post...the URL isn't broken. It's important to never change a URL and if you do, always offer a redirect.

When Mark Downie and I discussed moving the venerable .NET blog engine "DasBlog" over to .NET Core, we decided that no matter what, we'd allow for choice in URL style without breaking URLs. His blog runs DasBlog Core also and applies these same techniques.

We decided on two layers of URL management.

- An optional and configurable XML file in the older IIS Rewrite format that users can update to taste.

  - Why? Users with old blogs like me already have rules in this IIS Rewrite format. Even though I now run on Linux and there's no IIS to be found, the file exists and works. So we use ASP.NET Core's URL Rewriting Middleware to consume these files. It's a wonderful compatibility feature of ASP.NET Core.

- The core/base Endpoints that DasBlog would support on its own. This would include a matrix of every URL format that DasBlog has ever supported in the last 10 years.

Here's that code. There may be terser ways to express this, but this is super clear. With or without extension, with or without year/month/day.

```csharp
app.UseEndpoints(endpoints =>
{
    endpoints.MapHealthChecks("/healthcheck");

    if (dasBlogSettings.SiteConfiguration.EnableTitlePermaLinkUnique)
    {
        // Date-prefixed permalinks, with the legacy .aspx extension...
        endpoints.MapControllerRoute(
            "Original Post Format",
            "~/{year:int}/{month:int}/{day:int}/{posttitle}.aspx",
            new { controller = "BlogPost", action = "Post", posttitle = "" });

        // ...and without it.
        endpoints.MapControllerRoute(
            "New Post Format",
            "~/{year:int}/{month:int}/{day:int}/{posttitle}",
            new { controller = "BlogPost", action = "Post", posttitle = "" });
    }
    else
    {
        // Title-only permalinks, with the legacy .aspx extension...
        endpoints.MapControllerRoute(
            "Original Post Format",
            "~/{posttitle}.aspx",
            new { controller = "BlogPost", action = "Post", posttitle = "" });

        // ...and without it.
        endpoints.MapControllerRoute(
            "New Post Format",
            "~/{posttitle}",
            new { controller = "BlogPost", action = "Post", posttitle = "" });
    }

    // Conventional fallback route for everything else.
    endpoints.MapControllerRoute(
        name: "default", pattern: "~/{controller=Home}/{action=Index}/{id?}");
});
```
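If it helps to see that matrix collapsed into one place, here's a rough sketch using a single regular expression that accepts all four shapes and pulls out the title segment. In the real app, routing does this work; `PostUrlSketch` and the exact pattern are my own assumptions for illustration, and the regex only approximates the `:int` route constraints with digits.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical illustration of the four URL shapes; not DasBlog code.
public static class PostUrlSketch
{
    // Optional /year/month/day/ prefix, then the title, then an optional .aspx suffix.
    private static readonly Regex PostPath = new Regex(
        @"^/(?:(?<year>\d+)/(?<month>\d+)/(?<day>\d+)/)?(?<title>[^/]+?)(?:\.aspx)?$",
        RegexOptions.IgnoreCase | RegexOptions.CultureInvariant);

    // Returns true and the title segment when the path looks like a post URL.
    public static bool TryGetTitle(string path, out string title)
    {
        var match = PostPath.Match(path);
        title = match.Success ? match.Groups["title"].Value : string.Empty;
        return match.Success;
    }
}
```

All four of `/2020/03/05/urls-are-ui.aspx`, `/2020/03/05/urls-are-ui`, `/urls-are-ui.aspx`, and `/urls-are-ui` come back with the same title, which is the whole point of the matrix.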

If someone shows up at any of the half dozen URL formats I've had over the years they'll get a 301 permanent redirect to the canonical one.

UPDATE: Great tip from Tune in the comments: "After moving several websites to new navigation and url structures, I've learned to start redirecting with harmless temporary redirects (http 302) and replace it with a permanent redirect (http 301), only after the dust has settled…"
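One way to act on that tip is to keep the status code behind a single switch, so going from temporary to permanent is a one-line change. This is only a sketch: `RedirectSketch`, `Plan`, and the `dustHasSettled` flag are hypothetical names, not anything in DasBlog.

```csharp
using System;

// Hypothetical helper showing a 302-first, 301-later redirect policy.
public static class RedirectSketch
{
    public const int Found = 302;            // temporary redirect
    public const int MovedPermanently = 301; // permanent redirect

    // Flip dustHasSettled to true once the new URL structure has proven stable.
    public static int StatusFor(bool dustHasSettled) =>
        dustHasSettled ? MovedPermanently : Found;

    // Returns null when the request is already canonical,
    // otherwise the status code and the Location to send.
    public static (int Status, string Location)? Plan(
        string requestPath, string canonicalPath, bool dustHasSettled)
    {
        if (string.Equals(requestPath, canonicalPath, StringComparison.Ordinal))
            return null;

        return (StatusFor(dustHasSettled), canonicalPath);
    }
}
```

While the flag is false, a request for `/blog/URLsAreUI.aspx` gets a 302 to `/blog/urls-are-ui`. Once you trust the new structure, the same request gets a 301.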

The old IIS format is added to our site with just two lines:

```csharp
var options = new RewriteOptions().AddIISUrlRewrite(env.ContentRootFileProvider, IISUrlRewriteConfigPath);
app.UseRewriter(options);
```

And offers rewrites to everything that used to be. Even thousands of old RSS readers (yes, truly) that continually hit my blog will get the right new clean URLs with rules like this:

```xml
<rule name="Redirect RSS syndication" stopProcessing="true">
  <match url="^SyndicationService.asmx/GetRss" />
  <action type="Redirect" url="/blog/feed/rss" redirectType="Permanent" />
</rule>
```

Or even when posts used GUIDs (not sure what we were thinking, Clemens!):

```xml
<rule name="Very old permalink style (guid)" stopProcessing="true">
  <match url="^PermaLink.aspx" />
  <conditions>
    <add input="{QUERY_STRING}" pattern="&amp;?guid=(.*)" />
  </conditions>
  <action type="Redirect" url="/blog/post/{C:1}" redirectType="Permanent" />
</rule>
```
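If the `{C:1}` back-reference looks cryptic, here's roughly what that rule does, re-expressed with plain .NET regular expressions. This is a sketch for illustration only: the rewrite middleware evaluates the rule itself, and `GuidPermalinkSketch` is a made-up name. I'm assuming case-insensitive matching here.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical re-creation of the GUID permalink rule; illustration only.
public static class GuidPermalinkSketch
{
    public static bool TryRewrite(string path, string queryString, out string target)
    {
        target = string.Empty;

        // <match url="^PermaLink.aspx" />
        if (!Regex.IsMatch(path, @"^PermaLink.aspx", RegexOptions.IgnoreCase))
            return false;

        // <add input="{QUERY_STRING}" pattern="&?guid=(.*)" />
        var condition = Regex.Match(queryString, @"&?guid=(.*)", RegexOptions.IgnoreCase);
        if (!condition.Success)
            return false;

        // {C:1} is the first capture group of the matched condition.
        target = "/blog/post/" + condition.Groups[1].Value;
        return true;
    }
}
```

Note that `(.*)` is greedy, so anything that follows the GUID in the query string rides along into the new URL.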

We also always try to express rel="canonical" to tell search engines which link is the official - canonical - one. We've also autogenerated Google Sitemaps for over 14 years.
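For anyone who hasn't built these by hand, here's a small sketch of both pieces: the `<link rel="canonical">` tag and a bare-bones sitemap. The `CanonicalSketch` class is hypothetical and is not how DasBlog generates either one. The sitemap namespace is the one from the sitemaps.org protocol.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Net;
using System.Xml.Linq;

// Hypothetical helper for illustration; not DasBlog's implementation.
public static class CanonicalSketch
{
    // Builds the tag that tells search engines which URL is the official one.
    public static string LinkTag(string canonicalUrl) =>
        $"<link rel=\"canonical\" href=\"{WebUtility.HtmlEncode(canonicalUrl)}\" />";

    // Builds a minimal sitemap containing one <url><loc> entry per canonical URL.
    public static string Sitemap(IEnumerable<string> canonicalUrls)
    {
        XNamespace ns = "http://www.sitemaps.org/schemas/sitemap/0.9";
        var urlset = new XElement(ns + "urlset",
            canonicalUrls.Select(u => new XElement(ns + "url", new XElement(ns + "loc", u))));
        return urlset.ToString(SaveOptions.DisableFormatting);
    }
}
```

Only canonical URLs should go into the sitemap, so the sitemap and the `rel="canonical"` tags agree.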

What's the point here? I care about my URLs. I want them to stick around. Every 404 is someone having a bad experience and some thoughtful rules at multiple layers with the flexibility to easily add others will ensure that even 10-20 year old references to my blog will still resolve!

Oh, and that article that they didn't like over on Hacker News? It's automatically now https://www.hanselman.com/blog/urls-are-ui so that's nice, too!

Here are some articles I've already written on the subject of moving this blog to the cloud:

- Real World Cloud Migrations: Moving a 17 year old series of sites from bare metal to Azure

- Dealing with Application Base URLs and Razor link generation while hosting ASP.NET web apps behind Reverse Proxies

- Updating an ASP.NET Core 2.2 Web Site to .NET Core 3.1 LTS

- Making a cleaner and more intentional azure-pipelines.yml for an ASP.NET Core Web App

- Moving an ASP.NET Core from Azure App Service on Windows to Linux by testing in WSL and Docker first

If you find any issues with this blog like

- Broken links and 404s where you wouldn't expect them

- Broken images, zero byte images, giant images

- General oddness

Please file them here https://github.com/shanselman/hanselman.com-bugs and let me know!


About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.

About Newsletter

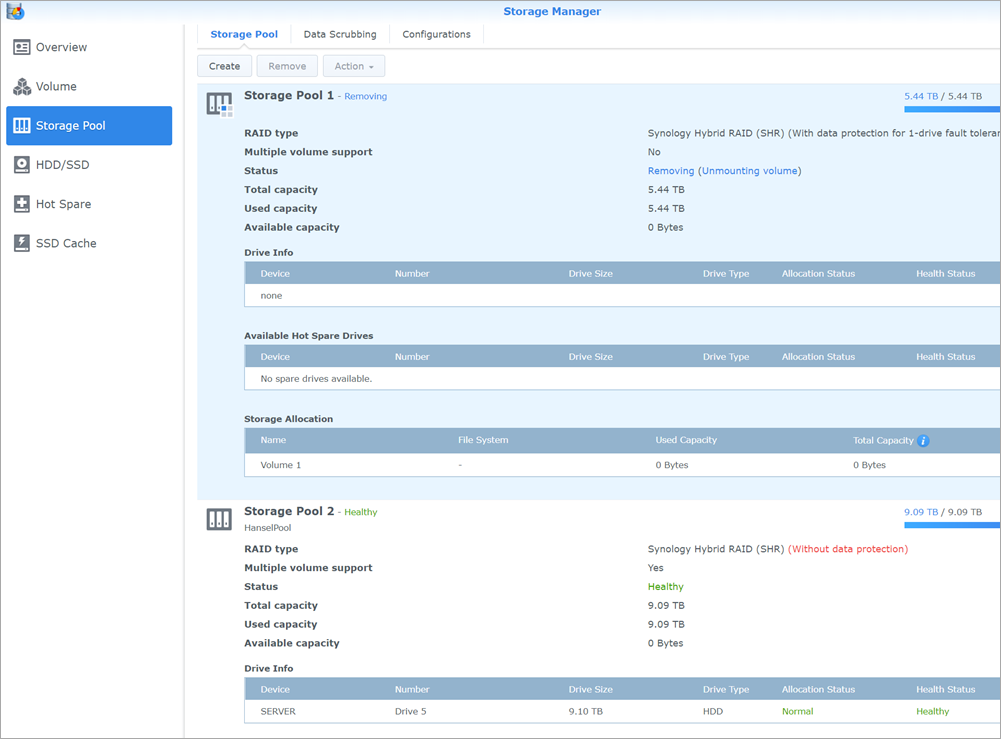

I recently moved my home NAS over from a Synology DS1511 that I got in May of 2011 to a

I recently moved my home NAS over from a Synology DS1511 that I got in May of 2011 to a

![image[3] image[3]](https://images.hanselman.com/blog/Windows-Live-Writer/52a307008943_A4F2/image%5B3%5D_3.png)