Installing PowerShell Core on a Raspberry Pi (powered by .NET Core)

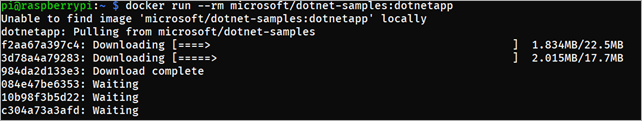

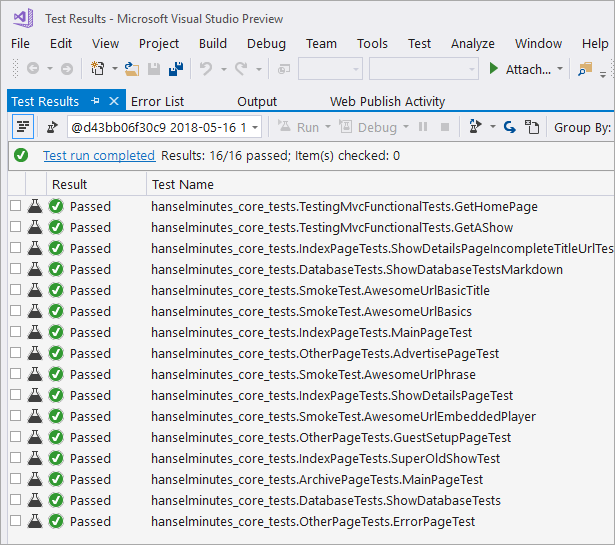

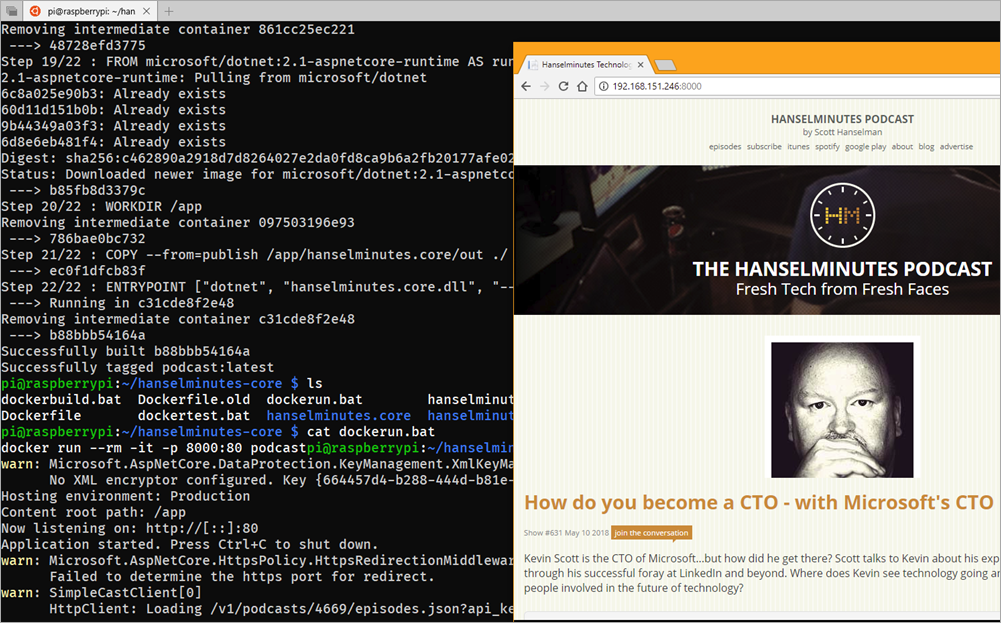

Earlier this week I set up .NET Core and Docker on a Raspberry Pi and found that I could run my podcast website quite easily on a Pi. Check that post out as there's a lot going on. I can test within a Linux Container and output the test results to the host and then open them in VS. I also explored a reasonably complex Dockerfile that is both multiarch and multistage. I can reliably build and test my website either inside a container or on the bare metal of Windows or Linux. Very fun.

As primarily a Windows developer, I have lots of batch/cmd files like "test.bat" or "dockerbuild.bat". They start as little throwaway bits of automation, but as the project grows they inevitably become more complex.

I'm not interested in "selling" anyone PowerShell. If you like bash, use bash; it's lovely, as are shell scripts. PowerShell's pipeline is object-oriented: it moves lists of real objects, rather than text, as standard output. The two are different and, most importantly, they can live together. Just like you might call Python scripts from bash, you can call PowerShell scripts from bash, or vice versa. Another tool in our toolkits.

PS /home/pi> Get-Process | Where-Object WorkingSet -gt 10MB

NPM(K)  PM(M)  WS(M)  CPU(s)    Id    SI   ProcessName
------  -----  -----  ------    --    --   -----------
0       0.00   10.92  890.87    917   917  docker-containe
0       0.00   35.64  1,140.29  449   449  dockerd
0       0.00   10.36  0.88      1272  037  light-locker
0       0.00   20.46  608.04    1245  037  lxpanel
0       0.00   69.06  32.30     3777  749  pwsh
0       0.00   31.60  107.74    647   647  Xorg
0       0.00   10.60  0.77      1279  037  zenity
0       0.00   10.52  0.77      1280  037  zenity

Bash and shell scripts are SUPER powerful. It's a whole world. But it's text-based (or JSON for some newer tools), so you're often thinking more about text.

pi@raspberrypidotnet:~ $ ps aux | sort -rn -k 5,6 | head -n6
root 449 0.5 3.8 956240 36500 ? Ssl May17 19:00 /usr/bin/dockerd -H fd://
root 917 0.4 1.1 910492 11180 ? Ssl May17 14:51 docker-containerd --config /var/run/docker/containerd/containerd.toml
root 647 0.0 3.4 155608 32360 tty7 Ssl+ May17 1:47 /usr/lib/xorg/Xorg :0 -seat seat0 -auth /var/run/lightdm/root/:0 -nolisten tcp vt7 -novtswitch
pi 1245 0.2 2.2 153132 20952 ? Sl May17 10:08 lxpanel --profile LXDE-pi
pi 1272 0.0 1.1 145928 10612 ? Sl May17 0:00 light-locker
pi 1279 0.0 1.1 145020 10856 ? Sl May17 0:00 zenity --warning --no-wrap --text
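For a closer text-based counterpart to the Get-Process filter above, here's a small bash sketch. It assumes ps's rss column (resident set size, in kilobytes) is a fair stand-in for PowerShell's WorkingSet, and over_rss_mb is a hypothetical helper name. Notice that awk filters on "column 1", not on a named property, which is exactly the text-versus-objects difference.

```shell
# Hypothetical helper: keep the header row plus every process whose RSS
# exceeds the given number of megabytes. Assumes input shaped like
# "ps -eo rss,pid,comm" output, with RSS in kilobytes in column 1.
over_rss_mb() {
  local limit_mb="$1"
  awk -v limit_kb="$((limit_mb * 1024))" 'NR == 1 || $1 > limit_kb'
}

# Usage (roughly "Get-Process | Where-Object WorkingSet -gt 10MB"):
#   ps -eo rss,pid,comm | over_rss_mb 10
```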

You can take it as far as you like. For some it's intuitive power; for others, it's baroque.

pi@raspberrypidotnet:~ $ ps -eo size,pid,user,command --sort -size | awk '{ hr=$1/1024 ; printf("%13.2f Mb ",hr) } { for ( x=4 ; x<=NF ; x++ ) { printf("%s ",$x) } print "" }'
0.00 Mb COMMAND
161.14 Mb /usr/bin/dockerd -H fd://
124.20 Mb docker-containerd --config /var/run/docker/containerd/containerd.toml
78.23 Mb lxpanel --profile LXDE-pi
66.31 Mb /usr/lib/xorg/Xorg :0 -seat seat0 -auth /var/run/lightdm/root/:0 -nolisten tcp vt7 -novtswitch
61.66 Mb light-locker
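That one-liner packs a lot into one line. Here's the same idea spread out into a function, as a sketch: it assumes ps reports SIZE in kilobytes (which is why the original divides by 1024), it skips the header row that otherwise prints as "0.00 Mb COMMAND", and mem_report is a hypothetical name.

```shell
# Hypothetical helper: readable version of the awk one-liner above.
# Expects "ps -eo size,pid,user,command" output on stdin and assumes the
# SIZE column (field 1) is in kilobytes.
mem_report() {
  awk 'NR > 1 {
    mb = $1 / 1024              # kilobytes -> megabytes
    cmd = $4                    # command starts at field 4 ...
    for (i = 5; i <= NF; i++)   # ... and may span several fields
      cmd = cmd " " $i
    printf("%13.2f Mb %s\n", mb, cmd)
  }'
}

# Usage:
#   ps -eo size,pid,user,command --sort -size | mem_report | head -n 5
```

Like the original, awk rejoins the command's fields with single spaces, so any runs of spaces inside a command line are collapsed.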

Point is, there's choice. Here's a nice article about PowerShell from the perspective of a Linux user. Can I install PowerShell on my Raspberry Pi (or any Linux machine) and use the same scripts in both places? YES.

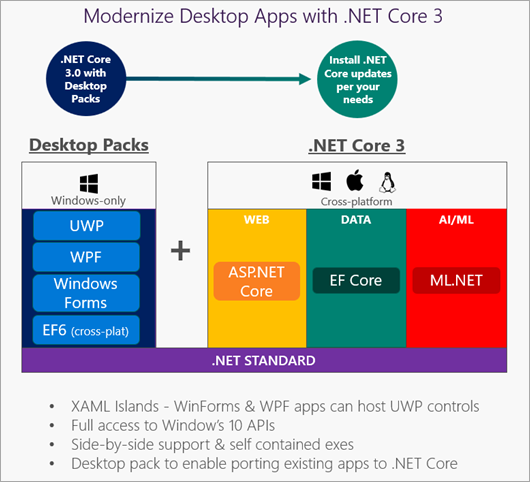

For many years PowerShell was a Windows-only thing that was part of the closed Windows ecosystem. In fact, here's a video of me nearly 12 years ago (I was working in banking) talking to Jeffrey Snover about PowerShell. Today, PowerShell is open source up at https://github.com/PowerShell with lots of docs and scripts, also open source. PowerShell is supported on Windows, Mac, and a half-dozen Linuxes. Sound familiar? That's because it's powered (ahem) by open source cross-platform .NET Core. You can get PowerShell Core 6.0 here on any platform.

Don't want to install it? Start it up in Docker in seconds with

docker run -it microsoft/powershell

Sweet. How about Raspbian on my ARMv7-based Raspberry Pi? I was running Raspbian Jessie, and PowerShell is supported on Raspbian Stretch (newer), so I upgraded from Jessie to Stretch (and tidied up and updated the firmware while I was at it) with:

$ sudo apt-get update
$ sudo apt-get upgrade
$ sudo apt-get dist-upgrade
$ sudo sed -i 's/jessie/stretch/g' /etc/apt/sources.list
$ sudo sed -i 's/jessie/stretch/g' /etc/apt/sources.list.d/raspi.list
$ sudo apt-get update && sudo apt-get upgrade -y
$ sudo apt-get dist-upgrade -y
$ sudo rpi-update
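The two sed lines are what actually point apt at Stretch instead of Jessie. If you'd like a way back, here's a minimal sketch that does the same substitution but keeps a .bak copy of each file first. switch_release is a hypothetical helper, and it relies on the -i.bak in-place syntax of GNU sed (which Raspbian ships).

```shell
# Hypothetical helper: replace one release codename with another in each
# given apt sources file, saving the original as "<file>.bak".
switch_release() {
  local from="$1" to="$2" f
  shift 2
  for f in "$@"; do
    sed -i.bak "s/${from}/${to}/g" "$f" || return 1
  done
}

# Usage from a root shell (e.g. after "sudo -i"):
#   switch_release jessie stretch /etc/apt/sources.list /etc/apt/sources.list.d/raspi.list
```

After that, carry on with the update, upgrade, and dist-upgrade steps as above; if something goes wrong, the .bak files hold the Jessie versions.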

Cool. Now I'm on Raspbian Stretch on my Raspberry Pi 3. Let's install PowerShell! These are just the most basic Getting Started instructions. Check out GitHub for advanced and detailed info if you have issues with prerequisites or paths.

NOTE: Here I'm getting PowerShell Core 6.0.2. Be sure to check the releases page for newer releases if you're reading this in the future. I've also used 6.1.0 (in preview) with success. The next 6.1 preview will upgrade to .NET Core 2.1. If you're just evaluating, get the latest preview as it'll have the most recent bug fixes.

$ sudo apt-get install libunwind8
$ wget https://github.com/PowerShell/PowerShell/releases/download/v6.0.2/powershell-6.0.2-linux-arm32.tar.gz
$ mkdir ~/powershell
$ tar -xvf ./powershell-6.0.2-linux-arm32.tar.gz -C ~/powershell
$ sudo ln -s ~/powershell/pwsh /usr/bin/pwsh
$ sudo ln -s ~/powershell/pwsh /usr/local/bin/powershell
$ powershell

Lovely.
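The version number appears twice in that download URL, so a couple of small functions make the steps easier to repeat when a newer release comes out. This is a sketch that assumes later releases keep the same asset naming as the 6.0.2 URL above (vX.Y.Z/powershell-X.Y.Z-linux-arm32.tar.gz); check the releases page before relying on it. Both function names are hypothetical.

```shell
# Hypothetical helper: build the ARM32 tarball URL for a given version,
# assuming the naming pattern of the 6.0.2 release holds.
pwsh_arm32_url() {
  local version="$1"
  printf 'https://github.com/PowerShell/PowerShell/releases/download/v%s/powershell-%s-linux-arm32.tar.gz\n' \
    "$version" "$version"
}

# Hypothetical helper: download and unpack into ~/powershell (or a
# directory given as the second argument), cleaning up the tarball.
install_pwsh_arm32() {
  local version="$1" dest="${2:-$HOME/powershell}"
  local tarball status=0
  tarball="$(mktemp)" || return 1
  if wget -O "$tarball" "$(pwsh_arm32_url "$version")"; then
    mkdir -p "$dest" && tar -xzf "$tarball" -C "$dest" || status=1
  else
    status=1
  fi
  rm -f "$tarball"
  return "$status"
}

# Usage:
#   install_pwsh_arm32 6.0.2
# then create the same pwsh/powershell symlinks as above.
```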

GOTCHA: Because I upgraded from Jessie to Stretch, I ran into a bug where libssl1.0.0 is getting loaded over libssl1.0.2. This is a complex native issue with interaction between PowerShell and .NET Core 2.0 that's being fixed. Only upgraded machines like mine will hit it, but it's easily fixed with sudo apt-get remove libssl1.0.0

Now this means my PowerShell build scripts can work on both Windows and Linux. This is a deeply trivial example (just one line), but note the "shebang" at the top, which tells Linux which interpreter to run a *.ps1 file with. That means I can keep using bash/zsh/fish on Raspbian, but still run "build.ps1" or "test.ps1" on any platform.

#!/usr/local/bin/powershell

dotnet watch --project .\hanselminutes.core.tests test /p:CollectCoverage=true /p:CoverletOutputFormat=lcov /p:CoverletOutput=./lcov
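For the shebang to take effect, it has to be the very first line and the file has to be executable. Here's a sketch of a hypothetical helper, add_ps1_shebang, that prepends the same shebang used above when a script doesn't already start with "#!", then runs chmod +x so ./build.ps1 can be launched directly from bash/zsh/fish.

```shell
# Hypothetical helper: make a .ps1 directly runnable on Linux.
# Defaults to the shebang from the post; pass a second argument to use
# another, e.g. '#!/usr/bin/env pwsh'. Single quotes keep an interactive
# bash from treating "!" as history expansion.
add_ps1_shebang() {
  local script="$1"
  local default_shebang='#!/usr/local/bin/powershell'
  local shebang="${2:-$default_shebang}"
  local tmp
  if [ "$(head -c 2 "$script")" != '#!' ]; then
    tmp="$(mktemp)" || return 1
    # Write the shebang, then the original contents, and copy back in
    # place so the file keeps its inode and ownership.
    { printf '%s\n' "$shebang"; cat "$script"; } > "$tmp" &&
      cat "$tmp" > "$script"
    rm -f "$tmp"
  fi
  chmod +x "$script"
}

# Usage:
#   add_ps1_shebang ./build.ps1 && ./build.ps1
```

Running it twice is harmless: the second call sees the existing "#!" line and only re-applies chmod +x.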

Here are a few totally random but lovely PowerShell examples:

PS /home/pi> Get-Date | Select-Object -Property * | ConvertTo-Json
{
  "DisplayHint": 2,
  "DateTime": "Sunday, May 20, 2018 5:55:35 AM",
  "Date": "2018-05-20T00:00:00+00:00",
  "Day": 20,
  "DayOfWeek": 0,
  "DayOfYear": 140,
  "Hour": 5,
  "Kind": 2,
  "Millisecond": 502,
  "Minute": 55,
  "Month": 5,
  "Second": 35,
  "Ticks": 636623925355021162,
  "TimeOfDay": {
    "Ticks": 213355021162,
    "Days": 0,
    "Hours": 5,
    "Milliseconds": 502,
    "Minutes": 55,
    "Seconds": 35,
    "TotalDays": 0.24693868190046295,
    "TotalHours": 5.9265283656111105,
    "TotalMilliseconds": 21335502.1162,
    "TotalMinutes": 355.59170193666665,
    "TotalSeconds": 21335.502116199998
  },
  "Year": 2018
}

You can convert between PowerShell objects, hashtables, JSON, and more.

PS /home/pi> $hash = @{ Number = 1; Shape = "Square"; Color = "Blue"}
PS /home/pi> $hash

Name     Value
----     -----
Shape    Square
Color    Blue
Number   1

PS /home/pi> $hash | ConvertTo-Json
{
  "Shape": "Square",
  "Color": "Blue",
  "Number": 1
}

Here's a nice one from MCPMag:

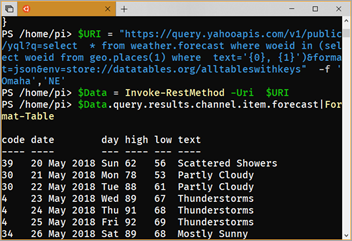

PS /home/pi> $URI = "https://query.yahooapis.com/v1/public/yql?q=select * from weather.forecast where woeid in (select woeid from geo.places(1) where text='{0}, {1}')&format=json&env=store://datatables.org/alltableswithkeys" -f 'Omaha','NE'
PS /home/pi> $Data = Invoke-RestMethod -Uri $URI
PS /home/pi> $Data.query.results.channel.item.forecast|Format-Table

code date        day high low text
---- ----        --- ---- --- ----
39   20 May 2018 Sun 62   56  Scattered Showers
30   21 May 2018 Mon 78   53  Partly Cloudy
30   22 May 2018 Tue 88   61  Partly Cloudy
4    23 May 2018 Wed 89   67  Thunderstorms
4    24 May 2018 Thu 91   68  Thunderstorms
4    25 May 2018 Fri 92   69  Thunderstorms
34   26 May 2018 Sat 89   68  Mostly Sunny
34   27 May 2018 Sun 85   65  Mostly Sunny
30   28 May 2018 Mon 85   63  Partly Cloudy
47   29 May 2018 Tue 82   63  Scattered Thunderstorms

Or a one-liner if you want to be obnoxious.

PS /home/pi> (Invoke-RestMethod -Uri "https://query.yahooapis.com/v1/public/yql?q=select * from weather.forecast where woeid in (select woeid from geo.places(1) where text='Omaha, NE')&format=json&env=store://datatables.org/alltableswithkeys").query.results.channel.item.forecast|Format-Table

Example: This won't work on Linux as it's using Windows-specific APIs, but if you've got PowerShell on your Windows machine, try out this one-liner for a cool demo:

iex (New-Object Net.WebClient).DownloadString("http://bit.ly/e0Mw9w")

Thoughts?

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.
