13 hours debugging a segmentation fault in .NET Core on Raspberry Pi and the solution was...

Debugging is a satisfying and special kind of hell. You really have to live it to understand it. When you're deep into it, you never know when it'll be done. When you do finally escape, it's almost always a DOH! moment.

I spent an entire day debugging an issue and the solution ended up being a checkbox.

NOTE: If you get a third of the way through this blog post and already figured it out, well, poop on you. Where were you after lunch WHEN I NEEDED YOU?

I wanted to use a Raspberry Pi in a tech talk I'm doing tomorrow at a conference. I was going to show .NET Core 2.0 and ASP.NET running on a Raspberry Pi so I figured I'd start with Hello World. How hard could it be?

You'll write and build a .NET app on Windows or Mac, then publish it to the Raspberry Pi. I'm using a preview build of the .NET Core 2.0 command line and SDK (CLI) I got from here.

C:\raspberrypi> dotnet new console
C:\raspberrypi> dotnet run
Hello World!
C:\raspberrypi> dotnet publish -r linux-arm
Microsoft Build Engine version for .NET Core
raspberrypi1 -> C:\raspberrypi\bin\Debug\netcoreapp2.0\linux-arm\raspberrypi.dll
raspberrypi1 -> C:\raspberrypi\bin\Debug\netcoreapp2.0\linux-arm\publish\

Notice the simplified publish. You'll get a folder for linux-arm in this example, but you could also publish for osx-x64, etc. Take the files from the publish folder (not the folder above it) and move them to the Raspberry Pi. This is a self-contained application that targets ARM on Linux, so once the prerequisites are installed, that's all you need.

I grabbed a microSD card, headed over to https://www.raspberrypi.org/downloads/ and downloaded the latest Raspbian image. I used etcher.io - a lovely image burner for Windows, Mac, or Linux - to write the image to the SD card. I booted up and got ready to install some prereqs. I'm only 15 minutes in at this point. Setting up a Raspberry Pi 2 or Raspberry Pi 3 is VERY smooth these days.

Here are the prereqs for .NET Core 2 on Ubuntu or Debian/Raspbian. Install them from the terminal, natch.

sudo apt-get install libc6 libcurl3 libgcc1 libgssapi-krb5-2 libicu-dev liblttng-ust0 libssl-dev libstdc++6 libunwind8 libuuid1 zlib1g

I also added an FTP server and ran vncserver, so I'd have a few ways to talk to the Raspberry Pi. Yes, I could also SSH in but I have a spare monitor, and with that monitor plus VNC I didn't see a need.

sudo apt-get install pure-ftpd
vncserver

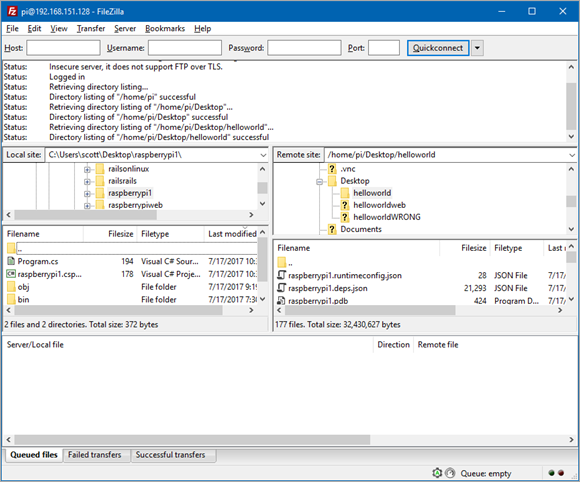

Then I fire up FileZilla - my preferred FTP client - and FTP over the publish output folder from my dotnet publish above. I put the files in a folder off my ~/Desktop.

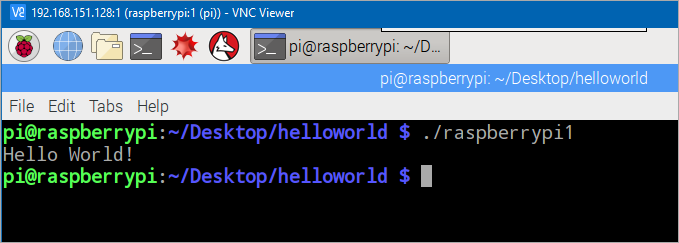

Then, from a terminal, I run:

pi@raspberrypi:~/Desktop/helloworld $ chmod +x raspberrypi

(Or whatever the name of your published "exe" is. It'll be the name of your source folder/project with no extension. As this is a self-contained published app, again, all the .NET Core runtime stuff is in the same folder as the app.)

pi@raspberrypi:~/Desktop/helloworld $ ./raspberrypi
Segmentation fault

The crash was instant...not a pause and a crash, but it showed up as soon as I pressed enter. Shoot.

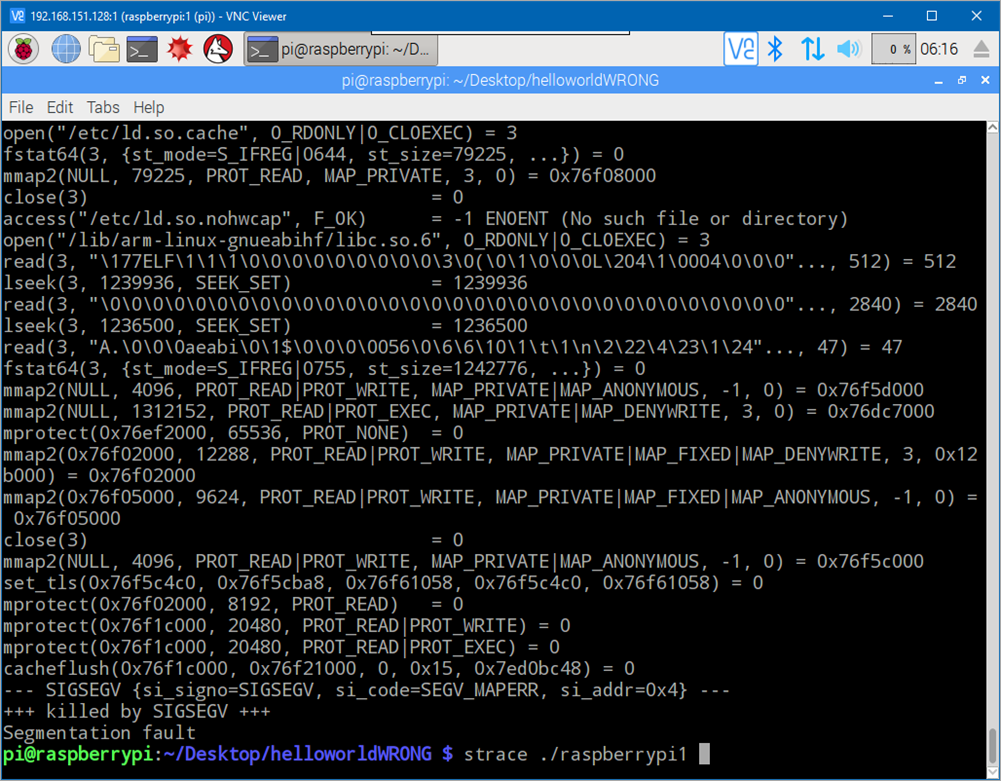

I ran "strace ./raspberrypi" and got this output. I figured maybe I had missed one of the prerequisite libraries, and I just needed to see which one and apt-get it. I can see the ld.so.nohwcap error, but that's a historical Debian-ism and more of a warning than a fatal error.

I used to be able to read straces 20 years ago, but much like my Spanish, my skills are only good at Chipotle. I can see it getting started: loading libraries, seeking around in them, checking file status, mapping files into memory, setting memory protection, and then it all falls apart. Perhaps we tried to do something inappropriate with some memory that just got protected? Or are we dereferencing a null pointer?

Maybe you can read this and you already know what is going to happen! I did not.

I run it under gdb:

pi@raspberrypi:~/Desktop/WTFISTHISCRAP $ gdb ./raspberrypi
GNU gdb (Raspbian 7.7.1+dfsg-5+rpi1) 7.7.1
Copyright (C) 2014 Free Software Foundation, Inc.
This GDB was configured as "arm-linux-gnueabihf".
"/home/pi/Desktop/helloworldWRONG/./raspberrypi1": not in executable format: File truncated
(gdb)

Ok, sick files?
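In hindsight, gdb's "not in executable format: File truncated" was the big clue. Here's a minimal Python sketch of one way to check for that kind of damage yourself. It's my approximation, not what gdb or its BFD library literally does. It reads the ELF header and checks whether the section header table described there would run past the end of the file. The name elf_is_truncated is made up for illustration.

```python
import struct

def elf_is_truncated(data: bytes) -> bool:
    """True if the section header table described by the ELF header runs past EOF.

    A rough approximation of one check behind gdb's "File truncated" message,
    not BFD's actual logic.
    """
    if len(data) < 16 or data[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    ei_class, ei_data = data[4], data[5]
    endian = "<" if ei_data == 1 else ">"  # 1 = little-endian (ARM Linux), 2 = big-endian
    # Field order after e_ident: e_type, e_machine, e_version, e_entry, e_phoff,
    # e_shoff, e_flags, e_ehsize, e_phentsize, e_phnum, e_shentsize, e_shnum, e_shstrndx
    if ei_class == 1:    # ELFCLASS32, e.g. linux-arm
        fmt = endian + "HHIIIIIHHHHHH"
    elif ei_class == 2:  # ELFCLASS64
        fmt = endian + "HHIQQQIHHHHHH"
    else:
        raise ValueError("unknown ELF class")
    if len(data) < 16 + struct.calcsize(fmt):
        return True  # not even a complete ELF header
    fields = struct.unpack_from(fmt, data, 16)
    e_shoff, e_shentsize, e_shnum = fields[5], fields[10], fields[11]
    return e_shoff + e_shentsize * e_shnum > len(data)
```

On the Pi you'd call it with something like elf_is_truncated(Path("raspberrypi1").read_bytes()). Linkers usually put the section header table at the end of the file, so bytes lost anywhere before it tend to trip this check. A mangled header can produce garbage offsets just as easily, though.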

I called Peter Marcu from the .NET team and we chatted about how he got it working and compared notes.

I was using a Raspberry Pi 2, he a Pi 3. Ok, I'll try a 3. 30 minutes later, new SD card, new burn, new boot, pre-reqs, build, FTP, run, SAME RESULT - segfault.

Weird.

Maybe corruption? Here's a thread about corrupted files on Raspbian Jessie 2017-07-05! That's the version I have. OK, I'll try the build of Raspbian from a week before.

30 minutes later, burn another SD card, new boot, pre-reqs, build, FTP, run, SAME RESULT - segfault.

BUT IT WORKS ON PETER'S MACHINE.

Weird.

Maybe a bad nuget.config? No.

Bad daily .NET build? No.

BUT IT WORKS ON PETER'S MACHINE.

Ok, I'll try Ubuntu Mate for Raspberry Pi. TOTALLY different OS.

30 minutes later, burn another SD card, new boot, pre-reqs, build, FTP, run, SAME RESULT - segfault.

What's the common thread here? Ok, I'll try from another Windows machine.

SAME RESULT - segfault.

I call Peter back and we figure it's gotta be prereqs...but the strace doesn't show we're even trying to load any interesting libraries. We fail FAST.

Ok, let's get serious.

We both have Raspberry Pi 3s. Check.

What kind of SD card does he have? SanDisk? Ok, I'll use SanDisk. But disk corruption makes no sense at that level...because the OS booted!

What did he burn with? He used Win32 Disk Imager and I used Etcher. Fine, I'll bite.

30 minutes later, burn another SD card, new boot, pre-reqs, build, FTP, run, SAME RESULT - segfault.

He sends me HIS build of a HelloWorld and I FTP it over to the Pi. SAME RESULT - segfault.

Peter is freaking out. I'm deeply unhappy and considering quitting my job. My kids are going to sleep because it's late.

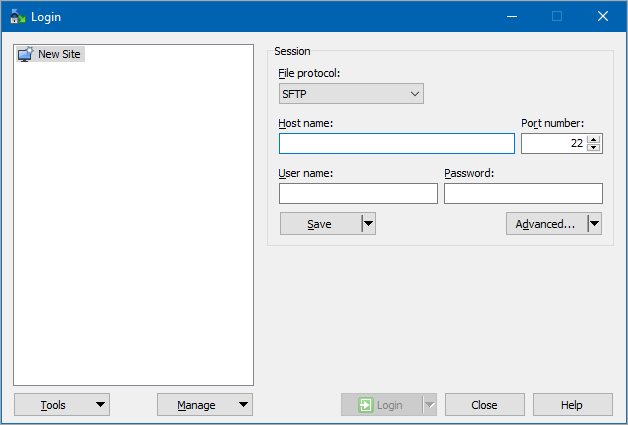

I ask him what he's FTPing with, and he says WinSCP. I use FileZilla, ok, I'll try WinSCP.

WinSCP's New Session dialog starts here:

I say, WAIT. Are you using SFTP or FTP? Peter says he's using SFTP so I turn on SSH on the Raspberry Pi and SFTP into it with WinSCP and copy over my Hello World.

IT FREAKING WORKS. IMMEDIATELY.

BUT WHY.

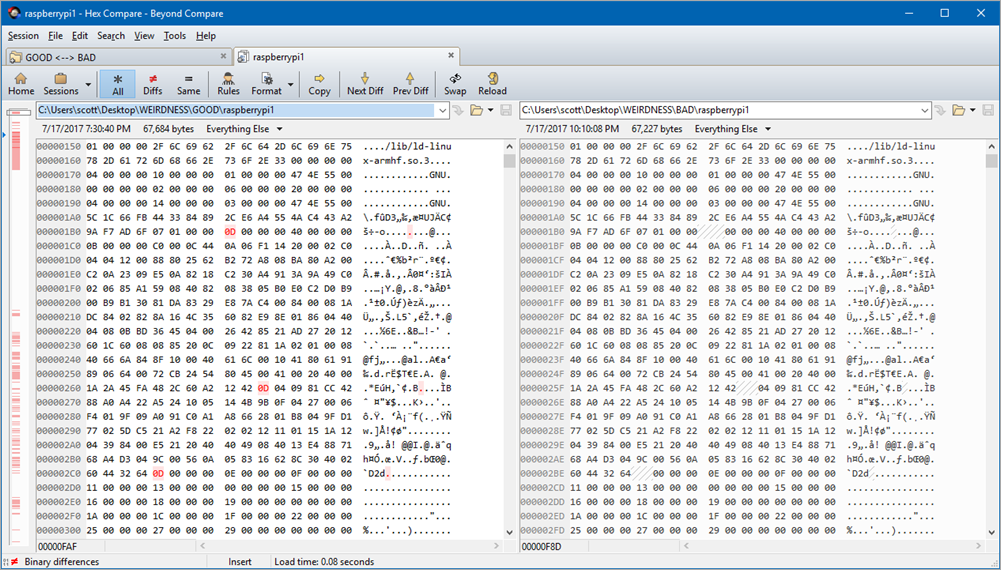

I make a folder called GOOD and a folder called BAD. I copy with FileZilla to BAD and with WinSCP to GOOD. Then I run a compare. Maybe some part of .NET Core got corrupted? Maybe a supporting native library?

pi@raspberrypi:~/Desktop $ diff --brief -r helloworld/ helloworldWRONG/
Files helloworld/raspberrypi1 and helloworldWRONG/raspberrypi1 differ

Wait, WHAT? The executables are different? The good one is 69,632 bytes and the bad one is only 67,684 bytes.

Time for a visual compare.
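If you'd rather not squint at a hex view, a few lines of Python will find the first byte where two copies diverge and show the bytes around it. (On the Pi, cmp reports the first differing byte too.) This is a small sketch; first_difference and hex_context are hypothetical helper names.

```python
def first_difference(a: bytes, b: bytes):
    """Offset of the first differing byte, or None if the two are identical."""
    for i, (x, y) in enumerate(zip(a, b)):
        if x != y:
            return i
    if len(a) != len(b):
        return min(len(a), len(b))  # one is a prefix of the other
    return None

def hex_context(data: bytes, offset: int, width: int = 8) -> str:
    """Space-separated hex bytes from `width` before `offset` to `width` after it."""
    start = max(0, offset - width)
    return " ".join(f"{byte:02x}" for byte in data[start:offset + width])
```

Read both copies with Path(...).read_bytes() and pass the offset from first_difference to hex_context for each. With this bug, the good copy shows a 0d exactly where the bad copy has already moved on to the next byte.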

At this point I saw it IMMEDIATELY.

0D is CR (13) and 0A is LF (10). I know this because I'm old and I've written printer drivers for printers that had both carriages and lines to feed. Why do YOU know this? Likely because you've transferred files between Unix and Windows once or thrice, perhaps with FTP or Git.

All the CRs are gone. From my binary file.

Why?

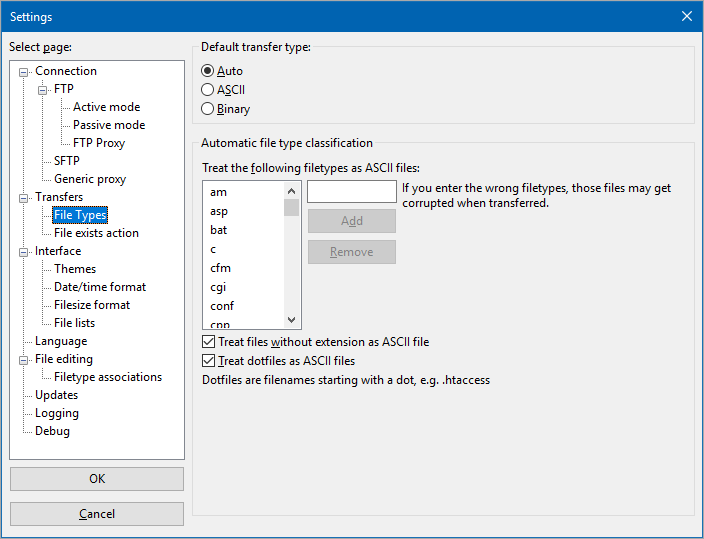

I went straight to settings in FileZilla:

See it?

Treat files without extensions as ASCII files

That's the default in FileZilla: take files that are just chilling, minding their own business, treat them as ASCII, and then randomly strip out carriage returns. What could go wrong? And it doesn't even look for CR LF pairs! No, it just looks for CRs and strips them. Classy.
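Here's a tiny Python simulation of why "just strip the CRs" is so destructive for a binary. strip_all_cr models the behavior described above; that's my reading of it, not FileZilla's actual code. crlf_pairs_to_lf is the gentler pairs-only conversion, included for comparison.

```python
import struct

def strip_all_cr(data: bytes) -> bytes:
    """Model of the behavior described above: drop every 0x0D byte."""
    return data.replace(b"\r", b"")

def crlf_pairs_to_lf(data: bytes) -> bytes:
    """Gentler conversion: only a CR immediately followed by LF is touched."""
    return data.replace(b"\r\n", b"\n")

# A fake "binary": three little-endian 32-bit integers; 13 is 0x0D, a CR byte.
blob = struct.pack("<3I", 1, 13, 256)
damaged = strip_all_cr(blob)

print(len(blob), len(damaged))         # 12 11
print(crlf_pairs_to_lf(blob) == blob)  # True: there are no CR LF pairs to convert
```

One lost byte shifts everything after it, which is exactly the kind of damage that makes a loader give up. Even the pairs-only conversion corrupts a binary that happens to contain the bytes 0D 0A, so for executables binary mode is the only safe choice.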

In retrospect I should have known this. And it wasn't even the switch to SFTP that fixed it; it was the switch to an FTP program with different defaults.

This bug/issue whatever burned my whole Monday. But, it'll never burn another Monday, Dear Reader, because I've seen it before now.

FAIL FAST FAIL OFTEN my friends!

Why does experience matter? It means I've failed a lot in the past and it's super useful if I remember those bugs because then next time this happens it'll only burn a few minutes rather than a day.

Go forth and fail a lot, my loves.

Oh, and FTP sucks.


About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.


I tend to find that when interacting with any Linux system, I get fewer errors using Bash on Windows to do things.

Another hint for anyone new to doing development work across devices, md5sum (or something similar) the files on either side to ensure they've arrived intact.
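In the same spirit, here's a minimal Python sketch that does what running md5sum on both machines would do, but for a whole publish folder at once. It assumes both copies are reachable from one machine, for example with the Pi's copy pulled back or mounted locally. compare_trees and md5_of are hypothetical helper names.

```python
import hashlib
from pathlib import Path

def md5_of(path, chunk_size=1 << 16):
    """Hex MD5 digest of a file (the same value md5sum prints), read in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def compare_trees(local_dir, copied_dir):
    """Relative paths under local_dir whose copy is missing or has a different MD5."""
    local_dir, copied_dir = Path(local_dir), Path(copied_dir)
    mismatches = []
    for src in sorted(p for p in local_dir.rglob("*") if p.is_file()):
        rel = src.relative_to(local_dir)
        dst = copied_dir / rel
        if not dst.is_file() or md5_of(src) != md5_of(dst):
            mismatches.append(rel.as_posix())
    return mismatches
```

MD5 is fine for catching accidental corruption like this, though it shouldn't be relied on for anything security-related.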

https://trac.filezilla-project.org/ticket/4235

Wow, eight years of ignoring this!

I would also have been annoyed with Filezilla for having that as a default and fired off an email to them to say, if in doubt, don't mess with it!

I used to spend days debugging horrific spaghetti code written by others (or myself earlier in my career). I hated it. Multi-threading was a whole new rollercoaster of debugging.

It's part of life though. We soldier on. ;)

...and I generally have the feeling that the longest debugging sessions tend to be solved by VERY simple fixes - like just (un-)checking a checkbox x-P.

Any ideas about services to find out issues like this in FTP? (checksum, etc)

I use MobaXterm, which is SSH and WinSCP combined. It's actually quite nice; I would check it out. I'm not related to them in any way btw...

$ scp -r bin/Debug/netcoreapp2.0/linux-arm/publish pi@192.168.128.151:~/helloworld

Added bonus: you don't need to run an FTP server on the Pi.

By the way, why the hell are you running Windows in the first place? It's not free (as in freedom), and is not even a decent operating system (such as Mac OS), so why even bother? Putting aside Microsoft's imperialism and many illegal practices to make Windows the only OS on this planet, does this system really have ANYTHING worth it?

Which is why I always insist on doing a full restore every six months to make sure it works.

The whole concept of changing files on transfer by default is very flawed in my mind. Every developer who does this should get banned from making such tools or get capital punishment.

I like the UI, but it seems like FileZilla's maintainer(s) are just unwilling to fix problems that don't suit their tastes (like the plain text password file for so many years).

You're absolutely right. A data transfer tool should not inspect a file and randomly change its content. Why change the content? WHY?

It pissed me off to no end in the 90s, and my blood boils when I think that such a problem still exists.

EOL encoding is a problem for editors, not file transfer programs.

I so hate that the Unix derivatives have never changed to something that makes a little more sense.

This is far from the only time this insidious little issue has wasted someone's time.

And lots of times it was, of course, the transfer type.

I've been burned too many times by file corruption, so I would have reached for md5sum fairly early to confirm that there was no difference between the original on my dev machine and the copy on the Pi.

If I were writing a tool with an "Auto" mode for ASCII vs Binary, I'd do a better job of detecting binary files than FileZilla apparently does. Pretty much the only bytes less than 0x20 that are reasonable in ASCII text are 0x09, 0x0A, and 0x0D. And if the bytes are greater than 0x7E, I'd look for valid UTF-8 sequences. (It's 2017: lots of "ASCII" files are UTF-8 now.) Any EXE would fail this heuristic. Or to put it another way, if the output of strings is significantly different from the original, it's not an ASCII file.
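Here's that heuristic as a short Python sketch. It follows the rules in the comment above: only tab, LF, and CR are allowed below 0x20, and anything above 0x7E must form valid UTF-8. The name looks_like_text is mine.

```python
def looks_like_text(data: bytes) -> bool:
    """Heuristic text check: few control bytes allowed, high bytes must be valid UTF-8."""
    allowed_controls = {0x09, 0x0A, 0x0D}  # tab, LF, CR
    for byte in data:
        if byte < 0x20 and byte not in allowed_controls:
            return False
    try:
        data.decode("utf-8")  # rejects stray high bytes that aren't valid UTF-8
    except UnicodeDecodeError:
        return False
    return True
```

An ELF executable fails immediately, because its fifth byte (the ELF class) is 0x01 or 0x02, a control character.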

Not to mention, many binary files have well known magic numbers that can be used fairly reliably for file type detection.
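Here's a Python sketch that checks a few well-known signatures. The PNG signature is a nice touch: it deliberately includes a CR LF pair and a lone LF so that exactly this kind of line-ending mangling can be detected. The table is a small sample, not an exhaustive list, and sniff is a hypothetical name.

```python
MAGIC_NUMBERS = {
    b"\x7fELF": "ELF executable or shared library",
    b"MZ": "Windows PE/DOS executable",
    b"PK\x03\x04": "ZIP archive (also .jar, .nupkg, .docx)",
    b"\x89PNG\r\n\x1a\n": "PNG image",
    b"GIF87a": "GIF image",
    b"GIF89a": "GIF image",
    b"\x1f\x8b": "gzip stream",
}

def sniff(data: bytes):
    """Return a description if `data` starts with a known signature, else None."""
    for magic, kind in MAGIC_NUMBERS.items():
        if data.startswith(magic):
            return kind
    return None
```

A transfer tool could refuse ASCII mode whenever sniff returns something.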

Finally, it's almost always the case that when you want to strip CRs they're immediately followed by LFs (\r\n → \n) and isolated CRs are rare and suspicious.
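That rule can be sketched in Python as a converter that only rewrites CR LF pairs and refuses outright when a lone CR is left over. The function name safe_crlf_to_lf is hypothetical.

```python
def safe_crlf_to_lf(data: bytes) -> bytes:
    """Convert CR LF to LF, but refuse if any CR is not part of a CR LF pair."""
    converted = data.replace(b"\r\n", b"\n")
    if b"\r" in converted:
        # An isolated CR survived the pair replacement: probably not a text file.
        raise ValueError("isolated CR found; refusing to convert")
    return converted
```

Failing loudly here would have turned a day of segfaults into one error message at transfer time.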

I'm ambivalent about its very existence, but Git's autocrlf behavior seems to work well most of the time.
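For comparison, Git's basic "is this binary?" test is, as far as I know, much blunter: a NUL byte in the first 8,000 bytes means binary, and binary files are left alone by autocrlf. Git's conversion code also looks at other statistics, which this one-rule Python approximation doesn't attempt.

```python
FIRST_FEW_BYTES = 8000  # the window Git checks, to my understanding

def git_style_is_binary(data: bytes) -> bool:
    """Approximation of Git's NUL-byte check: binary if a NUL appears early on."""
    return b"\x00" in data[:FIRST_FEW_BYTES]
```

Executables almost always contain NUL bytes near the start, which is one reason this crude rule works as well as it does.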

I used to think the ASCII option was useless because I could control line endings from my text editor. Then I downloaded some text files from OpenVMS in binary mode. There were no line endings at all. Turns out OpenVMS stores the line endings as record lengths in the filesystem metadata. Honestly, I'm only in my thirties.

It is irritating that FTP defaults to ASCII translation. It was always the first thing one did - switch to binary mode - before a file transfer. Back in the day when I searched for files using Archie.

(I know you have prior experience with Linux, but everyday is a potential new adventure with it).

What isn't clear to me is why the default isn't always binary.

"I'm deeply unhappy and considering quitting my job. "

I feel for you.

Best Regards

Matthias :-)

bin

prompt

Don't remember the last time I typed these, but they're etched into my memory from my sysadmin days in the world of MS-DOS.
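Python's standard-library ftplib makes the same distinction explicit. FTP.storbinary switches the session to TYPE I (binary) before sending, while FTP.storlines uses TYPE A (ASCII). This minimal sketch always takes the binary path; upload_binary is a hypothetical helper name.

```python
from ftplib import FTP

def upload_binary(ftp: FTP, local_path: str, remote_name: str) -> None:
    """Upload one file byte-for-byte.

    FTP.storbinary sends TYPE I before STOR, the programmatic equivalent
    of typing `bin` in the old command-line clients.
    """
    with open(local_path, "rb") as fh:
        ftp.storbinary(f"STOR {remote_name}", fh)
```

Against a real server you'd pass in an FTP object that has already connected and logged in. The host, credentials, and file names are whatever your setup uses.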

"Hey, Scott, how long will it take you to make a Hello World program on a raspberry PI ?"

Second is even more disgusting: they store passwords in plain text files. Some malware authors know this, so they build special modules for their "tools". I know of two cases where sites were infected over and over without any security holes in the site. Once they changed the FTP passwords, the hacks stopped. And your guess is right - the laptop with the saved passwords was infected.

There are too many caveats to deal with. One day it's a program like FileZilla modifying your data stream. Another day it's Gmail doing stuff, and another day it's Windows or the browser locking DLLs.

The point of the encrypted header is that the medium you use can't look into the archive and see all those 'dangerous' files.

Plus, you also get a CRC check for free for unfinished/broken transfers.
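ZIP archives store a CRC-32 for every member, so a damaged transfer shows up when you test or extract the archive. Here's a minimal sketch with Python's standard zipfile module. make_zip and first_bad_member are hypothetical helper names, and the member name is borrowed from the post.

```python
import io
import zipfile

def make_zip(name: str, payload: bytes) -> bytes:
    """Build an uncompressed (ZIP_STORED) archive in memory with one member."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", compression=zipfile.ZIP_STORED) as zf:
        zf.writestr(name, payload)
    return buf.getvalue()

def first_bad_member(archive: bytes):
    """Name of the first member whose CRC-32 check fails, or None if all pass."""
    with zipfile.ZipFile(io.BytesIO(archive)) as zf:
        return zf.testzip()
```

To exercise it, overwrite the CR bytes inside the stored payload with spaces so the archive's length and offsets stay intact; testzip then reports "raspberrypi1". A real CR-stripping transfer would also shift every offset in the archive, so in practice you'd more likely get a bad-zip-file error outright.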

So getting the working file from your colleague would have been a reasonable start because that would exclude about half of the differences that there could be between your two setups (the building of the file vs. your Pi environment).

I know it's easy to say in retrospect though.

https://wiki.filezilla-project.org/User:CodeSquid

If I could shoot this guy, I would.

I too faced similar issue with CVS version control.

It had a unique requirement of specifying the file type (ASCII/binary) when we add them (cvs add).

We didn't know that and did some mass checkin of GIF files using custom made tool.

After a few days we started getting complaints about some garbled images in the UI, and we couldn't figure out why they appeared perfectly fine on the (Windows) machines we had checked them in from.

I compared the MD5 hashes of two copies (the one I had checked out and the one packaged in the build) and found they were different. That raised serious doubts about our build process, but everything looked perfect in the build server logs (Jenkins running on Linux).

Finally, comparing both images in the HxD hex editor, it hit me: the hex patterns started changing after a few bytes, because every 0D 0A byte sequence in the original file had been converted to 0A.

The GIF renderer seems to be forgiving enough to render as much of the image as possible, even though it is corrupt.

Anyways everyone has their own share of debugging hell !

Jatan

{
  "files.eol": "\n",
  "terminal.integrated.shell.windows": "C:\\Windows\\sysnative\\bash.exe"
}

I bet you could wire up a build process in VSCode to run dotnet publish and then use the SFTP extension (or your own, external SFTP/FTP client if it has a CLI tool) to upload the result, making the whole process a simple keyboard shortcut away from pushing your updates to test.

The reason I use bash as my shell for the project is to open an SSH session to the target machine; now to actually run the executable to test it, I just have to hit Ctrl+` to access my remote shell.
