Inception-Style Nested Data Formats

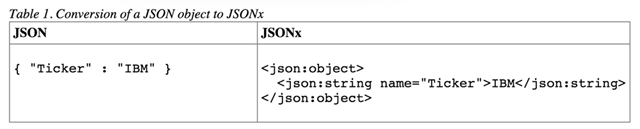

Dan Harper had a great tweet this week where he discovered and then called out a new format from IBM called "JSONx."

"JSONx is an IBM standard format to represent JSON as XML"

Oh my. JSONx is a real thing. Why would one inflict this upon the world?

Well, if you have an existing hardware product that is totally optimized (like wire-speed) for processing XML, and JSON as a data format snuck up on you, the last thing your customers want to hear is that, um, JSON what? So rather than updating your appliance with native wire-speed JSON, I suppose you could just serialize your JSON as XML. Send some JSON to an endpoint, switch to XML really quick, then back to JSON. And there we are.
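To make that round trip concrete, here is a minimal Python sketch of what a JSON-to-JSONx-and-back conversion might look like. The element names (`json:object`, `json:string`, and so on) and the namespace URI are written from memory of IBM's JSONx documentation, so treat them as assumptions; `to_jsonx`, `from_jsonx`, and the sample payload are hypothetical names made up for illustration.

```python
import json
import xml.etree.ElementTree as ET

# Namespace URI as recalled from IBM's JSONx docs; an assumption, not verified here.
JSONX_NS = "http://www.ibm.com/xmlns/prod/2009/jsonx"
ET.register_namespace("json", JSONX_NS)


def to_jsonx(value, name=None):
    """Turn a parsed JSON value into a JSONx-style XML element (hypothetical helper)."""
    if isinstance(value, dict):
        kind = "object"
    elif isinstance(value, list):
        kind = "array"
    elif isinstance(value, bool):  # must come before the number check: bool is an int
        kind = "boolean"
    elif isinstance(value, (int, float)):
        kind = "number"
    elif value is None:
        kind = "null"
    else:
        kind = "string"

    elem = ET.Element(f"{{{JSONX_NS}}}{kind}")
    if name is not None:
        elem.set("name", name)

    if kind == "object":
        for key, child in value.items():
            elem.append(to_jsonx(child, key))
    elif kind == "array":
        for child in value:
            elem.append(to_jsonx(child))
    elif kind == "boolean":
        elem.text = "true" if value else "false"
    elif kind in ("number", "string"):
        elem.text = str(value)
    return elem


def from_jsonx(elem):
    """Inverse of to_jsonx: rebuild the JSON value from the element tree."""
    kind = elem.tag.rsplit("}", 1)[-1]
    if kind == "object":
        return {child.get("name"): from_jsonx(child) for child in elem}
    if kind == "array":
        return [from_jsonx(child) for child in elem]
    if kind == "boolean":
        return elem.text == "true"
    if kind == "number":
        return json.loads(elem.text)
    if kind == "null":
        return None
    return elem.text or ""


# Hypothetical payload: JSON in, XML in the middle, JSON back out.
original = json.loads('{"ticker": "IBM", "price": 1.5, "active": true, "tags": ["a", null]}')
as_xml = ET.tostring(to_jsonx(original), encoding="unicode")
round_tripped = from_jsonx(ET.fromstring(as_xml))
print(as_xml)
print(json.dumps(round_tripped))
```

Every value picks up an element and usually a `name` attribute, which is a fair illustration of why the XML form is so much bulkier than the JSON it carries.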

But still, yuck. Is THIS today's enterprise?

In 2003 I wrote a small Operating System/Virtual Machine in C#. This was for a class on operating systems and virtual CPUs. As a joke when I swapped out my memory to virtual memory pages on disk, I serialized the bytes with XML like this:

Hilarious, I thought. However, when I showed it to some folks they had no problem with it.

DTDD: Data Transformation Driven Development?

That's too close to Enterprise reality for my comfort. It's a good idea to hone your sense of Code Smell.

Mal Sharmon tweeted in response to the original IBM JSONx tweet and pointed out how bad this kind of Inception-like nested data shuttling can get, when one takes the semantics of a transport and envelope, ignores them, then creates their own meaning. He offers this nightmarish Gist.

--

Http Response Code: 200 OK

--

{"errorCode":"ItemExists","errorMessage":"EJPVJ9161E: Unable to add, edit or delete additional files for the media with the ID fc276024-918b-489d-9b51-33455ffb5ca3."}

Here we see an HTML document, returned presumably as the result of an HTTP GET or POST. The response, as seen in the headers, is an HTTP 200 OK.

Within this HTML document, is a META tag that says, no, in fact, nay nay, this is not a 200, it's really a 409. HTTP 409 means "conflict," and it usually means that in the context of a request. "Hey, I can't do this, it'll cause a conflict, try again, user."

Then, within the BODY OF THE HTML, with a Body tag that includes a CSS class that itself includes some explicit semantic meaning, is some...wait for it....JSON. And, just for fun, the quotes are HTML entities: &quot;

What's in the JSON, you say?

{

"errorCode": "ItemExists",

"errorMessage": "EJPVJ9161E: Unable to add, edit or delete additional files for the media with the ID fc276024-918b-489d-9b51-33455ffb5ca3."

}

Error codes and error messages that include a unique error code that came from another XML document downstream. Oh yes.
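For a sense of what a client has to do to get at that error, here is a rough Python sketch of the unwrapping. The `escaped_body` string is a hypothetical stand-in for the text inside the HTML body (the real markup isn't reproduced here), and `unwrap_error` is an invented helper name.

```python
import html
import json

# Hypothetical stand-in for the entity-escaped JSON found inside the HTML body.
escaped_body = (
    "{&quot;errorCode&quot;:&quot;ItemExists&quot;,"
    "&quot;errorMessage&quot;:&quot;EJPVJ9161E: Unable to add, edit or delete "
    "additional files for the media with the ID "
    "fc276024-918b-489d-9b51-33455ffb5ca3.&quot;}"
)


def unwrap_error(body_text):
    """Undo the HTML entity layer first, then parse what is left as JSON."""
    return json.loads(html.unescape(body_text))


error = unwrap_error(escaped_body)
print(error["errorCode"])
print(error["errorMessage"])
```

And that is only two of the layers; the client still has to dig the "real" status out of the META tag and ignore the 200 it was actually given.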

But why?

Is there a reason to switch between two data formats? Sure, when you are fully aware of the reason, it's a good technical reason, and you do it with intention.

But if your system changes data formats a half-dozen times from the moment the data leaves its authoritative source on its way to the user, you really have to ask yourself (a half-dozen times!) WHY ARE WE DOING THIS?

Are you converting between data formats because "this is our preferred format"? Or because "we had nowhere else to stick it"?

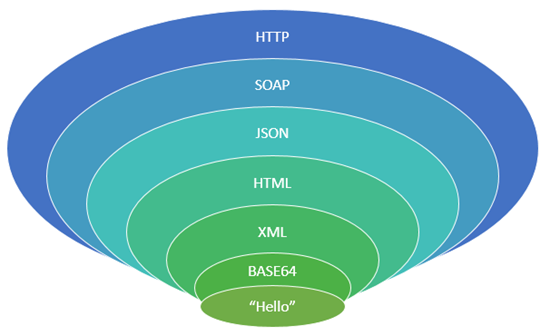

Just because I can take a JSON document, HTML encode it, tunnel it with a SOAP envelope, BASE64 that into a hidden HTML input, and store it in a binary database column DOES NOT MEAN I SHOULD.

Sometimes there are whole teams who exist only to perform a data format transformation as they move data to the next team.

Sound Off

What kinds of crazy "Data Format Inception" have you all seen at work? Share in the comments!

UPDATE: Here's an insane game online that converts from JSON to JSONx to JsonML until your browser crashes!

P.S. I can't find the owner of the BMW picture above, let me know so I can credit him or her and link back!

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.

I also worked with a system that occasionally needed to serialize its data to WDDX, an old ColdFusion XML data serialization format. Instead of just serializing when needed, objects in the C# domain model had property setters and getters that immediately serialized and deserialized to and from a single string backing field for the entire object. As I commented at the time, premature serialization is the root of all evil.

And yes, that's how a lot of people write web applications these days. And it's absurd, but they do it anyway, because it's 'the right way to do it', at least they think it is. My point: it's easy to laugh at the IBM example, but frankly the current webdev community is not being any more clever, on the contrary. So a typical case of pot-kettle-black.

The IBM example might not be what you'd do in that situation, but chances are they have a heck of a lot more experience with backwards compatibility on their systems than you do: DB2, a database which has been around for a long long time (first release was in '83), can still run applications which were written decades ago, simply because there are customers who run these apps. Those customers are their bread and butter; they don't write new apps every week, they keep the ones that work around for a long long time, because rewriting them might take a long time and a lot of work/money. The average web-developer might think that the world consists of mostly developers who write new stuff but that's simply not true: most software devs do maintenance on existing software, and thus are faced with problems like this: how to make sure existing large systems, which were perhaps booted up the first time before the developer was even interested in computers, keep working with modern new clients?

This 'workaround' of json-as-xml might look silly, but it's a way to keep existing applications alive without expensive rewrites. This is perhaps the best way to solve it, as it can make new clients work with existing back-ends (with perhaps a helper converter on that end, but that's it).

Not one of your best articles, Scott.

I worked in a company that made some B2B products. At one time we were tasked to transform/import data from a public data provider to make invoices and such... the format was XML, because that's what the government demands.

Sure enough, the data was XML... with a big fat CDATA chunk of CSV data ... fuck sake.

I've seen many examples where people just use 200 for everything (most probably because that's what the framework they're using does easily) and use their own payload to replace HTTP status codes. It's especially beyond me that the example you show is not ignorant of status codes, but actively decides to circumvent them.

If you're using HTTP, you should use HTTP. If you decide to send some crazy stuff over the wire, that's kind of up to you. That's not to say that I agree with JSON inside CSS inside HTML as a data format.

{

"type": "ItemAddedEvent",

"payload": "{\"id\":42,\"name\":\"foobar\"}"

}

Instead of just this:

{

"type": "ItemChangedEvent",

"payload": { "id": 42,"name": "foobar" }

}

Well, at least it's still only JSON...

(There is actually a reason for doing this: to make it easier for the client to deserialize the payload based on the type. But I don't think that reason is good enough to justify such a horrible design...)
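A small Python sketch of what the client ends up doing in each case. The `read_event` helper is hypothetical; the two inputs mirror the shapes above, using the same event type for both so they can be compared.

```python
import json

# The payload is itself a JSON document, escaped and stuffed into a string.
double_encoded = r'{"type": "ItemAddedEvent", "payload": "{\"id\":42,\"name\":\"foobar\"}"}'

# The payload is just a nested object.
nested = '{"type": "ItemAddedEvent", "payload": {"id": 42, "name": "foobar"}}'


def read_event(raw):
    """Parse an event; if the payload is a string, it needs a second parse."""
    event = json.loads(raw)
    payload = event["payload"]
    if isinstance(payload, str):
        # Second deserialization step, only needed for the string-wrapped form.
        payload = json.loads(payload)
    return event["type"], payload


print(read_event(double_encoded))
print(read_event(nested))
```

Both calls yield the same type and payload; the string-wrapped form just costs an extra parse plus a layer of escaping on the wire.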

<root>

<item key="MyKey" Value="MyValue" />

<item key="MyKey2" Value="MyValue2" />

</root>

His solution was for me to Base64 encode the data as tab delimited and embed it in a static SOAP header.

We have a real need for JSON to XML and XML to JSON -- a legacy XML based J2EE app that needs to be extended to support a proper RESTful web service which should ideally support both XML and JSON payloads.

However, the horrible idea that is JSONx does not meet our very real need nor can I imagine it meeting any real need.

XML vs CSV : The Choice is Obvious

There are so many other lovely examples on their site :D

XML'd XML

That product is a set of schemas and pipeline components which convert between HL7 (pipe and hat delimited EDI format for the healthcare sector) and XML. It seems ridiculous, but BizTalk is inherently XML-based for mapping, orchestrating, etc. and in that context it makes complete sense to have an XML representation.

I'm not saying JSONx doesn't look crazy, but sometimes there is a bigger picture to consider.

Also I'd add that SQL Server doesn't support JSON (except as a blob) but does support XML... Sometimes legacy is all we have to work with.

Javascript embedded in HTML embedded in a SQL query embedded in an ASP page to display videos embedded in OBJECT tags embedded in HTML embedded in the database.

For better or worse, enterprise vendors bet big on XML. Unfortunately (for them) that bet hasn't paid off and now they're faced with either going through a costly rewrite of their products or performing strange conversions like this to protect their investment.

Speaking of nested data formats, here's a fun json <-> jsonX <-> jsonML "game"/browser crash simulator: http://orihoch.uumpa.com/jsonxml/

The spec seemed innocent enough - XML request and response, nicely detailed document for both. The devil started in the details.

The request and response packets, while looking like XML, had to have a specific layout - new lines in the right place, no comments, certain fields on certain lines etc etc. Apparently the DVLA had written its own parser.

Then came the limitations - no more than 100 driver or vehicle requests at a time, no more than one single request a day. That's it.

Ok, so how do we send the request and get the response? Web service? No, that would be way way too easy. They wanted the request file SCPed to their own SSH server end point, with responses SCPed back again at some point in the next 24 hours.

Right, so we can do this over the internet, after all we had to exchange SSH keys with them in order to gain access? No. We had to have a dedicated leased line installed to connect us to the Government Secure Intranet. WHY IN GOD'S NAME?!

This was in 2009 - as far as I'm aware it's still running.

In fact, progress in the computer world mostly evolves by taking whatever came first and wrapping it in new covers... this has affected our minds to the point that this is the only way we know how to operate, and it becomes difficult for us to come up with new original ways to do things unless it is to continue to encapsulate...

Real business engineering sometimes requires solutions that appear awkward and asinine from the outside because the outside viewer only gets the view available from the porthole. There's usually quite a bit going on that can't be seen but which applies constraints and requirements that contort the eventual solution. Often the most onerous constraints include "it has to work on time and in budget."

Which doesn't mean that people don't sometimes do stupid things either from a sense of frivolity or from ignorance. Or possibly a lack of mastery. And, as per TDWTF, also doesn't preclude laughing at the absurdity of it all.

Still, I have to agree with Scott. JSONx? Yuck!

What could be worse? Hmmm... Base64 encoded PGP signed Fortran punchcard scans in TIFF with metadata?

Within this HTML document, is a META tag that says, no, in fact, nay nay, this is not a 200, it's really a 409.

This is actually 'industry-standard practice'. For a real-world example which is used just about everywhere, try cXML

Relevant section:

Because cXML is layered above HTTP in most cases, many errors (such as HTTP

404/Not Found) are handled by the transport.

Which would be fine, except that the cXML status codes mimic HTTP status codes. So yeah, a cXML OK is 200, 204 is No Content, and so on.

So, you can hit a webservice, get an HTTP 200, and as a response a gem like this:

<cXML payloadID="9949494@supplier.com"

timestamp="2000-01-12T18:39:09-08:00" xml:lang="en-US">

<Response>

<Status code="200" text="OK"/>

</Response>

</cXML>

I think Scott probably understands this. Personally, I don't think he's posting a critique on IBM. I believe he's just highlighting the irony of our situation on a broader scale -- the example being that JSON, a lightweight protocol intended to displace and consolidate heavier protocols, actually necessitated and begat another protocol that did not need to exist beforehand.

We'll never get away from legacy protocols and systems.

-Oisin

Application => Sql 2000 Stored Procedure => Invokes DTS Package => Runs VB Module => Loads .NET Assembly => Invokes Remote Web Service.

My understanding is that this is still running.

I can't find the owner of the BMW picture above, let me know so I can credit him or her and link back!

Hm, that's a problem, because it's crazy Russia :) All I can say about his/her car registration number is that it's registered in Moscow Oblast. I can't find it in public databases...

Say I take in a csv file and convert it to json, do I need a new format called CSVJson? Or if I use an ORM to bring in sql data to json do I need a SQLJson format that has everything listed as table and column properties?

Or to further illustrate the point with the given example, why isn't the XML that is created just <ticker>IBM</ticker>?

To try to create a whole new standardized format for representing json as xml is, at least in my opinion, the main issue here and not the fact that you might have a use case where you need to convert from json to xml or vice versa. Such as this website http://www.utilities-online.info/xmltojson/ which manages to do it without the need for JSONx.

So I think people's examples saying that front end developers do it, or BizTalk does it, or whatever are misguided. In those cases they aren't creating a new intermediary standardized format, they are just doing a translation/transformation.

Someone I know is a sales rep for a big company and he goes to stores to write down orders and have them delivered to the store. All sales reps have laptops. But since he's not very good with laptops he prefers to write the orders down. In the evening when he gets home, he copies his written notes to an excel template. Then he prints the excel document and faxes it over to the headquarters in Brussels. There sits a guy who receives the fax and types all of this over into an e-mail. This e-mail gets sent to the headquarters in Germany where they manually enter it into their tracking system.

Of course, tracking of these orders follows the same path: when the client calls him, he calls Brussels HQ, they call HQ in Germany to get an update on the order and then all the way back down the chain.

As for transformation in the same format (object, json, xml, etc), this is done all the time. It is rare that the data that you want to use in an app is stored in exactly the shape you want to use it in every scenario.

As for serialization ("flattening data structures to a text format"): unless you are using a language like node or you are directly exposing your database that stores objects in json, you are likely going to be doing some serialization to expose your data. It's up to you what formats you want to serialize your data in. Json seems to be the common option these days.

What it does:

1) Binary serialize ClaimsPrincipal (let's call it CP_bin)

2) Encrypt and compress CP_bin --> CP_bin_crypt_zip

3) Base64 encode CP_bin_crypt_zip --> CP_bin_crypt_zip_base64

4) Place CP_bin_crypt_zip_base64 in an XML --> CP_bin_crypt_zip_base64_xml

5) Base64 encode CP_bin_crypt_zip_base64_xml --> CP_bin_crypt_zip_base64_xml_base64

6) Write CP_bin_crypt_zip_base64_xml_base64 into HTTP response cookie, which by this time weighs several KB and spans multiple cookies (split into 2KiB chunks by ChunkedCookieHandler)

Many people faced frustration with the large cookies (e.g. http://stackoverflow.com/questions/7219705/large-fedauth-cooke-fedauth4-with-only-7-claims)

How it might be improved:

1) Have a custom serializer that shortens the claims namespace

3) Base85 encode rather than base64

4) Do we really need XML with fully qualified namespace here?

5) Escape the characters that are invalid in cookies, rather than base64 it
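A rough Python sketch of the size arithmetic behind those layers, under loud assumptions: the 3000-byte random payload is a hypothetical stand-in for the encrypted, compressed principal, and the XML wrapper is left out entirely so only the encoding overhead shows.

```python
import base64
import os

# Hypothetical stand-in for CP_bin_crypt_zip: 3000 bytes of incompressible data.
payload = os.urandom(3000)

once = base64.b64encode(payload)    # step 3: first Base64 layer
twice = base64.b64encode(once)      # step 5: Base64 again (XML wrapper omitted here)
b85 = base64.b85encode(payload)     # alternative: a single Base85 layer

print(len(payload), len(once), len(twice), len(b85))
# Base64 turns every 3 bytes into 4 characters, so 3000 -> 4000 -> 5336.
# Base85 turns every 4 bytes into 5 characters, so 3000 -> 3750.
```

One caveat on the Base85 idea: Python's `b85encode` alphabet includes `;`, which is a delimiter in cookie headers, so that output would still need escaping before it could go into a cookie value.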

Sure, but did you do it with a partner on the same keyboard?

At that same company, there was a piece of the app that used a SOAP envelope to transfer data to/from a mainframe stack (COBOL, Assembler). At the time I thought, "that's stupid!" but after getting to know how the system (Java based) dealt with the EBCDIC translation, I knew that SOAP was a better way. Easier to troubleshoot and get going when issues came up.