Managed Snobism

There was a great comment last week on a recent post where I was trying to get a Managed Plugin working with an application that insisted its plugins be C++ DLLs implementing specific virtual methods.

Here's the comment with my emphasis:

Great article. It would be nice to see a little less "managed snobism". Personally, I don't need to use up 100MB of my memory with a framework just to let me generate 32x32 bitmaps. So I'm grateful that the managed route is not the default. Remember, 90% of functions in .NET are just wrappers around the underlying API functions, so in effect, all they do is slow you down, while giving you convenience.

This reminded me of an article I did a few years ago when folks were still asking silly questions like "Is your application Pure .NET?" The article was called The Myth of .NET Purity and was published up on MSDN under an article series called ".NET in the Real World." To this day I'm still surprised that they let me publish it.

The (interestingly anonymous) commenter says: "...so in effect, all they do is slow you down, while giving you convenience." Well, sure. Everyone knows this quote:

Any problem in Computer Science can be solved with another layer of indirection.

It's a great quote. As an aside, the quote is attributed to nearly every smart Computer Scientist, including David Wheeler, Butler Lampson and Steven M. Bellovin. Lampson says it was Wheeler, but it was one of these three guys.

But a game developer at Sun adds a clever touché to the old adage:

The two software problems that can never be solved by adding another layer of indirection are that of providing adequate performance or minimal resource usage. - Jeff Kesselman

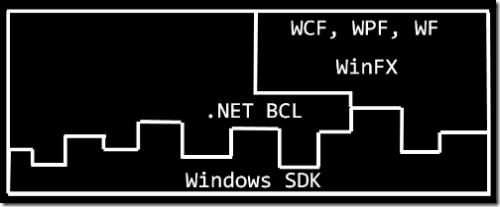

And he's right. Of course, .NET is a (most excellent layer of) Managed Spackle over the Win32 API. But it's really GOOD spackle. It's so good that we get collectively frustrated when a new API (SideShow, AzMan) doesn't have a good initial managed API (SideShow does now). A nice, clean managed API adds a fantastic amount of convenience in exchange for a very reasonable performance hit.

The performance hit - which I haven't personally measured - is no doubt less than even the most trivial of network calls. How much overhead is added? Not much.

Approximate overhead for a platform invoke call: 10 machine instructions (on an x86 processor)

Approximate overhead for a COM interop call: 50 machine instructions (on an x86 processor)

Gosh, that isn't much. Sure, there are always scenarios we conceive of that could add up, but that's what profiling on a case-by-case basis is for.
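Here is a minimal sketch of that kind of case-by-case measurement. It is written in Python rather than .NET purely for illustration. It times a direct call against the same call routed through one extra forwarding function. The names `work`, `wrapper` and `per_call_ns` are hypothetical. The extra layer is only an analogy for a managed wrapper over a native API; this is not a measurement of P/Invoke or COM interop.

```python
import timeit

def work(x: int) -> int:
    # The "real" operation being called.
    return x + 1

def wrapper(x: int) -> int:
    # Hypothetical thin layer of indirection that only forwards the call,
    # standing in (by analogy) for a managed wrapper over a native API.
    return work(x)

def per_call_ns(fn, number: int = 200_000) -> float:
    """Best-of-5 average time per call of fn(1), in nanoseconds."""
    timer = timeit.Timer(lambda: fn(1))
    best = min(timer.repeat(repeat=5, number=number))
    return best / number * 1e9

if __name__ == "__main__":
    direct = per_call_ns(work)
    wrapped = per_call_ns(wrapper)
    print(f"direct:  {direct:.1f} ns/call")
    print(f"wrapped: {wrapped:.1f} ns/call")
    print(f"added by the extra layer: {wrapped - direct:.1f} ns/call")
```

The absolute numbers depend entirely on the machine and runtime. The point is to measure the extra layer in your own scenario rather than guess at it.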

If .NET Purity is a myth, and the whole thing is just there to make our lives easier, then this is an easy trade-off. I just remember that I can code in C#/VB.NET for a small cost: I get speed of dev and give up speed of execution. I can code in C++, give up (a smidge of) speed of dev and gain (possibly) speed of execution. I can code in ASM and give up lots of productivity in exchange for my immortal soul and a really fast program. Or I can go to heaven, pursue beauty if I like, and give up enough performance to cause a scandal.

But it's not a simple trade-off, certainly not at the method or even the component level. William Caputo makes a similar point, with emphasis mine:

...this calculation is done unconsciously by those programmers who hear "we're trading efficiency for productivity." It's why they are reluctant to take a serious look at higher-level languages. A one-time productivity hit to get faster run-time performance certainly seems like a good trade-off, but the flaw in the argument is that productivity measurement is not reset with each task. It's cumulative. Unlike a programming assignment ("Implement Quick Sort Please"), productivity is measured across an entire solution (whether a build script or a trading system) -- and not just the first writing of the code, but throughout its useful lifetime (the vast majority of coding time is spent changing, or maintaining existing code).

In the real world, it's not "write once, run forever"; it's "write a bit, run a bit, change a bit, run a bit", and so on. I am not saying that run-time efficiency isn't important. It is. The best way to compare run-time efficiency and programmer productivity is not at the micro level, but at the macro level.

Yes, .NET adds overhead. Certainly not enough to worry about for business apps, given the productivity gains. We're not writing device drivers here. In my original example, where I want to write managed plugins for the Optimus Keyboard, the keyboard tops out at 3 frames per second, so performance isn't a concern (nor would it be even if I needed 30 fps).
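To put the 3 fps figure in perspective, here is a rough budget calculation, again sketched in Python. It makes three assumptions. First, it uses the 50-instruction COM interop figure quoted earlier. Second, it assumes a hypothetical 1,000 interop calls per frame. Third, it assumes a deliberately conservative rate of one billion instructions per second. None of these are measurements of the Optimus Keyboard or its SDK.

```python
# Back-of-envelope check: how much of a frame's time budget does interop overhead use?
# All inputs are illustrative assumptions, not measurements.

def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to produce one frame at the given frame rate."""
    return 1000.0 / fps

def overhead_fraction(calls_per_frame: int, instructions_per_call: int,
                      instructions_per_second: float, fps: float) -> float:
    """Share of one frame's budget spent purely on per-call interop overhead."""
    overhead_s = calls_per_frame * instructions_per_call / instructions_per_second
    budget_s = frame_budget_ms(fps) / 1000.0
    return overhead_s / budget_s

if __name__ == "__main__":
    # Assumed: 1,000 calls per frame (hypothetical), 50 instructions per call,
    # 1e9 instructions per second.
    for fps in (3, 30):
        frac = overhead_fraction(1_000, 50, 1e9, fps)
        print(f"{fps} fps: {frame_budget_ms(fps):.1f} ms per frame, overhead {frac:.3%} of it")
```

Under those assumptions, the overhead is 0.05 ms per frame. That is about 0.015% of the roughly 333 ms budget at 3 fps, and about 0.15% of the roughly 33 ms budget at 30 fps.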

If being a Managed Code Snob is wrong, I don't wanna be right.

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.

Reg Braithwaite has a post about a related idea: http://weblog.raganwald.com/2007/03/why-why-functional-programming-matters.html

Factoring (or decomposing) your program lets you separate its concerns, making your program better (maintainable, understandable, flexible). Languages with more ways to factor your program, to separate its concerns, are more powerful (more expressive) than those with fewer.

Here's a great illustration. A handyman has various tools; you wouldn't use a screwdriver to drive a nail into the wall, right? Likewise, you wouldn't use a hammer to wipe your mirrors clean, right? Every situation has its perfect toolset.

And we just happen to be business app developers...so bring on the coding efficiencies...bring on .NET.

Reminds me of a conversation I had not a week ago with a friend of mine (he's got about 3 years' experience) whom I recently turned on to O/R mapping (specifically, WilsonORMapper). He kept bringing up performance as an issue, and it was driving me insane because he isn't writing an application where it's going to be an issue (he's working on a WinForms app that uses SQL Express as its database, deployed to one machine, with the possibility that other instances of the app might access a DB on another machine on the network). I asked him what made him think performance would be an issue, and he didn't seem to have a good reason; I don't know what experiences have led him to believe it would be.

Anyway, one other reason to write 'pure .NET' when possible is the hope that you might run the same app, with few changes, against Mono; the more you mix non-.NET code with .NET code, the harder that becomes. Mono is continuing to mature, and I would hate to lock myself out of the possibility of having my code work with it.

I started out programming in assembly 25 years ago. There were times when I would still drop down to it for specific things from C, and later C++, but those cases got increasingly rare. With .NET I wouldn't even dream of dropping to C or C++ to get something done with fewer resources or more quickly, because there are actually cases where managed code is *faster* than unmanaged C++ code (mainly to do with resource allocation and object retrieval times).

.NET is a tool. And it is a poor craftsman who blames their tools...

You're absolutely right, the performance of a system should include the time it takes to enhance it between versions - programmer productivity most certainly should be included in the perf. calculation.

What is it that makes huge populations of developers think they're working on a Ferrari when their app is really just a Pinto?

"I'm writing a web app that pulls data from a database and puts it on a web page. I never use 'foreach' because I heard it's slower than explicitly iterating with a for loop."

Wake up!

-/rant-

Not having to worry about pulling in arcane headers and libraries to do something as simple as constructing a stack or queue, or, God forbid, doing some memory streaming without crashing the app because you made an error clearing out some pointers, is a B-L-E-S-S-I-N-G.

All this alpha-male chest thumping about "real" programmers doing it in C or C++ is such hogwash. If you really want to thump your chest, do it in assembly and be just as efficient and productive and performant - dare ya.

Until then, I'm with the .NET Snobs. Scott: Get some t-shirts made up!

Eventually Microsoft's language and framework designers will hold C# and .NET in the same regard as they now hold Classic VB. I'm all in favor of developing new programming paradigms, but when a vendor suddenly withdraws support for the current paradigm in order to accelerate migration to the new paradigm, that has a significant effect on productivity.

I developed 200K+ lines of VB code and 100K lines of C++ code over an 8-year period. Now I am porting all of that VB code to C++. I'd much rather be porting it to C#. But I fear that MS will someday do to C# what they have done to VB. I can't afford to rewrite that code again if MS decides to withdraw support for C#.

BTW, I just read that Visual FoxPro is going to be supported until 2015. If they can support FoxPro, which is much older, then they could certainly support Classic VB.

As I said, I'd rather be using C#. Programming and debugging a large system in C++ is painfully slow in comparison. But at least I have a reasonable degree of certainty that the language won't be abandoned any time soon.

I haven't ever had a performance issue in .Net, and when I have benchmarked anything I have always been surprised at how fast things run (my thinking is that the compilation from IL to native code is very good). When you look at programming as a whole and where people are having performance issues today (like on the web), .Net is outside of that and in a lot of ways actually solves performance issues; the rest can be left up to Moore's Law to fix.

The second is tied to IL and the JITter. I'm still not convinced of the quality of the code generated by the JITter, and it's hard to get away from. With native code, it's relatively inexpensive to drop down to assembly for a few functions, and I've seen enough people get decent performance boosts by doing so that I'd like to preserve the option. Yes, you can do something similar in .net, but it's harder, and the cost of thunking to native code makes the payoff a lot less. Also, despite the garbage collector, I still find myself managing objects a lot to get decent performance. (Hello IDisposable!) There's also the problem with plugins using different versions of the framework. It's bad enough that MS has said that you shouldn't use .net for Office or shell plug-ins.

Who could ever need more?

A commenter said "I think the performance overhead for managed code is overrated, just look at the XNA video at channel9 where they show a 3D car game running on .NET in the highest HD resolution 1080p."

Actually, the CPU does nothing here. Most of the time is spent rendering the scene, which is done by the graphics card. You could write this game in JavaScript (an interpreted language) and end up with the same speed.

If you really want to compare .NET and non-.NET code, try something that does real work, such as image processing.
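The commenter's argument can be made concrete with a simple bottleneck model, sketched in Python. If CPU and GPU work overlap, the frame takes as long as the slower of the two. Slowing down CPU-side code therefore changes nothing until it overtakes rendering, whereas in CPU-bound work like image processing the slowdown shows up in full. The millisecond figures are hypothetical. The model is a simplification that ignores synchronization points and driver overhead.

```python
def frame_time_ms(cpu_ms: float, gpu_ms: float, cpu_slowdown: float = 1.0) -> float:
    """Frame time when CPU and GPU work fully overlap, so the slower side sets the pace.

    cpu_slowdown models CPU-side code running slower (e.g. 2.0 = twice as slow).
    Simplified model: real pipelines have sync points and queueing.
    """
    return max(cpu_ms * cpu_slowdown, gpu_ms)

if __name__ == "__main__":
    # Hypothetical GPU-bound game frame: 4 ms of CPU work, 14 ms of rendering.
    print(frame_time_ms(4.0, 14.0))       # baseline
    print(frame_time_ms(4.0, 14.0, 2.0))  # CPU code twice as slow: frame time unchanged
    print(frame_time_ms(4.0, 14.0, 4.0))  # four times as slow: CPU becomes the bottleneck

    # Hypothetical CPU-bound image filter: 30 ms of CPU work, 2 ms to display.
    print(frame_time_ms(30.0, 2.0, 2.0))  # the slowdown shows up in full
```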

"Approximate overhead for a platform invoke call: 10 machine instructions (on an x86 processor)

Approximate overhead for a COM interop call: 50 machine instructions (on an x86 processor)"

This is obviously not true. Marshalling structures alone goes way, way over a thousand CPU instructions. And you have to add to this all the stack walking in the CLR.

Good point, compare Paint Shop Pro to Paint.NET. I haven't noticed any difference in performance when using them.

Java isn't there yet with GUI stuff - as humans we're just too adept at noticing discrepancies between the real thing and an attempt to copy it. Look at the way we judge each other's personal appearances: a nose (like mine) extended just a few millimeters too far, or a chin arched just a couple of millimeters in either direction, and we notice it right away. Same thing with software user interfaces.

Getting back on track with my comment... C# (or VB.NET) is spot on the mark with the ability to easily create attractive UIs in a short period of time. And business users don't care how many processing instructions are going on behind the scenes - they just like that it looks great and didn't take forever to build.

And as a consultant I like that my project manager thinks I'm a genius because I can whip out a nice-looking user interface in a very short period of time using .NET.

This matches the argument I make when I try to convince a potential customer to choose .NET: "No, it's not really slower for your intended purposes - and we will gain a huge speed-up on development!"