NuGet Package of the Week #9 - ASP.NET MiniProfiler from StackExchange rocks your world

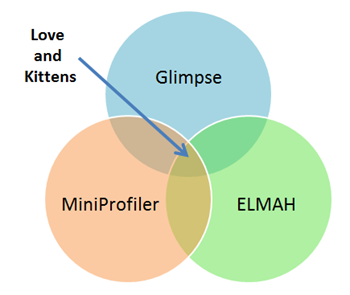

I LOVE great debugging tools. Anything that makes it easier for me to make a site correct and fast is glorious. I've talked about Glimpse, an excellent Firebug-like debugger for ASP.NET MVC, and I've talked about ELMAH, an amazing logger and error handler. Now the triad is complete with MiniProfiler, my Package of the Week #9.


Yes, #9. I'm counting "System.Web.Providers" as #8, so phooey. ;)

Hey, have you implemented the NuGet Action Plan? Get on it, it'll take only 5 minutes: NuGet Action Plan - Upgrade to 1.4, Setup Automatic Updates, Get NuGet Package Explorer. NuGet 1.4 is out, so make sure you're set to automatically update!

The Backstory: I was thinking that, since the NuGet .NET package management site is starting to fill up, I should start looking for gems (no pun intended) in there. You know, really useful stuff that folks might otherwise not find. I'll look mostly for open source projects, ones I think are really useful. I'll look at how they built their NuGet packages, whether there's anything interesting about the way they designed the out-of-the-box experience (and anything they could do to make it better), as well as what the package itself does.

This week's Package of the Week is "MiniProfiler" from StackExchange.

Glimpse, ELMAH and MiniProfiler are each small, bad-ass LEGO pieces that make debugging, logging and profiling your ASP.NET application that much more awesome.

So what's it do? It's a production profiler for ASP.NET. Here's what Sam Saffron says about this great piece of software that he, Jarrod Dixon and Marc Gravell worked on... and hold on to your hats.

> Our open-source profiler is perhaps the best and most comprehensive production web page profiler out there for any web platform.

Whoa. Bold stuff. Is it that awesome? Um, ya. It works in ASP.NET, MVC, Web Forms, and Web Pages.

The powerful stuff here is that this isn't a profiler like you're used to. Most profilers are heavy: they plug into the runtime (the CLR, perhaps), and you'd avoid messing with them in production. Sometimes people will do "poor man's profiling" with high-performance timers and log files, but there's always a concern that it'll mess up production. Plus, digging around in logs and stuff sucks.

MiniProfiler will profile not only what's happening on the page and how it renders, but also individual blocks of code whose scope you control with using() statements, and even database access. Each one is more amazing than the last.

First, from an ASP.NET application, install the MiniProfiler package via NuGet. Then decide when you will profile. You can't profile everything, so do you want to profile local requests, just requests from administrators, or requests from certain IPs? Start it up in your Global.asax:

```csharp
protected void Application_BeginRequest()
{
    if (Request.IsLocal) { MiniProfiler.Start(); } //or any number of other checks, up to you
}

protected void Application_EndRequest()
{
    MiniProfiler.Stop(); //stop as early as you can, even earlier with MvcMiniProfiler.MiniProfiler.Stop(discardResults: true);
}
```
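MiniProfiler leaves the "should I profile this request?" decision entirely to you. One way to keep that decision readable and testable is to pull it into a small policy object. The sketch below is hypothetical (`ProfilingPolicy` is not part of MiniProfiler) and assumes you can supply three facts per request: whether it is local, whether the user is an administrator, and the client IP address.

```csharp
using System.Collections.Generic;
using System.Net;

// Hypothetical policy object; MiniProfiler itself has no such type.
public class ProfilingPolicy
{
    readonly HashSet<IPAddress> allowedIps;

    public ProfilingPolicy(IEnumerable<IPAddress> allowedIps)
    {
        // IPAddress overrides Equals/GetHashCode, so set lookups compare addresses by value
        this.allowedIps = new HashSet<IPAddress>(allowedIps);
    }

    // Profile local requests, administrators, or requests from an allow-listed IP.
    public bool ShouldProfile(bool isLocal, bool isAdmin, IPAddress clientIp)
    {
        return isLocal || isAdmin || (clientIp != null && allowedIps.Contains(clientIp));
    }
}
```

In `Application_BeginRequest` you might feed it `Request.IsLocal` and the client address parsed with `IPAddress.TryParse`. The authenticated user is generally not known yet at BeginRequest, so an administrator check may fit better later in the pipeline; the `Stop(discardResults: true)` option mentioned in the comment above looks suited to that case.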

Add a call to render MiniProfiler's includes in a page, usually the main layout, after wherever jQuery is added:

```html
<head>
    <script type="text/javascript" src="https://ajax.googleapis.com/ajax/libs/jquery/1.6.1/jquery.min.js"></script>
    @MvcMiniProfiler.MiniProfiler.RenderIncludes()
</head>
```

Then, if you like, put some using statements around things you want to profile:

```csharp
using System.Threading;
using System.Web.Mvc;
using MvcMiniProfiler;

public class HomeController : Controller
{
    public ActionResult Index()
    {
        var profiler = MiniProfiler.Current; // it's ok if this is null

        using (profiler.Step("Set page title"))
        {
            ViewBag.Title = "Home Page";
        }

        using (profiler.Step("Doing complex stuff"))
        {
            using (profiler.Step("Step A"))
            { // something more interesting here
                Thread.Sleep(100);
            }
            using (profiler.Step("Step B"))
            { // and here
                Thread.Sleep(250);
            }
        }

        using (profiler.Step("Set message"))
        {
            ViewBag.Message = "Welcome to ASP.NET MVC!";
        }

        return View();
    }
}
```
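The comment "it's ok if this is null" works because of two C# facts: an extension method can be invoked on a null receiver, and `using (null)` is legal (no Dispose call is made). One way to build a null-tolerant `Step` on top of that is sketched below; `ToyProfiler`, `ToyProfilerExtensions` and `NullSafeDemo` are hypothetical names, not MiniProfiler's API.

```csharp
using System;

// Hypothetical stand-in for a profiler; NOT MiniProfiler's real class.
public class ToyProfiler
{
    public int StepsStarted { get; private set; }

    // A real profiler would start a timer here and stop it in Dispose().
    internal IDisposable StartStep(string name)
    {
        StepsStarted++;
        return new StepHandle();
    }

    sealed class StepHandle : IDisposable
    {
        public void Dispose() { }
    }
}

public static class ToyProfilerExtensions
{
    // Extension methods may be called on a null receiver, so Step() can simply
    // return null when there is no profiler for this request.
    public static IDisposable Step(this ToyProfiler profiler, string name)
    {
        return profiler == null ? null : profiler.StartStep(name);
    }
}

public static class NullSafeDemo
{
    // Same calling code whether or not profiling is active; returns the steps recorded.
    public static int Run(ToyProfiler profiler)
    {
        using (profiler.Step("Set page title")) // using (null) is legal C#: Dispose is skipped
        {
            // page work goes here
        }
        return profiler == null ? 0 : profiler.StepsStarted;
    }
}
```

The design choice is that calling code never branches on "is profiling on?"; the cost of a disabled profiler is one null check per step.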

Now, run the application and click on the chiclet in the corner. Open your mouth and sit there, staring at your screen with your mouth agape.


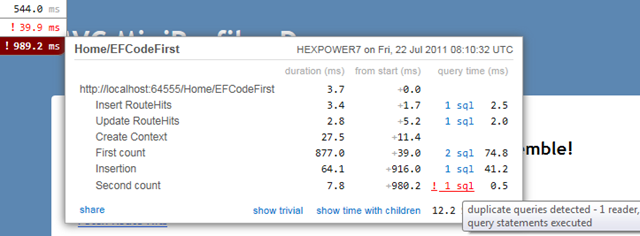

That's hot. Notice how the nested using statements show up nested in the popup, with their timings aggregated.
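How can plain `using` blocks produce a nested tree? A minimal sketch, assuming a per-request timer that keeps a stack of open steps: each `Step` becomes a child of whatever is on top of the stack, and disposing the handle stops its stopwatch and pops it. `NestedTimer` is a hypothetical teaching class, not MiniProfiler's implementation.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Text;

// Hypothetical nested step timer: a teaching sketch, not MiniProfiler's implementation.
public class NestedTimer
{
    public class Node
    {
        public string Name;
        public int Depth;
        public TimeSpan Duration;
        public readonly List<Node> Children = new List<Node>();
    }

    readonly Node root = new Node { Name = "Request", Depth = 0 };
    readonly Stack<Node> open = new Stack<Node>();

    public NestedTimer() { open.Push(root); }

    public Node Root { get { return root; } }

    // Starts a child of whichever step is currently open; disposing the handle closes it.
    public IDisposable Step(string name)
    {
        var parent = open.Peek();
        var node = new Node { Name = name, Depth = parent.Depth + 1 };
        parent.Children.Add(node);
        open.Push(node);
        return new Handle(this, node);
    }

    sealed class Handle : IDisposable
    {
        readonly NestedTimer owner;
        readonly Node node;
        readonly Stopwatch watch = Stopwatch.StartNew();
        bool disposed;

        public Handle(NestedTimer owner, Node node) { this.owner = owner; this.node = node; }

        public void Dispose()
        {
            if (disposed) return;
            disposed = true;
            watch.Stop();
            node.Duration = watch.Elapsed;
            owner.open.Pop(); // using blocks close innermost-first, so this node is on top
        }
    }

    // One indented line per step, children listed under their parent.
    public string Render()
    {
        var sb = new StringBuilder();
        foreach (var child in root.Children) Append(child, sb);
        return sb.ToString();
    }

    static void Append(Node node, StringBuilder sb)
    {
        sb.Append(' ', (node.Depth - 1) * 2)
          .Append(node.Name).Append(" (")
          .Append(node.Duration.TotalMilliseconds.ToString("F1")).AppendLine(" ms)");
        foreach (var child in node.Children) Append(child, sb);
    }
}
```

A parent's duration naturally includes its children, because its stopwatch starts before theirs and stops after them; that is one simple way to get the "aggregated" timings seen in the popup.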

If you want to measure database access (where MiniProfiler really shines), you can use their ProfiledDbConnection, or you can hook it into Entity Framework Code First with the ProfiledDbProvider.

If you manage connections yourself or do your own database access, you can get profiled connections manually:

```csharp
public static MyModel Get()
{
    // GetConnection() is your own method that returns the underlying DbConnection
    var conn = ProfiledDbConnection.Get(GetConnection());
    return ObjectContextUtils.CreateObjectContext<MyModel>(conn);
}
```
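The idea behind a wrapper like ProfiledDbConnection is the decorator pattern: an object with the same interface as the real connection that times and records each command before delegating to it. ADO.NET's `DbConnection` surface is far too large for a short example, so the sketch below applies the same pattern to a hypothetical `IQueryRunner` interface; every name in it is illustrative.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

// Hypothetical abstraction standing in for a database connection/command.
public interface IQueryRunner
{
    int Execute(string sql);
}

// One timed query, as a profiler might record it.
public class QueryTiming
{
    public string Sql;
    public TimeSpan Duration;
}

// Decorator: same interface as the inner runner, but times and records every call.
public class ProfiledQueryRunner : IQueryRunner
{
    readonly IQueryRunner inner;
    public readonly List<QueryTiming> Timings = new List<QueryTiming>();

    public ProfiledQueryRunner(IQueryRunner inner) { this.inner = inner; }

    public int Execute(string sql)
    {
        var watch = Stopwatch.StartNew();
        try
        {
            return inner.Execute(sql);
        }
        finally
        {
            // Recorded even if the inner call throws.
            watch.Stop();
            Timings.Add(new QueryTiming { Sql = sql, Duration = watch.Elapsed });
        }
    }
}

// Fake runner for the example; a real one would hit the database.
public class FakeRunner : IQueryRunner
{
    public int Execute(string sql) { return sql.Length; }
}
```

Because callers only see `IQueryRunner`, code that receives the profiled runner does not change, which is the appeal of wrapping the connection rather than editing every data access call.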

Or, if you are using things like Entity Framework Code First, just add their DbProvider to the web.config:

```xml
<system.data>
  <DbProviderFactories>
    <remove invariant="MvcMiniProfiler.Data.ProfiledDbProvider" />
    <add name="MvcMiniProfiler.Data.ProfiledDbProvider" invariant="MvcMiniProfiler.Data.ProfiledDbProvider"
         description="MvcMiniProfiler.Data.ProfiledDbProvider"
         type="MvcMiniProfiler.Data.ProfiledDbProviderFactory, MvcMiniProfiler, Version=1.6.0.0, Culture=neutral, PublicKeyToken=b44f9351044011a3" />
  </DbProviderFactories>
</system.data>
```

Then tell EF Code First about the connection factory that's appropriate for your database.

I've spent the last few evenings on Skype with Sam trying to get the EF Code First support to work as cleanly as possible. You can see the checkins over the last few days as we bounced back and forth. Thanks for putting up with me, Sam!

Here's how to wrap SQL Server Compact Edition in your Application_Start:

```csharp
protected void Application_Start()
{
    AreaRegistration.RegisterAllAreas();
    RegisterGlobalFilters(GlobalFilters.Filters);
    RegisterRoutes(RouteTable.Routes);

    //This line makes SQL Formatting smarter so you can copy/paste
    // from the profiler directly into Query Analyzer
    MiniProfiler.Settings.SqlFormatter = new SqlServerFormatter();

    var factory = new SqlCeConnectionFactory("System.Data.SqlServerCe.4.0");
    var profiled = new MvcMiniProfiler.Data.ProfiledDbConnectionFactory(factory);
    Database.DefaultConnectionFactory = profiled;
}
```

Or I could have used SQL Server proper:

```csharp
var factory = new SqlConnectionFactory("Data Source=.;Initial Catalog=tempdb;Integrated Security=True");
```

See here where I get a list of People from a database:


See where it says "1 sql"? If I click on that, I see exactly what happened and how long it took.

It's even cooler with more complex queries in that it can detect N+1 issues as well as duplicate queries. Here we're hitting the database 20 times with the same query!
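One plausible way to flag duplicate and N+1 queries is to group the SQL text recorded during a request and report any text that ran more than once. With parameterized commands, an N+1 loop produces identical text with different parameter values, so it shows up as a duplicate too. A minimal sketch; `DuplicateQueryDetector` is a hypothetical name, and this is not necessarily the heuristic MiniProfiler uses.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical duplicate-query detector; not MiniProfiler's actual implementation.
public static class DuplicateQueryDetector
{
    // Returns each SQL text executed more than once in a request, with its count,
    // most frequent first.
    public static List<KeyValuePair<string, int>> FindDuplicates(IEnumerable<string> executedSql)
    {
        return executedSql
            .Select(sql => sql.Trim())                      // ignore leading/trailing whitespace
            .GroupBy(sql => sql, StringComparer.Ordinal)    // exact text match
            .Where(g => g.Count() > 1)
            .Select(g => new KeyValuePair<string, int>(g.Key, g.Count()))
            .OrderByDescending(p => p.Value)
            .ToList();
    }
}
```

The "20 times with the same query" case would surface here as a single entry with a count of 20.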

Here's a slightly more interesting example that mixes many database accesses on one page.

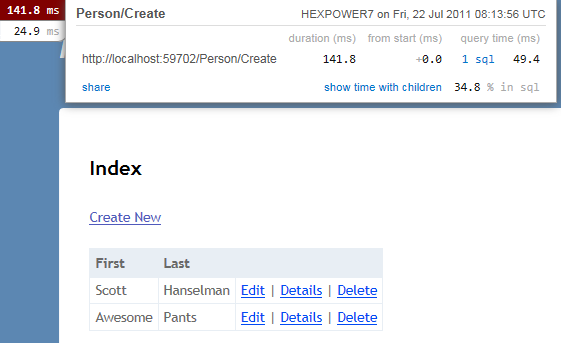

Notice that there are THREE chiclets in the upper corner there. The profiler captures GETs and POSTs, and can watch AJAX calls! Here's a simple POST, then REDIRECT/GET (the PRG pattern) example, as I've just created a new Person:

Notice that the POST is 141ms and the GET is 24.9ms. I can click deeper into each request to see smaller, trivial timings and child steps on large pages.

I think that this amazing little profiler has become, almost overnight, absolutely essential to ASP.NET MVC.

I've never seen anything like it on another platform, and once you've used it you'll have trouble NOT using it! It provides such clean, clear insight into what is going on in your site, even just out of the box. When you go and manually add more detailed Step()s, you'll be amazed at how much it can tell you about your site. MiniProfiler works with Web Forms as well, because it's all about ASP.NET! So many issues pop up in production that can only be found with a profiler like this.

Be sure to check out the MiniProfiler site for all the details and to download samples with even more detail. There are lots of great features and settings to change, as seen in just their sample Global.asax.cs.

Stop what you're doing right now, and go instrument your site with MiniProfiler! Then go thank Jarrod Dixon, Marc Gravell, Sam Saffron and the folks at StackExchange for their work.

UPDATE - July 24th

Some folks don't like the term "profiler" to label what the MiniProfiler does. Others don't like the sprinkling of using() statements and consider them useless, perhaps like comments. I personally disagree, but that said, Sam has created a new blog post that shows how to automatically instrument your Controller Actions and View Engines. I'll work with him to make a smarter NuGet package so this is all done automatically, or easily optionally.

This is done for Controllers with the magic of the MVC3 Global Action Filter:

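```csharp
using System;
using System.Web.Mvc;
using MvcMiniProfiler;

// Register once in Global.asax: GlobalFilters.Filters.Add(new ProfilingActionFilter());
// A globally registered filter is a single instance shared by all requests, so the
// current step is kept in HttpContext.Items rather than in an instance field.
public class ProfilingActionFilter : ActionFilterAttribute
{
    const string StepKey = "ProfilingActionFilter.Step";

    public override void OnActionExecuting(ActionExecutingContext filterContext)
    {
        var mp = MiniProfiler.Current;
        if (mp != null)
        {
            filterContext.HttpContext.Items[StepKey] = mp.Step("Controller: " + filterContext.Controller.ToString() + "." + filterContext.ActionDescriptor.ActionName);
        }
        base.OnActionExecuting(filterContext);
    }

    public override void OnActionExecuted(ActionExecutedContext filterContext)
    {
        base.OnActionExecuted(filterContext);
        var step = filterContext.HttpContext.Items[StepKey] as IDisposable;
        if (step != null)
        {
            step.Dispose();
        }
    }
}
```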

And for view engines, with a simple wrapping ViewEngine. Neither of these is invasive to your code, and both can be hooked up in your Global.asax.

```csharp
using System.IO;
using System.Web.Mvc;
using MvcMiniProfiler;

// Wraps an existing view engine so every view and partial it renders gets its own profiler step.
// Register it in Global.asax by replacing each engine in ViewEngines.Engines with a wrapped one.
public class ProfilingViewEngine : IViewEngine
{
    class WrappedView : IView
    {
        readonly IView wrapped;
        readonly string name;
        readonly bool isPartial;

        public WrappedView(IView wrapped, string name, bool isPartial)
        {
            this.wrapped = wrapped;
            this.name = name;
            this.isPartial = isPartial;
        }

        public IView Inner { get { return wrapped; } }

        public void Render(ViewContext viewContext, TextWriter writer)
        {
            // Step() tolerates a null MiniProfiler.Current, so this is safe when profiling is off
            using (MiniProfiler.Current.Step("Render" + (isPartial ? " partial" : "") + ": " + name))
            {
                wrapped.Render(viewContext, writer);
            }
        }
    }

    readonly IViewEngine wrapped;

    public ProfilingViewEngine(IViewEngine wrapped)
    {
        this.wrapped = wrapped;
    }

    public ViewEngineResult FindPartialView(ControllerContext controllerContext, string partialViewName, bool useCache)
    {
        var found = wrapped.FindPartialView(controllerContext, partialViewName, useCache);
        if (found != null && found.View != null)
        {
            found = new ViewEngineResult(new WrappedView(found.View, partialViewName, isPartial: true), this);
        }
        return found;
    }

    public ViewEngineResult FindView(ControllerContext controllerContext, string viewName, string masterName, bool useCache)
    {
        var found = wrapped.FindView(controllerContext, viewName, masterName, useCache);
        if (found != null && found.View != null)
        {
            found = new ViewEngineResult(new WrappedView(found.View, viewName, isPartial: false), this);
        }
        return found;
    }

    public void ReleaseView(ControllerContext controllerContext, IView view)
    {
        // Hand the inner engine the view it created, not our wrapper
        var wrappedView = view as WrappedView;
        wrapped.ReleaseView(controllerContext, wrappedView != null ? wrappedView.Inner : view);
    }
}
```

Get all the details on how to (more) automatically instrument your code with the MiniProfiler (or perhaps, the MiniInstrumentor?) over on Sam's Blog.

Enjoy!

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.


Do I have to modify all my code with calls like this?

```csharp
using (profiler.Step("Doing complex stuff")) { }
```

Assume you have a hundred-page web application: I would have to modify every page that I want profiled.

And do I keep the calls to the profiler? After the slow code is fixed, must I remove them?

Again Sam Saffron makes a bold, unfounded statement. First on InfoQ about o/r mappers and now this. I'm sure the profiler is nice and does a great job for what it is built for. But please, Sam, leave the bold claims out of the arena: you make your work and yourself only look like a marketing puppet.

I haven't tried the profiler that is the subject of this post, but I can't see how littering code with using statements is ever a good idea. Code should be clean and convey the intent of what it is trying to do, rather than accommodating cross-cutting concerns such as profiling (or logging) within method bodies.

Wouldn't an attribute based approach where you decorate the operations (methods) that you want to profile be a better approach?

Rich

@samsaffron, why do you need all the IL voodoo? intercepting the dbproviderfactory and wrap everything is all you need. The wrapping itself is hardly a perf. dip. Everything that happens after that can be switched on/off, be handled in multi-threaded manner, be handled off-site.

> Our open-source profiler is perhaps the best and most comprehensive production web page profiler out there for any web platform.

> Again Sam Saffron makes a bold, unfounded statement. First on InfoQ about o/r mappers and now this. I'm sure the profiler is nice and does a great job for what it is built for. But please, Sam, leave the bold claims out of the arena: you make your work and yourself only look like a marketing puppet.

I totally agree with Frans here, marketing puppet comment included. I think Jeff Atwood and the guys are pushing too hard and making claims that may bring short-term wins but leave a funny taste in my mouth.

Yes, I think that it is a good tool, but saying it is the "best and most comprehensive production web page profiler out there for any web platform" is rather insulting, a little big-headed and not true. There are a lot of products out there, and this is simply another tool to be used appropriately when needed.

What are you trying to sell?

@nicklarsen I agree that you only profile where you need to, but it still means that you put using statements where you are profiling, which detracts from code clarity.

Performance is definitely a key intent when I write code, and I'm perfectly content with adding explicit demonstration that I have investigated the performance. As it happens, I've found this is also a very *effective* way of instrumenting: most automatic instrumentation is either too high-level or too low-level to make it clear what is happening. Here, you can instrument to the level that *you* decide, which can be none at all if you so wish.

Another thing we were doing was to aggregate the perf data by entry point (request-url or Controller/action combo), by out-of-process calls, etc. Then using simple statistical analysis (avg, std, etc.) we were able to get good overview of what is going on, regressions, and more.
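The aggregation described above (grouping timings by entry point and summarizing them with mean and standard deviation) can be sketched in a few lines. This assumes samples arrive as (entry point, milliseconds) pairs; `PerfAggregator` and `EntryPointStats` are hypothetical types, not part of MiniProfiler, and the standard deviation is the population form.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical per-entry-point summary.
public class EntryPointStats
{
    public string EntryPoint;
    public int Count;
    public double MeanMs;
    public double StdDevMs;   // population standard deviation
}

public static class PerfAggregator
{
    // samples: (entry point such as "Home/Index", duration in milliseconds)
    public static List<EntryPointStats> Aggregate(IEnumerable<KeyValuePair<string, double>> samples)
    {
        return samples
            .GroupBy(s => s.Key)
            .Select(g =>
            {
                var values = g.Select(s => s.Value).ToList();
                double mean = values.Average();
                double variance = values.Sum(v => (v - mean) * (v - mean)) / values.Count;
                return new EntryPointStats
                {
                    EntryPoint = g.Key,
                    Count = values.Count,
                    MeanMs = mean,
                    StdDevMs = Math.Sqrt(variance)
                };
            })
            .OrderByDescending(s => s.MeanMs)   // slowest entry points first
            .ToList();
    }
}
```

Comparing these numbers between deployments is one simple way to spot the regressions the comment mentions.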

I am definitely going to give this MiniProfiler thing a very close look come my next .NET based web project.

Of course, you can control granularity by refactoring any statement you want profiled into its own little method call, but I fail to see how that's much cleaner than putting in a profiling statement. Either way, you're changing your code to support profiling.

I think the benefit of this approach is that the control is in your hands. Don't want to litter your code with profiling code? Fine. Don't.

I also don't have a problem with adding the profiling code. It serves a specific purpose, is easily understandable, and can be removed at will when they aren't needed anymore (i.e., they're delete key friendly). Objections to this seem purity based rather than pragmatic.

I presume this is really intended, more or less, just for the 'C' portion of an MVC application, as you'd generally separate your business components from view models in an application of moderate complexity... and at that point, why would I want said components knowing anything about MVC, ASP.NET or, in general, what platform is consuming them?

Profilers require no intervention by a developer to measure pieces of code and are capable of measuring everything. Tools like AviCode (that'd be one of MS' products), HP's suite of tools, Compuware's Dynatrace (recent purchase on their part), amongst others offer profiling. Further, calling this the best webpage profiler? Seriously? Not only do those tools offer a helluva lot more on the server side, most, if not all, can also profile client script and report that back as well so at best it's half baked. They also allow you to dial up and down the degree to which you're profiling...without having to litter your code.

I'll keep my big boy toys, thanks ;)

The best thing about this profiler though, is that monitoring performance becomes SO easy that it'd be hard for developers NOT to optimise as many pages as they could. When the information is available all the time, in your face, the friction that goes with finding monitoring/profiling tools and chasing down their output goes completely away. I'd truly be surprised if developers weren't competing to optimise as much as they could as a sort of game.

Props to Sam, Marc, and Jarrod for another great tool, and the SE crew as a whole for continuing to make the web a better place, bit by bit :P

Those are fighting words. I could argue that at the point you are serving 6 million dynamic pages a day you are not a trivial application. Stack Overflow has its complexity and there are some fairly tricky subsystems.

Also, to those claiming I am a "marketing shill" with totally unfounded delusions of grandeur: let's take a step back and try to figure out what problem we are solving.

As a developer on a high scale website I want to be able to tell what is going on, in production, as I browse through my website. Meaning ... I want to tell how long it took the server to generate the page I am looking at AND I want every millisecond the web/db server were working, accounted for. This accountability has resulted in some huge performance improvements on our sites.

I don't care if I am on a holiday in Hawaii, I still want to see this information coupled with the page I am looking at. I don't want to have to go to another dashboard to look up this info. I don't want to attach any profiler to my application which often destabilises and crashes it. I just want ubiquitous - always on - production profiling

This approach is key for us, as all of the team are heavy consumers of the site we are building. If I see that a page took 200ms it upsets me. I can quickly turn that anger into something productive by having a proper account of "why?"

I believe we are the first to bring this approach and attitude to the market. Of course, being first to market, makes you implicitly "best". My intention with this hyperbole was to drive other vendors and platforms to build similar tools. Which in turn will make the Internet better. This has worked, Ayende is working on his set of tools and the concept has been ported to Google App Engine.

Think of it as the old "this is how long it took to render the page" thing you used to see in footer of pages (on steroids). I may be wrong here, in fact I am very curious if there are others that solved this problem in a more elegant way. We searched for a long time and could not find anything that fits the bill.

This in no way is a replacement for "bird's-eye-view" monitoring/profiling, which we do by analysing haproxy logs. It is not a replacement for server monitoring, which we do using Nagios and other tools. It is not a replacement for the deep analysis you get from intrusive profilers like ANTS (though in reality we use them less and less every day, since we have stuff instrumented).

avicode is focused on isolating root causes of exceptions, something we do with elmah. Dynatrace does not couple the profiling info with the page rendered, you use another tool to view the results. NewRelic is focused on the birds eye view and collection of information for later analysis. There are probably thousands of profiling and monitoring suites out there but very few that have a similar approach.

Similarly, you would have to be mad to use something like dottrace or ants in production, default on. These tools are not designed with that use case in mind. There is a massive list of profilers that work great in dev and fail in prd due to being too intrusive.

I think a lot of the upset is due to the word "profiler" ... it just can mean too many things... perhaps I should have used the acronym UAOPP when referring to this work to avoid confusion :)

I admit, this tool will probably not help you that much, if you have no database access AND have 99% of your application living in 3rd party libraries. But I would argue that at that point you probably have bigger problems anyway.

Before the next person argues that MiniProfiler is not the best-in-class UAOPP, please include a counterexample... otherwise your complaint is totally unfounded.

UAOPP = ubiquitous - always on - production profiling

Plus all this "using profiler" stuff scattered everywhere... seems a bit alpha.

Described here

You can get pretty damn far without sprinkling anything in your code, see: http://samsaffron.com/archive/2011/07/25/Automatically+instrumenting+an+MVC3+app

I think your MiniProfiler is simply great. It's very simple to plug in anywhere, with as much detail as you really need; and with the latest improvements, where you plug it directly into an MVC ActionFilter, it's also easy to embed without making any code changes.

Another interesting point is that you can put custom descriptions into the profiling output, which definitely helps.

Your approach is great. I think the using() statement is great because it helps create hierarchies of profiled areas and, when necessary, disposes things without you having to take care of exception handling and so on... very clean from this point of view.

Thank you for this great package.

Cheers

Have you talked to them about the issue?

http://localhost:61679/MySite/mini-profiler-results?id=1f63cfb8-8154-4a12-bb1a-4a2969860ed2&popup=1

I'm not that familiar with MVC, but I think it has something to do with routing? (The strange thing is that the .js resources load just fine, and no routing is done in web.config.)

What do I do now?

Grtx, Jeroen.

> Those are fighting words. I could argue that at the point you are serving 6 million dynamic pages a day you are not a trivial application. Stack Overflow has its complexity and there are some fairly tricky subsystems.

If I'm serving up 6mil pages a day and I'm serving up a blank page, the site is trivial, no? I'm sorry, but no, SO does not compare in complexity to systems that, say, do purchasing or financial transactions, that have to deal with numerous backend systems and still deliver content within competitive thresholds, lest they be ranked at the bottom of an arbitrary list. I'm not saying you can't write complex code or systems, or what have you. I'm saying a discussion board (which is effectively what SO is) simply does not compare. Can you honestly say your site is as complex as something like BofA, Delta, Amazon or eBay? I'm assuming you're a reasonable person and the answer to that is no.

There's a difference between popularity and complexity and those that have to deal with both have additional burdens.

> I don't care if I am on a holiday in Hawaii, I still want to see this information coupled with the page I am looking at.

The points you raise demonstrate that you probably haven't seen a lot of these tools, turned their dials up and down and seen what they can do. They provide ALWAYS ON profiling. Not at every line of code, though if that's elected, it can be enabled as well. You don't provide that. They also provide this information across every server in a farm, consolidated into nice little charts and graphical views, so that you can see at run time which systems are suffering bottlenecks or whether it's a particular server, etc. So with what is presented here, I'd still have to write a tool that says "oh, server A isn't serving up pages fast enough", which would lead me to find out that some ops person installed a security tool for PCI compliance that caused an inordinate amount of I/O, which led to that particular server being slow.

Why should I have to actively engage (i.e. open the site in a browser) to get that performance information? These tools coalesce data, they report and alert on it. The only time you need to touch it is if you need more detail and need to scale up the extent to which you're profiling and I can take that to any arbitrary line of code - without having the side effects of mangling my code base or introducing artificial dependencies in layers to which they don't belong.

I'd rather sit on the beach and not worry about it unless I get an SMS or email that says there's a problem, and then be able to trace all of those transactions through the system and understand what happened. Yet another gap: you only get 'this is now, this is for me' numbers, not 'this is for Bob, Sally and Joe in the last 30 minutes'.

> I want to tell how long it took the server to generate the page I am looking at AND I want every millisecond the web/db server were working, accounted for. This accountability has resulted in some huge performance improvements on our sites.

The only way you could achieve this with your tool is to literally wrap this around every single statement and if you're doing that, you're introducing a dependency on ASP.NET + MVC in other layers that should have no knowledge of such dependencies. This is not something I need to suffer through with any of those tools.

I can't really dispute the statement that you've gained improvements or not from the tool, I'm sure you have - but at best you only get generic areas to look at. Again, with proper tools, I get line level resolution of problem areas - and I should be doing that BEFORE it ever hits production. I know exactly what to target and why.

I'd also be willing to wager a copy of RedGate that if I ran it on your application in a development environment, I could find ...oh, let's say at least 3...concrete areas for improvement that you have not caught with your tool. (kind of flying blind here, but I'm willing to part with a few $)

> I believe we are the first to bring this approach and attitude to the market. Of course, being first to market, makes you implicitly "best". My intention with this hyperbole was to drive other vendors and platforms to build similar tools. Which in turn will make the Internet better. This has worked, Ayende is working on his set of tools and the concept has been ported to Google App Engine.

First of all, it's nothing new or novel. It's a stopwatch. It's QueryPerformanceCounter wrapped around blocks of code. Even my current customer has very similar code in a codebase that's ooooh, what, 5 years old? There's a reason it's all ripped out and not used anymore. It's because in a large scale system, this approach does not scale. Flat out. It sucks. If you want to call it the "best" for simple MVC applications that have no business logic (again, dependencies), then I guess I can stipulate that, but not the first.

> Think of it as the old "this is how long it took to render the page" thing you used to see in footer of pages (on steroids). I may be wrong here, in fact I am very curious if there are others that solved this problem in a more elegant way. We searched for a long time and could not find anything that fits the bill.

Telling me how long it took to render the page is useless ;) Telling me exactly WHAT caused it to render slowly is what I care about. People have solved the problem. A LOT of vendors have and a lot of their solutions are pretty much PFM. As I phrased it in an off-thread conversation, if you're presented with finding THE critical line of code that's causing a problem in a system that leaks $15-20kish a MINUTE, is this the tool you want? If so, good luck, have fun. Then again, that's the difference between SO and a complex, revenue generating site. You're not pissing off customers and not losing any substantive amount of money if you're sluggish for an hour. In that context, a tool like this is ok, but still not the best.

> Similarly, you would have to be mad to use something like dottrace or ants in production, default on. These tools are not designed with that use case in mind. There is a massive list of profilers that work great in dev and fail in prd due to being too intrusive.

No kidding. You SHOULD be using them before you release to production, and DynaTrace is both developer- and production-oriented. The other tools pick up where those leave off and provide a lot more flexibility and configuration with regard to the level at which they profile the application.

This is also where (as I tweeted to you), you should be designing your infrastructure with profiling and monitoring in mind, not just trying to throw it in the middle of a !@#)(storm and hoping all works well.

> avicode is focused on isolating root causes of exceptions

Did you just read the product slicks or have you seen it work? It's focused on both.

> Dynatrace does not couple the profiling info with the page rendered, you use another tool to view the results

We have differing views on this I suppose. I really don't care about looking at a page. That adds no value to me when I can use said single tool to see everything, every page, period.

You also kinda left out HP ;)

> There are probably thousands of profiling and monitoring suites out there but very few that have a similar approach.

For good reason. Hunting and pecking is not an effective way to spend time to resolve production performance problems.

> I think a lot of the upset is due to the word "profiler" ... it just can mean too many things... perhaps I should have used the acronym UAOPP when referring to this work to avoid confusion :)

If you called it "always available and always on, but unable to pinpoint the exact line of code that represents a performance issue without exceptional effort on the part of the developer and introducing unnecessary dependencies in your non-web assemblies (you do have those, right?)", that sounds like an appropriate title. AAAAOBUTPTELOCTRAPIWEETPOTDAIUDIYNWAYDHTR. Helluva long acronym.

> I admit, this tool will probably not help you that much, if you have no database access AND have 99% of your application living in 3rd party libraries. But I would argue that at that point you probably have bigger problems anyway.

LOL - really? This tool won't help you if you have it in your own libraries and have implemented separation properly. If you've kludged everything together in a monolithic application with no notion of tiering, I guess, but ...uh, that's where you have bigger problems and/or something so relatively simple to pretty much any application I've ever worked on that it's a moot point.

When you have a set of requirements or user stories that can sit in a stack of paper about a foot high with, oh, I don't know...business rules. Then we can talk, but I can only assume by a lot of this that your internal architecture is little more than caching wrapped around a database.

I'll just leave it at this and call it a day - if you're leaking revenue and goodwill, is this the tool you want to help you find a problem?

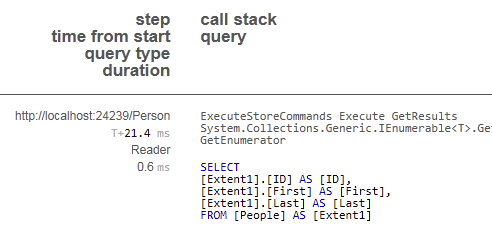

One thing I don't like right now is the useless "call stack" on the query popup... I wish it would tell me the actual method call stack so I can make sure I get to the right query. It's always GetEnumerator or GetResults or whatever, none of my actual methods. That said, it's still awesome and the nesting really seals the deal.

It also really showed me the issues with EF lazy loading and that you have to be pretty darn careful. In fact, it's really convinced me to just disable it and explicitly fetch related entities if I need them, to avoid duplicate queries. It's also eye-opening because I thought EF would reuse entities already in memory on the second, duplicate query, but maybe not; maybe it wants to be sure it gets the absolute latest version? I have fixed some duplicate issues by using the .Local property, but I wish it were clearer whether that falls back to a query when it doesn't find the in-memory entity(ies).

Nice,

This is already built in; you can exclude assemblies, types, methods and so on, see:

MiniProfiler.Settings.ExcludeAssembly()

MiniProfiler.Settings.ExcludeType()

MiniProfiler.Settings.ExcludeMethod()
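The idea behind those exclusion settings is to drop framework frames so the query popup shows the methods you wrote instead of GetEnumerator or GetResults. A minimal sketch of that filtering over hypothetical frame records; `CallStackFilter` and `StackFrameInfo` are illustrative names, not MiniProfiler's types, and real frames could come from `System.Diagnostics.StackTrace`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical frame record.
public class StackFrameInfo
{
    public string AssemblyName;
    public string TypeName;
    public string MethodName;
}

// Hypothetical filter illustrating the exclusion idea; not MiniProfiler's implementation.
public class CallStackFilter
{
    readonly HashSet<string> assemblies = new HashSet<string>(StringComparer.Ordinal);
    readonly HashSet<string> types = new HashSet<string>(StringComparer.Ordinal);
    readonly HashSet<string> methods = new HashSet<string>(StringComparer.Ordinal);

    public CallStackFilter IgnoreAssembly(string name) { assemblies.Add(name); return this; }
    public CallStackFilter IgnoreType(string fullName) { types.Add(fullName); return this; }
    public CallStackFilter IgnoreMethod(string name) { methods.Add(name); return this; }

    // Drops excluded frames and joins the remaining method names in their original order.
    public string Describe(IEnumerable<StackFrameInfo> frames)
    {
        var kept = frames.Where(f => !assemblies.Contains(f.AssemblyName)
                                  && !types.Contains(f.TypeName)
                                  && !methods.Contains(f.MethodName))
                         .Select(f => f.MethodName);
        return string.Join(" ", kept);
    }
}
```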

@Jeroen

see this ticket

Totally off-topic question: I see you always put scripts inside the head section of the page. You are doing it here as well.

Nowadays I always add them right before the closing body tag. Should I continue doing that, or do you think it doesn't make much difference?

Thanks for your great article. I tried it, and it works perfectly, but the SQL queries are not shown.

Could you please send me source code that is configured for EF Code First?

Try to remove the connection string from web.config (if you have it there). Maybe this will be helpful.

Scott, I have a question about the approach you describe for using the profiled provider and connection factory. Setting Database.DefaultConnectionFactory enables SQL profiling only when you rely on the Code First conventions. If you specify a custom connection string in web.config/app.config, you have to specify the provider as well, and in that case EF does not use the DefaultConnectionFactory at all, so the profiler shows no SQL traces.

Is there a way to get the connection or connection string before actually instantiating the DbContext? (I mean through EF so that I can actually utilize the conventions) or will I have to rewrite the connection string resolving on my own?

I saw that EF 4.1 Update 1 introduced DbContextInfo and IDbContextFactory<>, but I'm not sure how to use them in this scenario.

