Pinching pennies when scaling in The Cloud

Running a site in the cloud and paying for CPU time with pennies and fractions of pennies is a fascinating way to profile your app. I mean, we all should be profiling our apps, right? Really paying attention to what the CPU does, how many database connections are made, and what memory usage is like. But we don't. If that code doesn't affect your pocketbook directly, you're less likely to bother.

Interestingly, I find myself performing optimizations driving my hybrid car or dealing with my smartphone's limited data plan. When resources are truly scarce and (most importantly) money is on the line, one finds ways - both clever and obvious - to optimize and cut costs.

Sloppy Code in the Cloud Costs Money

I have an MSDN Subscription which includes quite a bit of free Azure Cloud time. Make sure you've turned this on if you have MSDN yourself. This subscription is under my personal account and I pay if it goes over (I don't get a free pass just because I work for the cloud group), so I want to pay as little as possible.

One of the classic and obvious rules of scaling a system is "do less, and you can do more of it." When you apply this idea to the money you're paying to host your system in the cloud, you want to do as little as possible to stretch your dollar. You pay pennies for CPU time, pennies for bandwidth and pennies for database access - but it adds up. If you're opening database connections in a loop or transferring more data than is necessary, you'll pay.

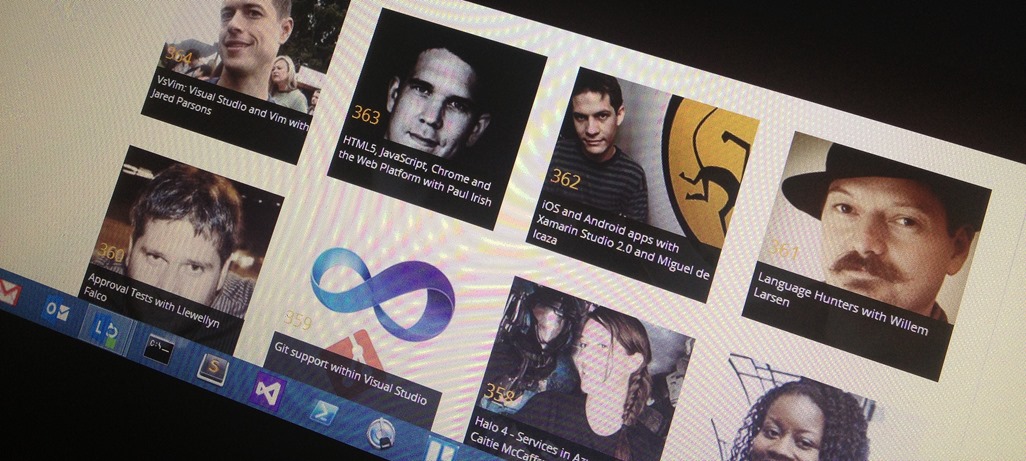

I recently worked with designer Jin Yang and redesigned this blog, made a new home page, and the Hanselminutes Podcast site. In the process we had the idea for a more personal archives page that makes the eponymous show less about me and visually more about the guests. I'm in the process of going through 360+ shows and getting pictures of the guests for each one.

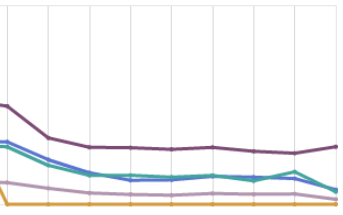

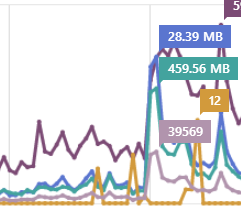

I launched the site and I think it looks great. However, I noticed immediately that the Data Out was REALLY high compared to the old site. I host the MP3s elsewhere, but the images put out almost 500 megs in just hours after the new site was launched.

You can guess from the figure when I launched the new site.

I *rarely* have to pay for extra bandwidth, but this wasn't looking good. One of the nice things about Azure is that you can view your bill any day, not just at the end of the month. I could see that I was inching up on the outgoing bandwidth. At this rate, I was going to have to pay extra at the end of the month.

I thought about it, then realized, duh, I'm loading 360+ images every time someone hits the archives page. It's obvious, of course, in retrospect. But remember that I moved my site into the cloud for two reasons.

- Save money

- Scale quickly when I need to

I added caching for all the database calls, which was trivial, but thought about the images thing for a while. I could add paging, or I could make a "just in time" infinite scroll. I dislike paging in this instance as I think folks like to CTRL-F on a large page when the dataset isn't overwhelmingly large.
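As a rough illustration of what caching database calls buys you, here is a minimal sketch of an in-memory cache with a time-to-live. It is not the code running on the site (which is ASP.NET); `createCache`, `getOrLoad`, and `loadShows` are hypothetical names, and the loader simply stands in for a real database query.

```javascript
// Minimal in-memory cache with a time-to-live (TTL).
// `createCache`, `getOrLoad`, and `loadShows` are hypothetical names for illustration.
function createCache(ttlMs, now = () => Date.now()) {
  const entries = new Map(); // key -> { value, expiresAt }

  return {
    getOrLoad(key, loader) {
      const hit = entries.get(key);
      if (hit && hit.expiresAt > now()) {
        return hit.value; // still fresh: skip the expensive call
      }
      const value = loader(); // cache miss or expired entry: do the real work once
      entries.set(key, { value, expiresAt: now() + ttlMs });
      return value;
    },
  };
}

// Usage: the loader stands in for a database query.
let databaseCalls = 0;
function loadShows() {
  databaseCalls += 1;
  return ["show 363", "show 362"];
}

const cache = createCache(60 * 1000); // keep results for one minute
cache.getOrLoad("shows", loadShows);
cache.getOrLoad("shows", loadShows);
console.log(databaseCalls); // only the first call reached the "database"
```

The trade-off is staleness: a longer TTL means fewer database hits but a longer wait before new content shows up.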

ASIDE: It's on my list to add "topic tagging" and client-side sorting and filtering for the shows on Hanselminutes. I think this will be a nice addition to the back catalog. I'm also looking at better ways to utilize the growing transcript library. Any thoughts on that?

The easy solution was to lazy load the images as the user scrolls, thereby only using bandwidth for the images you see. I looked at Mika Tuupola's jQuery Lazy Load plugin as well as a number of other similar scripts. There's also Luis Almeida's lightweight Unveil if you want fewer bells and whistles. I ended up using the standard Lazy Load.

Implementation was trivial. Add the script:

<script src="//ajax.aspnetcdn.com/ajax/jQuery/jquery-1.7.2.min.js" type="text/javascript"></script>
<script src="/jquery.lazyload.min.js" type="text/javascript"></script>

Then, give the lazy load plugin a selector. You can say "just images in this div" or "just images with this class" however you like. I chose to do this:

<script>
  // Run once the DOM is ready, then lazy-load every <img class="lazy">.
  $(function() {
    $("img.lazy").lazyload({ effect: "fadeIn" });
  });
</script>

The most important part is that the img element that you generate should include a TINY image for the src. That src= will always be loaded, no matter what, since that's what browsers do. Don't believe any lazy loading solution that says otherwise. I use the 1px gray image from the GitHub repo. Also, if you can, set the final height and width of the image you're loading to ease layout.

<a href="/363/html5-javascript-chrome-and-the-web-platform-with-paul-irish" class="showCard">
  <img data-original="/images/shows/363.jpg" class="lazy" src="/images/grey.gif" width="212" height="212" alt="HTML5, JavaScript, Chrome and the Web Platform with Paul Irish" />
  <span class="shownumber">363</span>
  <div class="overlay">HTML5, JavaScript, Chrome and the Web Platform with Paul Irish</div>
</a>

The image you're going to ultimately load is in the data-original attribute. It will be loaded when the area where the image is supposed to be enters the current viewport. You can set the threshold and cause the images to load a little earlier if you prefer, like perhaps 200 pixels before it's visible.

$("img.lazy").lazyload({ threshold : 200 });
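To make the threshold idea concrete, here is a small sketch of the kind of check a lazy loader performs on scroll. It illustrates the general technique and is not the plugin's actual source; `shouldLoad` and its parameters are hypothetical names, and all positions are in pixels measured from the top of the page.

```javascript
// Decide whether an image should start loading, given the scroll position.
// This mirrors the general idea behind a lazy-load threshold; it is not
// the plugin's actual source code.
function shouldLoad(imageTop, imageHeight, scrollTop, viewportHeight, threshold = 0) {
  const viewTop = scrollTop - threshold;                     // extend the window upward
  const viewBottom = scrollTop + viewportHeight + threshold; // and downward
  const imageBottom = imageTop + imageHeight;
  // Load when the image's box overlaps the (extended) viewport.
  return imageBottom >= viewTop && imageTop <= viewBottom;
}

// An image whose top edge is at 1100px, on an 800px-tall viewport scrolled to 200px:
console.log(shouldLoad(1100, 212, 200, 800));      // false: 100px below the fold
console.log(shouldLoad(1100, 212, 200, 800, 200)); // true: inside the 200px threshold
```

A larger threshold hides the loading delay from the reader at the cost of fetching a few images that may never be seen.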

After I added this change, I let it run for a day and it chilled out my server completely. There isn't that intense burst of 300+ requests for images and bandwidth is way down.
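For a feel of why the difference is so large, here is a back-of-the-envelope sketch comparing eager and lazy loading. Every number in it is a hypothetical placeholder (average image size, page views, how many images a typical visitor scrolls past), not a measurement from the site.

```javascript
// Rough estimate of outgoing image bandwidth for one page.
// All inputs below are hypothetical placeholders, not measured values.
function estimateImageBytes(imageCount, avgImageBytes, pageViews) {
  return imageCount * avgImageBytes * pageViews;
}

function toMegabytes(bytes) {
  return bytes / (1024 * 1024);
}

// Eager loading: every visitor downloads every image on the page.
const eager = estimateImageBytes(360, 20 * 1024, 100);
// Lazy loading: assume a typical visitor only scrolls past 24 images.
const lazy = estimateImageBytes(24, 20 * 1024, 100);

console.log(toMegabytes(eager).toFixed(1) + " MB eager");
console.log(toMegabytes(lazy).toFixed(1) + " MB lazy");
```

Plug in your own image sizes and traffic to see where your archive page lands.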

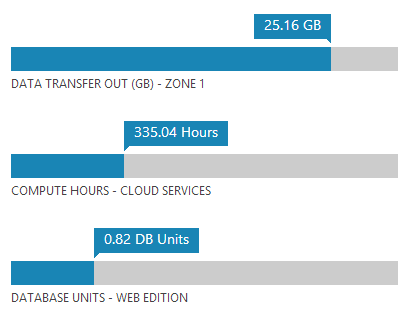

10 Websites in 1 VM vs 10 Websites in Shared Mode

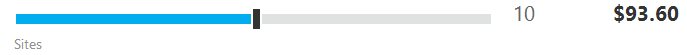

I'm running 10 websites on Azure, currently. One of the things that isn't obvious (unless you read) in the pricing calculator is that when you switch to a Reserved Instance on one of your Azure Websites, all of your other shared sites within that datacenter will jump into that reserved VM. You can actually run up to 100 (!) websites in that VM instance and this ends up saving you money.

Aside: It's nice also that these websites are 'in' that one VM, but I don't need to manage or ever think about that VM. Azure Websites sits on top of the VM and it's automatically updated and managed for me. I deploy to some of these websites with Git, some with Web Deploy, and some I update via FTP. I can also scale the VM dynamically and all the Websites get the benefit.

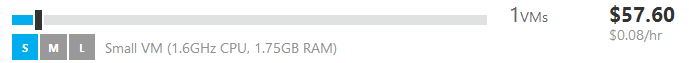

Think about it this way, running 1 Small VM on Azure with up to 100 websites (again, I have 10 today)...

...is cheaper than running those same 10 websites in Shared Mode.

This pricing is as of March 30, 2013 for those of you in the future.
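The comparison boils down to per-site pricing versus one flat price. Here is a sketch of that arithmetic; the prices passed in are made-up placeholders (check the pricing calculator for real rates), and `cheaperOption` and `breakEvenSites` are hypothetical helper names.

```javascript
// Compare per-site Shared pricing with one flat-priced Reserved instance.
// The prices passed in below are made-up placeholders, not Azure's real rates.
function cheaperOption(siteCount, sharedPricePerSite, reservedPricePerInstance) {
  const sharedTotal = siteCount * sharedPricePerSite;
  const reservedTotal = reservedPricePerInstance; // one VM hosts all the sites
  return {
    sharedTotal,
    reservedTotal,
    cheaper: reservedTotal < sharedTotal ? "reserved" : "shared",
  };
}

// Smallest number of sites at which the single reserved instance is strictly cheaper.
function breakEvenSites(sharedPricePerSite, reservedPricePerInstance) {
  return Math.floor(reservedPricePerInstance / sharedPricePerSite) + 1;
}

console.log(cheaperOption(10, 10, 60));
console.log(breakEvenSites(10, 60));
```

Once you are past the break-even count, every additional site on the reserved instance is effectively free until the VM runs out of headroom.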

The point here being, you can squeeze a LOT out of a small VM, and I plan to keep squeezing, caching, and optimizing as much as I can so I can pay as little as possible in the Cloud. If I can get this VM to do less, then I will be able to run more sites on it and save money.

The result of all this is that I'm starting to code differently now that I can see the pennies add up based on specific changes.

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.


And the customer/owner doesn't see any difference in cost.

But if you are in the cloud, the company that has the more optimized solution sees direct benefits (in $$$).

It seems like someone would easily be able to spike your outgoing bandwidth leaving you with few options.

Option 1: price cap - however this would effectively shut down your site/sites

Option 2: IP blocking - is this possible with Azure (and can it be done dynamically)? Also, it does not protect the site if the malicious user detects this and switches their IP

Are there other options?

Next optimization. Serve the images with long duration cache headers. That way a specific client browser instance will only request the image once.

Makes your site much snappier, will delight mobile users and anyone else who pays for incoming bandwidth, and reduces your outgoing bandwidth cost too.

Best article I've seen is here: http://blog.httpwatch.com/2007/12/10/two-simple-rules-for-http-caching/

- Richard

I don't think you can run 100 websites on one VM. Either you're running out of memory running all of those side by side, or your warm up times make using those sites a pain.

Why not host your blog completely statically? I run my brother's photography website completely statically off S3/CloudFront, and keep the "content management" part on a VM where warm up times don't matter.

Bla bla bla... How stupid do you think are the ones who read your blog ???

Azure looks cooler and cooler every time I read about it. It does look a little bit expensive at first, but I think all the cool stuff makes it worth it.

As for DDoS, yes I would need to hook up cloudflare or throttle the traffic if that happened.

Dave - yes, Jekyll or something like that would work also, but I consider aggressive caching to be close to running static. Great point though!

Edward - DB is either SQL Compact or it's in a DB in the same data center, so you're not charged for bandwidth between the site and DB.

Anil - yes, images (for now) are served normally in RSS.

Very good point. I think Azure should have a max daily/monthly limit so that no one wakes up to find a big bill waiting for him because someone consumed his resources; it could even be a web spider.

How are you handling your caching? Just doing ASP.NET Caching? Or something else?

Have you ever heard complaints about ajax.aspnetcdn.com being blocked anywhere? We do parts catalogs for car dealerships and I used to use a script tag similar to your example:

<script src="//ajax.aspnetcdn.com/ajax/jQuery/jquery-1.7.2.min.js" type="text/javascript"></script>

For some customers, inside their dealership the site didn't work. We tracked it down to ajax.aspnetcdn.com not resolving or being outright blocked. Now we host jquery-x.x.x.min.js ourselves as a result.

Just looking at the archives page on hanselminutes.com, you could further cut down on bandwidth by reusing the same 3 images once you hit show #248.

It looks like you're cycling through the same 3 images, but rendering each from a different URL which means browsers are going to request those images instead of using one from cache (as they would if you reused the images).

Brian - wow, NEVER heard that, but you can ask @damianedwards as he runs it

Salman - Thanks for the tip!

Bennor - That's true! I should, but soon I'll have unique images for all the shows. I'm doing it a little at a time.

Sam - Yes, but if it was free, it wouldn't really be the service that the public (you) use and I wouldn't have written this post! ;)

There are different opinions on this topic. Personally I prefer the optimization baseline idea - as this isn't only valid for your servers but also for 'smart' clients on mobile networks - even though the bandwidth is growing constantly there too.

I once heard (sorry, no references, and most probably it's not valid anymore) that at SAP people shouldn't think too much about memory or CPU power, as it may just be there. Which is kind of true if we look around at today's systems and networks. But if we look back at what people achieved, and still achieve, within embedded systems with much less memory and bandwidth available, just imagine what we really could do with today's net, servers and clients. Amazing! (Curiosity may be a valid current example, even if it is far away from an everyday web coder's life in some respects.)

So: thanks for the post and thanks for highlighting how important optimization may and should still be - besides the pennies (and energy) it may save in the long run.

Just a note to say the main site looks nice, but the way the blog posts flow at http://www.hanselman.com/blog is quite jarring (the background on the title of each post). I don't know how many people actually read your stuff that way rather than via RSS, etc.

Keep up the good work!

I read your blog in a browser, but the podcast is always in my mobile phone.

When you changed the design my phone took a long time to load your site. Your optimizations will help not only your resource consumption but also me as the user. I personally prefer no pictures at all when I use the phone.

This article just got put in my +Pocket (which I learned about from you)

I can't wait to read this article since I had a bit of a bad experience the last time I tried to learn about some of the cloud services stuff out there.

I was doing a tutorial on Amazon AWS and got interrupted halfway through. A month later I got a bill for $150 :(

Clicking the comments link downloads it again. It looks funny in fiddler, cause when I load the page I see 3 simultaneous mp3 downloads, maybe there are multiple streams in IE or maybe it's just how fiddler works.

Curious what your thoughts are on using preload=metadata? Would be nice to set an initial buffer size.

They block ALL CDNs here at my customer site, which as you can imagine is very frustrating since tons of sites fail to render at all. For example, http://www.outlook.com does not render. IMO all sites should implement a failsafe so that if the CDN fails to load your content, you fall back to a local copy. There is JavaScript out there that does this, which I implemented on my site; it works decently enough.

Great blog post, I too am into penny-pinching when it comes to cloud-hosting, and I've done quite a bit of optimising over the years to keep my sites resource-efficient, and people will be quite surprised how much you can get out of a Small instance (hell I've got 2 production sites running on a XS instance - not busy sites mind ;-)).

I actually have a question about the MSDN benefits, I'm a VS Ultimate subscriber and we are looking to host our large government website in Azure, however I wanted to use my MSDN Azure subscription to set it up for testing, will my account allow me to use a Small VM instance (1) within the subscription for free? It says 1500 compute hours, but does that cover VMs? And if so, what size VM, it's a little confusing.

PS Liking the new site design btw

Thanks

Kev

Thanks for clearing up, does having 1500 compute mean I could potentially have 2 x Small running for a month?

Kev

Out of interest, doesn't using lazyload affect the browser caching? On face value, you're saving on first visits, but losing on returns - or am I missing something?

[hair splitting mode on]

301 is permanent redirect, not modified is 304

[hair splitting mode off]

10 WEBSITES IN 1 VM VS 10 WEBSITES IN SHARED MODE

This is something I've been wondering about for a while now. The number of websites I could run in the 1 VM is what has concerned me the most. I'm probably only ever going to have 5 - 10 sites. I'm interested where you got the info "running 1 Small VM on Azure with up to 100 websites". If I can see that on the Azure site then I will feel more comfortable giving it a go for my sites.

http://www.windowsazure.com/en-us/pricing/calculator/ click on Reserved tab.

Thanks for the quick reply. I have been looking at Virtual Machines calculator, http://www.windowsazure.com/en-us/pricing/calculator/?scenario=virtual-machines, hence why I couldn't find the info.

Sorry to hit you with another question.

Do you get Remote Desktop access with Web Sites - Reserved? I will want to remote in to setup RavenDB.

If Web Sites - Reserved does not have Remote Desktop, then why not use Virtual Machines? The Small option has the same CPU & RAM as Web Sites - Reserved. I assume you don't get custom domain names, FTP, Git, TFS, and Web Deploy support built in, but that's not hard to implement.

Tim

Are there any advantages to going with a small VM vs reserved websites? It looks like they are both a VM and their prices are within a few dollars of each other. Thanks!

Howdy Scott,

So, last week (3 Jun 2013) ScottGu announced a new pricing model for MSDN Subscriptions (see here). Some great improvements, but it does add some restrictions to our use of this Azure/MSDN Subscription offer. It looks to me like the new "Dev/Test" only model precludes using our Azure/MSDN Subscription offer to run production sites - is that right, Scott? That would mean we wouldn't be able to run, say, a virtual ASP.NET conference anymore? That's a real shame.

Cheers,

Jon
